Feed aggregator

DeepSeek OCR Review

Mounting a Block Volume in OCI: A Quick Reference Guide

A quick reference guide for mounting block volumes to OCI compute instances running Oracle Linux Server 8.9

The post Mounting a Block Volume in OCI: A Quick Reference Guide appeared first on DBASolved.

M-Files Sharing Center: Improved collaboration

As I’ve said many times, I like M-Files because each monthly release not only fixes bugs, but also introduces new features. This time (December 2025), they worked on the sharing feature.

It’s not entirely new, as it was already possible to create links to share documents with external users. However, it was clearly not user-friendly and difficult to manage. Let’s take a closer look.

The Sharing Center in M-Files allows users to share content with external users by generating links that can be public or passcode-protected.

Key capabilities include:

- Access Control: Modify or revoke permissions instantly to maintain security.

- Audit & Compliance: Track sharing history for regulatory compliance and internal governance.

- External Collaboration: Share documents with partners or clients without exposing your entire repository.

The capabilities will evolve in the next releases:

- Centralized Overview: See all active shares in one place. No more guessing who has access!

- Editing Permissions: Allow external users to update shared documents.

And many more features will be added in upcoming releases!

A good internal content management system is crucial for maintaining data consistency and integrity. But what about when you share these documents via email, a shared directory, or cloud storage?

With the Sharing Center, you keep control over your documents and can see who has accessed them and when.

Need to end a collaboration? With one click, you can immediately revoke access.

Providing an official way to share documents with external people helps prevent users from using untrusted cloud services and other methods that can break security policies.

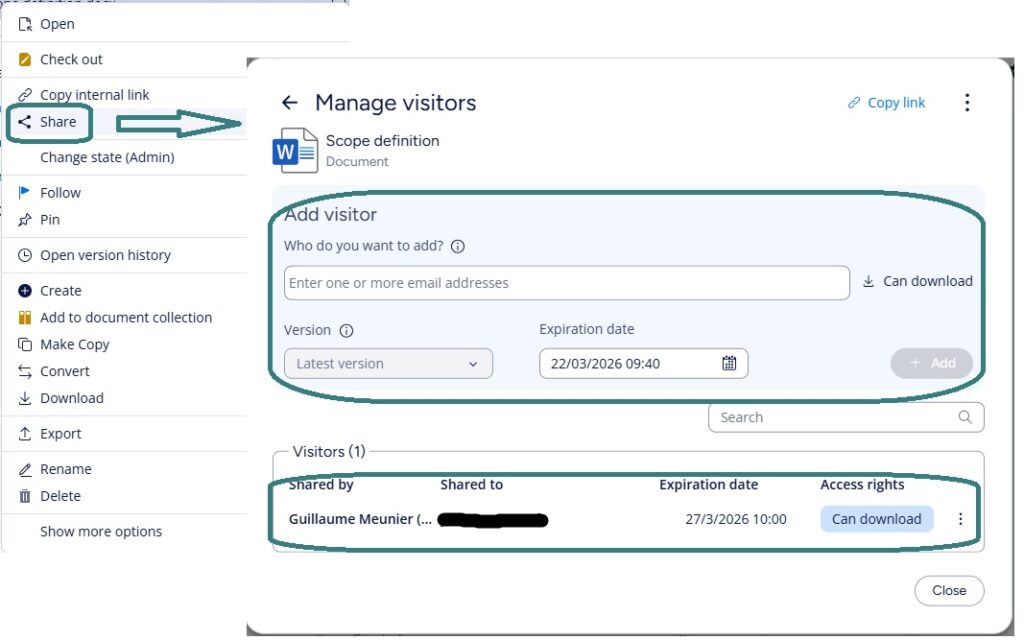

It’s hard to make it any simpler. Just right-click on the document and select “Share.”

A pop-up will ask you for an email address and an expiration date, and that’s it!

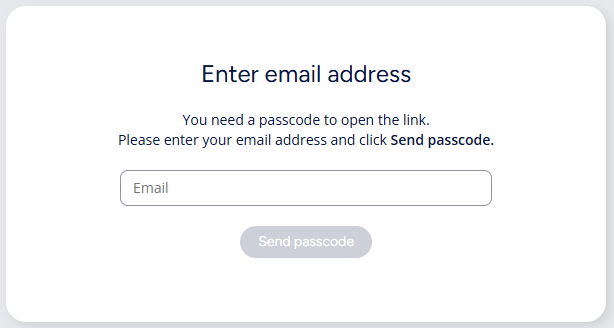

When external users open the link, they are asked to enter their email address. A passcode is then sent to that address for authentication.

Another interesting feature is that the generated link remains the same when you add or remove users, change permissions, or stop and resume sharing.
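To make this behavior concrete, here is a minimal Python sketch of how such a share could be modeled. Everything in it (the `Share` class, its fields, the six-digit passcode) is a hypothetical illustration and not the M-Files implementation or API. The point it shows is that the link identifier is generated once, while recipients, expiry and the active flag can all change around it.

```python
import secrets
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Share:
    """Hypothetical model of one external share (not the M-Files API)."""
    document_id: str
    expires_on: date
    # The link is generated once and never changes afterwards.
    link_id: str = field(default_factory=lambda: secrets.token_urlsafe(16))
    recipients: set = field(default_factory=set)
    active: bool = True
    pending_codes: dict = field(default_factory=dict)

    def add_recipient(self, email: str) -> None:
        self.recipients.add(email.lower())

    def remove_recipient(self, email: str) -> None:
        self.recipients.discard(email.lower())
        self.pending_codes.pop(email.lower(), None)

    def request_passcode(self, email: str, today: date):
        """Return a one-time passcode to email to the visitor, or None if denied."""
        email = email.lower()
        if not self.active or today > self.expires_on or email not in self.recipients:
            return None
        code = f"{secrets.randbelow(10**6):06d}"
        self.pending_codes[email] = code
        return code

    def verify(self, email: str, code: str) -> bool:
        # Passcodes are single-use: the pending code is consumed on every attempt.
        expected = self.pending_codes.pop(email.lower(), None)
        return self.active and expected is not None and secrets.compare_digest(expected, code)
```

Stopping and resuming the share only toggles `active`, and adding or removing recipients only edits the set, so `link_id` stays the same throughout.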

Small Tips

Set expiration dates: The easier it is to share a document, the easier it is to forget about it. Therefore, it is important to set an expiration date for shared documents.

Use role-based permissions: Sharing information outside the organization is sensitive, so controlling who can perform this action is important.

Regularly review active shares: Even if an expiration date has been set, it is a good habit to ensure that only necessary access remains.

M-Files already provides a great tool for external collaboration: Hubshare. With this collaboration portal, you can do much more than share documents. Of course, this tool comes at an additional cost. The M-Files Sharing Center solves another problem: how to occasionally share documents outside the organization without compromising security or the benefits M-Files provides internally.

The first version of the Sharing Center is currently limited to downloading, but an editing feature and a dashboard for quickly identifying shared documents across the repository will be included in an upcoming release. The simplicity and relevance of these features will undoubtedly make M-Files stand out even more from its competitors.

If you are curious about it, feel free to ask us!

The post M-Files Sharing Center: Improved collaboration appeared first on dbi Blog.

Data Anonymization as a Service with Delphix Continuous Compliance

In the era of digital transformation, attack surfaces are constantly evolving and cyberattack techniques are becoming increasingly sophisticated. Maintaining the confidentiality, integrity, and availability of data is therefore a critical challenge for organizations, from both an operational and a regulatory standpoint (GDPR, ISO 27001, NIST). This is why data anonymization has become crucial.

Contrary to a widely held belief, the risk is not limited to the production environment. Development, testing, and pre-production environments are prime targets for attackers, as they often benefit from weaker security controls. The use of production data that is neither anonymized nor pseudonymized directly exposes organizations to data breaches, regulatory non-compliance, and legal sanctions.

Development teams require realistic datasets in order to:

- Test application performance

- Validate complex business processes

- Reproduce error scenarios

- Train Business Intelligence or Machine Learning algorithms

However, the use of real data requires the implementation of anonymization or pseudonymization mechanisms ensuring:

- Preservation of functional and referential consistency

- Prevention of data subject re-identification

Among the possible anonymization techniques, the main ones include:

- Dynamic Data Masking, applied on-the-fly at access time but which does not anonymize data physically

- Tokenization, which replaces a value with a surrogate identifier

- Cryptographic hashing, with or without salting
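The three techniques behave quite differently, which a small Python sketch makes visible. The function and class names are hypothetical, and a keyed hash (HMAC-SHA256) stands in for "salted hashing": it is one common way to salt, and it keeps the output deterministic for a given key, so the same input always maps to the same pseudonym. Tokenization keeps a reversible lookup table that must stay in the secure zone, and dynamic masking only changes what a reader sees, never the stored value.

```python
import hashlib
import hmac
import secrets


def hash_pseudonym(value: str, key: bytes) -> str:
    """Keyed (salted) hash: deterministic for one key, so joins still match.

    Truncated to 16 hex characters (64 bits) for readability in this sketch.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


class TokenVault:
    """Tokenization: random surrogate values plus a protected lookup table."""

    def __init__(self):
        self._forward = {}  # real value -> token
        self._reverse = {}  # token -> real value (must never leave the secure zone)

    def tokenize(self, value: str) -> str:
        if value not in self._forward:
            token = "TKN-" + secrets.token_hex(6)
            while token in self._reverse:  # regenerate on the rare collision
                token = "TKN-" + secrets.token_hex(6)
            self._forward[value] = token
            self._reverse[token] = value
        return self._forward[value]

    def detokenize(self, token: str) -> str:
        return self._reverse[token]


def dynamic_mask(email: str) -> str:
    """Dynamic masking: alters only what a query returns; storage is unchanged."""
    user, _, domain = email.partition("@")
    return user[:1] + "***@" + domain
```

Note that an unsalted hash of a low-entropy value such as an email address can be reversed by brute force, which is why the key (salt) matters.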

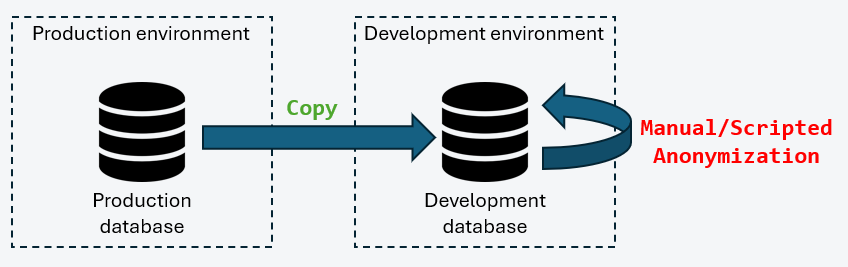

In this scenario, a full backup of the production database is restored into a development environment. Anonymization is then applied using manually developed SQL scripts or ETL processes.

This approach presents several critical weaknesses:

- Temporary exposure of personal data in clear text

- Lack of formal traceability of anonymization processes

- Risk of human error in scripts

- Non-compliance with GDPR requirements

This model should therefore be avoided in regulated environments.

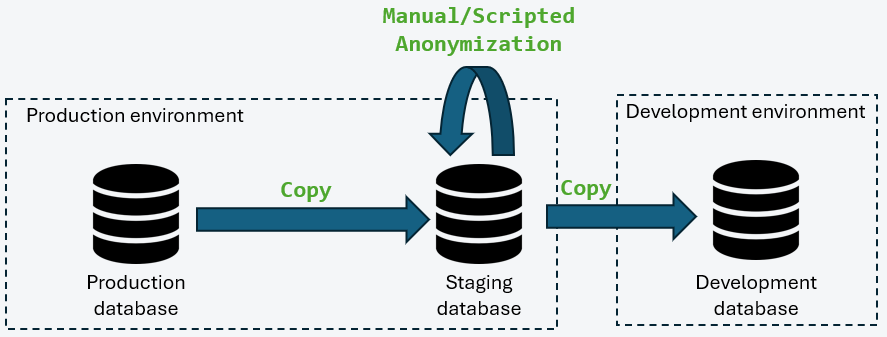

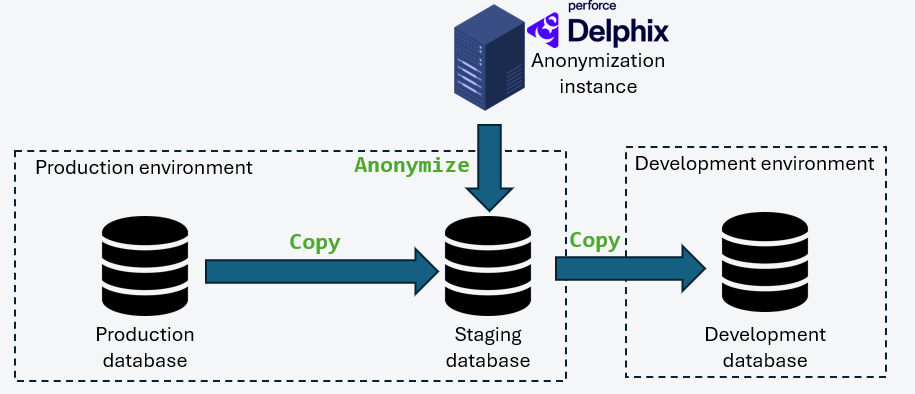

Data copy via a Staging Database in Production

This model introduces an intermediate staging database located within a security perimeter equivalent to that of production. Anonymization is performed within this secure zone before replication to non-production environments.

This approach makes it possible to:

- Ensure that no sensitive data in clear text leaves the secure perimeter

- Centralize anonymization rules

- Improve overall data governance

However, several challenges remain:

- Versioning and auditability of transformation rules

- Governance of responsibilities between teams (DBAs, security, business units)

- Maintaining inter-table referential integrity

- Performance management during large-scale anonymization
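Referential integrity is the challenge most easily broken by hand-written scripts: if a key is masked one way in the parent table and another way in the child table, joins silently stop working. A minimal Python sketch of a centralized, versioned rule catalogue that avoids this is shown below. The rule names, the `rules-v1` version label and the audit record format are all hypothetical; the technique is simply to apply the same deterministic rule and key to every column that carries the same business key.

```python
import hashlib
import hmac

# Hypothetical central rule catalogue kept in the staging zone:
# (table, column) -> masking rule. Versioning it gives auditors a reference.
RULES_VERSION = "rules-v1"
RULES = {
    ("customers", "customer_id"): "pseudonym",
    ("customers", "email"): "pseudonym",
    ("orders", "customer_id"): "pseudonym",  # same rule as the parent key
}
AUDIT_LOG = []  # (rules version, table, row count) for every masking run


def pseudonym(value, key):
    """Deterministic keyed hash: equal inputs give equal outputs for one key."""
    return hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()[:12]


MASKERS = {"pseudonym": pseudonym}


def mask_table(table, rows, key):
    """Apply the catalogue rules to every row; unlisted columns pass through."""
    masked = []
    for row in rows:
        new_row = dict(row)  # never modify the source rows in place
        for column, value in row.items():
            rule = RULES.get((table, column))
            if rule is not None:
                new_row[column] = MASKERS[rule](value, key)
        masked.append(new_row)
    AUDIT_LOG.append((RULES_VERSION, table, len(rows)))
    return masked


def orphan_rows(parents, children, key_column):
    """Child rows whose foreign key no longer matches any parent key."""
    known = {p[key_column] for p in parents}
    return [c for c in children if c[key_column] not in known]
```

Running `orphan_rows` after masking is a cheap post-check: an empty result means the parent/child relationship survived anonymization.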

In this architecture, Delphix is integrated as the central engine for data virtualization and anonymization. The Continuous Compliance module enables process industrialization through:

- An automated data profiler identifying sensitive fields

- Deterministic or non-deterministic anonymization algorithms

- Massively parallelized execution

- Orchestration via REST APIs integrable into CI/CD pipelines

- Full traceability of processing for audit purposes
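To give an idea of what a data profiler does, here is a deliberately simple Python sketch that flags a column as sensitive from its name or from the share of sampled values matching a pattern. The patterns, name hints and the 80% threshold are hypothetical choices for illustration; this is not how the Delphix profiler is implemented, which relies on its own, much richer rule sets.

```python
import re

# Hypothetical detection patterns, checked in this order.
PATTERNS = {
    "EMAIL": re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$"),
    "IBAN": re.compile(r"^[A-Z]{2}\d{2}[A-Z0-9]{11,30}$"),
    "PHONE": re.compile(r"^\+?[0-9 ()-]{7,20}$"),
}
# Hypothetical column-name hints that are enough on their own.
NAME_HINTS = {"email": "EMAIL", "mail": "EMAIL", "iban": "IBAN", "phone": "PHONE"}


def profile_column(name, sample, threshold=0.8):
    """Return a sensitivity label for a column, or None if nothing is detected."""
    lowered = name.lower()
    for hint, label in NAME_HINTS.items():
        if hint in lowered:
            return label
    values = [v for v in sample if v]  # ignore empty values
    if not values:
        return None
    for label, pattern in PATTERNS.items():
        hits = sum(1 for v in values if pattern.match(str(v)))
        if hits / len(values) >= threshold:
            return label
    return None
```

Checking the data itself, and not only column names, is what catches sensitive values hidden in generically named columns.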

This approach enables the rapid provisioning of compliant, reproducible, and secure databases for all technical teams.

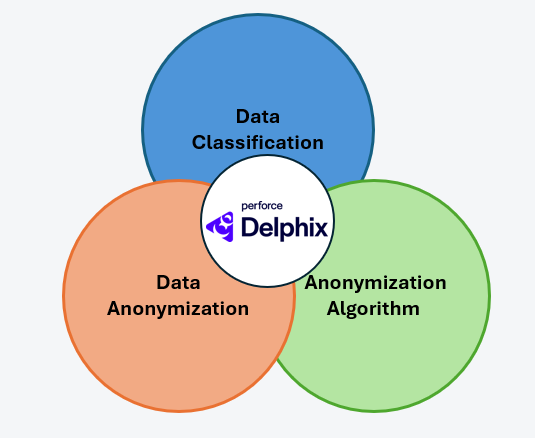

Database anonymization should no longer be viewed as a one-time constraint but as a structuring process within the data lifecycle. It is based on three fundamental pillars:

- Governance

- Pipeline industrialization

- Regulatory compliance

An in-house implementation is possible, but it requires a high level of organizational maturity, strong skills in anonymization algorithms, data engineering, and security, as well as a strict audit framework. Solutions such as Delphix provide an industrialized response to these challenges while reducing both operational and regulatory risks.

To take this further, Microsoft’s article explaining the integration of Delphix into Azure pipelines analyzes the same issues discussed above, but this time in the context of the cloud: Use Delphix for Data Masking in Azure Data Factory and Azure Synapse Analytics

What’s next?

This use case is just one example of how Delphix can be leveraged to optimize data management and compliance in complex environments. In upcoming articles, we will explore other recurring challenges, highlighting both possible in-house approaches and industrialized solutions with Delphix, to provide a broader technical perspective on data virtualization, security, and performance optimization.

What about you?

How confident are you about the management of your confidential data?

If you have any doubts, please don’t hesitate to reach out to me to discuss them!

The post Data Anonymization as a Service with Delphix Continuous Compliance appeared first on dbi Blog.

Avoiding common ECM pitfalls with M-Files

Enterprise Content Management (ECM) systems promise efficiency, compliance, and better collaboration.

Many organizations struggle to realize these benefits because of common pitfalls in traditional ECM implementations.

Let’s explore these challenges and see how M-Files addresses them with its unique approach.

Pitfall 1: Information Silos

Traditional ECM systems often replicate the same problem they aim to solve: information silos. Documents remain locked in departmental repositories or legacy systems, making cross-functional collaboration difficult.

How does M-Files address this challenge?

M-Files connects to existing repositories without requiring migration. Its connector architecture allows users to access content from multiple sources through a single interface, breaking down silos without disrupting operations. Additionally, workflows defined in M-Files can be applied to linked content, providing incredible flexibility.

With folder-based systems, users must know exactly where a document is stored. This leads to wasted time spent searching for files. It also increases the risk of creating duplicates because users who cannot find a document may be tempted to create or add it again. Another common issue is that permissions are defined by folder. This means that, in order to give users access to data, the same file must be copied to multiple locations.

How M-Files Solves It:

Instead of folders, M-Files uses metadata-driven organization. Users search by “what” the document is (e.g., invoice, contract) rather than “where” it’s stored. This makes retrieval fast and intuitive, and it allows users to personalize how the data is displayed based on their needs.
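The difference with folders can be sketched in a few lines of Python. The `Document`, `find` and `view` names are hypothetical and this is not the M-Files API; the idea shown is that each document is stored once with properties, and any "folder" is just a grouping computed on demand, so the same file can appear in several views without being copied.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    """Hypothetical document record: stored once, described by properties."""
    title: str
    properties: dict = field(default_factory=dict)


def find(documents, **criteria):
    """Search by what a document is (its properties), not where it is stored."""
    return [d for d in documents
            if all(d.properties.get(k) == v for k, v in criteria.items())]


def view(documents, group_by):
    """Build a folder-like view on the fly by grouping on any property."""
    groups = {}
    for d in documents:
        groups.setdefault(d.properties.get(group_by, "(none)"), []).append(d.title)
    return groups
```

For example, `view(docs, "customer")` and `view(docs, "type")` present the same documents in two different hierarchies, with no duplicate files.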

If an ECM system is hard to use (for example, because it requires a lot of information to be filled in or offers very restricted possibilities), employees will bypass it, creating compliance risks, inefficiencies and data loss.

How M-Files addresses that:

M-Files seamlessly integrates with familiar tools, such as Microsoft Teams, Outlook, and SharePoint, reducing friction. Its simple interface allows users to quickly become familiar with the software, and its AI-based suggestions make manipulating data easy, ensuring rapid adoption.

Regulated industries face strict requirements for document control, audit trails, and retention. Traditional ECM systems often require manual processes to stay compliant.

How M-Files helps:

M-Files includes a workflow engine that automates compliance with version control, audit logs, and retention policies. This ensures approvals and signatures occur in the correct order, thereby reducing human error and delays.
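A workflow engine enforces order by only allowing specific state transitions, and logs each one. The Python sketch below shows that idea with a hypothetical four-state approval workflow; the state names and the `Workflow` class are illustrative assumptions, not M-Files workflow definitions.

```python
# Hypothetical approval workflow: each state lists the only states it may move to.
TRANSITIONS = {
    "Draft": {"In review"},
    "In review": {"Approved", "Draft"},
    "Approved": {"Signed"},
    "Signed": set(),
}


class WorkflowError(Exception):
    """Raised when a step is attempted out of order."""


class Workflow:
    def __init__(self):
        self.state = "Draft"
        self.audit_log = []  # (from state, to state, user) kept for auditors

    def move(self, target, user):
        if target not in TRANSITIONS[self.state]:
            raise WorkflowError(f"{self.state} -> {target} is not allowed")
        self.audit_log.append((self.state, target, user))
        self.state = target
```

Trying to sign a draft directly raises `WorkflowError`, so a signature can never precede an approval, and the audit log records who performed each step.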

As organizations grow, ECM systems can become bottlenecks due to their rigid architectures.

M-Files offers several solutions: cloud, on-premises, and hybrid deployment options, ensuring scalability and flexibility. Its architecture supports global operations without sacrificing performance and helps streamline costs.

Selecting an ECM involves more than comparing the costs (licenses, infrastructure, etc.) of different market players. It is also, above all else, a matter of identifying the productivity gains, reduction in repetitive task workload, and efficiency that such a solution provides.

If you’re feeling overwhelmed by the vast world of ECM, don’t hesitate to ask us for help.

The post Avoiding common ECM pitfalls with M-Files appeared first on dbi Blog.

The AI-Powered DBA: Why Embracing Artificial Intelligence Is No Longer Optional

Discover why AI elevates the DBA role rather than replacing it, and how your expertise becomes more valuable than ever.

The post The AI-Powered DBA: Why Embracing Artificial Intelligence Is No Longer Optional appeared first on DBASolved.

Exascale storage architecture

In this blog post, we will explore the Exascale storage architecture and the processes related to it. Before diving in, a quick note to avoid any confusion: even though the previous article focused on Exascale Infrastructure and its benefits in terms of small footprint and hyper-elasticity based on modern cloud characteristics, it is important to keep in mind that Exascale is an Exadata technology, not a cloud-only technology. You can benefit from it in non-cloud deployments as well, such as on an Exadata Database Machine deployed in your own data center.

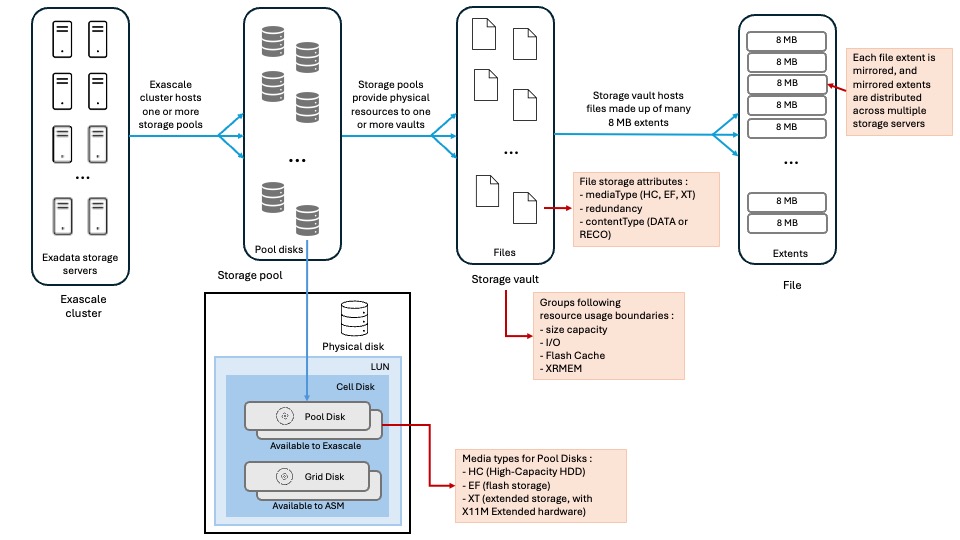

Exascale storage components

Here is the overall picture:

Exascale cluster

An Exascale cluster is composed of Exadata storage servers that provide storage to Grid Infrastructure (GI) clusters and databases. An Exadata storage server can belong to only one Exascale cluster.

Software services (included in the Exadata System Software stack) run on each Exascale cluster to manage the cluster resources made available to GI and databases, namely pool disks, storage pools, vaults, block volumes and many others.

With Exadata Database Service on Exascale Infrastructure, the number of Exadata storage servers in the Exascale cluster can be very large (hundreds, maybe even thousands of storage servers) to enable cloud-scale storage resource pooling.

Storage pools

A storage pool is a collection of pool disks (see below for details about pool disks).

Each Exascale cluster requires at least one storage pool.

A storage pool can be dynamically reconfigured by resizing pool disks, allocating more pool disks, or adding Exadata storage servers.

The pool disks found inside a storage pool must be of the same media type.

Pool disks

A pool disk is physical storage space allocated from an Exascale-specific cell disk to be integrated into a storage pool.

Each physical disk in a storage server is presented as a LUN in the storage server OS, and a cell disk is created as a container for all Exadata-related partitions within the LUN. Each partition in a cell disk is then designated as a pool disk for Exascale (or as a grid disk when ASM is used instead of Exascale).

A media type is associated with each pool disk based on the underlying storage device and can be one of the following:

- HC: high capacity storage using hard disk drives

- EF: extreme flash storage devices

- XT: extended storage using hard disk drives found in Exadata Storage Server X11M Extended (XT) hardware
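The relationships between storage pools, pool disks and media types can be summarized as a small data model. The Python sketch below is a hypothetical illustration of the rules stated above (a pool holds pool disks of a single media type, and grows by adding disks or storage servers); the class names and cell disk names are assumptions, not an Exascale API.

```python
from dataclasses import dataclass, field
from enum import Enum


class MediaType(Enum):
    HC = "high capacity (hard disk drives)"
    EF = "extreme flash"
    XT = "extended (hard disk drives, X11M XT)"


@dataclass(frozen=True)
class PoolDisk:
    storage_server: str
    cell_disk: str
    size_gb: int
    media_type: MediaType


@dataclass
class StoragePool:
    """Hypothetical model of the rules described above, not an Exascale API."""
    name: str
    media_type: MediaType
    disks: list = field(default_factory=list)

    def add_disk(self, disk: PoolDisk) -> None:
        # All pool disks in a storage pool must share the same media type.
        if disk.media_type is not self.media_type:
            raise ValueError(f"{self.name} only accepts {self.media_type.name} pool disks")
        self.disks.append(disk)

    @property
    def capacity_gb(self) -> int:
        return sum(d.size_gb for d in self.disks)

    @property
    def storage_servers(self) -> list:
        return sorted({d.storage_server for d in self.disks})
```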

Vaults are logical storage containers used for storing files and are allocated from storage pools. By default, without specific provisioning attributes, vaults can use all resources from all storage pools of an Exascale cluster.

Here are the two main services provided by vaults:

- Security isolation: a security perimeter is associated with vaults based on user access controls which guarantee a strict isolation of data between users and clusters.

- Resource control: storage pool resource usage is configured at the vault level for attributes like storage space, IOPS, XRMEM and flash cache sizing.

For those familiar with ASM, think of vaults as the equivalent of ASM disk groups. For example, instance parameters like ‘db_create_file_dest’ and ‘db_recovery_file_dest’ reference vaults instead of ASM disk groups by using the ‘@vault_name’ syntax.
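The two services vaults provide, security isolation and resource control, can be pictured with a small Python model. The `Vault` class, its attributes and the `QuotaExceeded` exception are hypothetical, and only the storage space limit is modeled (IOPS, XRMEM and flash cache limits are left out for brevity); the `reference` property simply builds the ‘@vault_name’ form mentioned above.

```python
from dataclasses import dataclass, field


class QuotaExceeded(Exception):
    """Raised when a file would push the vault past its space limit."""


@dataclass
class Vault:
    """Hypothetical vault: an access list plus a space limit (not an Exascale API)."""
    name: str
    space_limit_gb: int
    acl: set = field(default_factory=set)      # users/clusters allowed in the vault
    files: dict = field(default_factory=dict)  # file name -> size in GB

    @property
    def reference(self) -> str:
        # The '@vault_name' form used by parameters such as db_create_file_dest.
        return "@" + self.name

    @property
    def used_gb(self) -> int:
        return sum(self.files.values())

    def create_file(self, user: str, file_name: str, size_gb: int) -> None:
        if user not in self.acl:  # security isolation
            raise PermissionError(f"{user} has no access to vault {self.name}")
        if self.used_gb + size_gb > self.space_limit_gb:  # resource control
            raise QuotaExceeded(f"vault {self.name} limited to {self.space_limit_gb} GB")
        self.files[file_name] = size_gb
```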

Since attributes like redundancy, type of file content and type of media are set at the file level instead of at the logical storage container level, there is no need to organize vaults the way we did for disk groups. For instance, we don’t need to create one vault for data and a second vault for recovery files, as we are used to doing with ASM.

Besides database files, vaults can also store other types of files, even though it is recommended to store non-database files on block volumes. That’s because Exascale is optimized for storing large files such as database files, whereas regular files are typically much smaller and better suited to block volumes.

Files

The main files found on Exascale storage are Database and Grid Infrastructure files. Beyond that, all objects in Exascale are represented as files of a certain type. Each file type has storage attributes defined in a template. The file storage attributes are:

- mediaType: HC, EF or XT

- redundancy: currently high

- contentType: DATA or RECO

This is a major difference from ASM, where different redundancy needs required creating different disk groups. With Exascale, files with different redundancy requirements can be stored in the same storage structure (vaults). This also makes it possible to optimize usage of the storage capacity.
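The key idea, attributes attached to each file through its type's template rather than to the container, can be sketched in Python as follows. The file type names and the attribute values assigned to them are hypothetical examples; only the three attribute names and their possible values come from the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Template:
    media_type: str    # "HC", "EF" or "XT"
    redundancy: str    # currently "high"
    content_type: str  # "DATA" or "RECO"


# Hypothetical templates: file types and their attribute values are illustrative.
TEMPLATES = {
    "datafile": Template("EF", "high", "DATA"),
    "archivelog": Template("HC", "high", "RECO"),
    "backupset": Template("HC", "high", "RECO"),
}


def create_file(vault_files: dict, name: str, file_type: str) -> Template:
    """Attributes come from the file's template, not from the vault it lives in."""
    vault_files[name] = TEMPLATES[file_type]
    return vault_files[name]
```

A single vault (here a plain dictionary) can then hold a DATA file on extreme flash next to a RECO file on high capacity disks, something that required two ASM disk groups.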

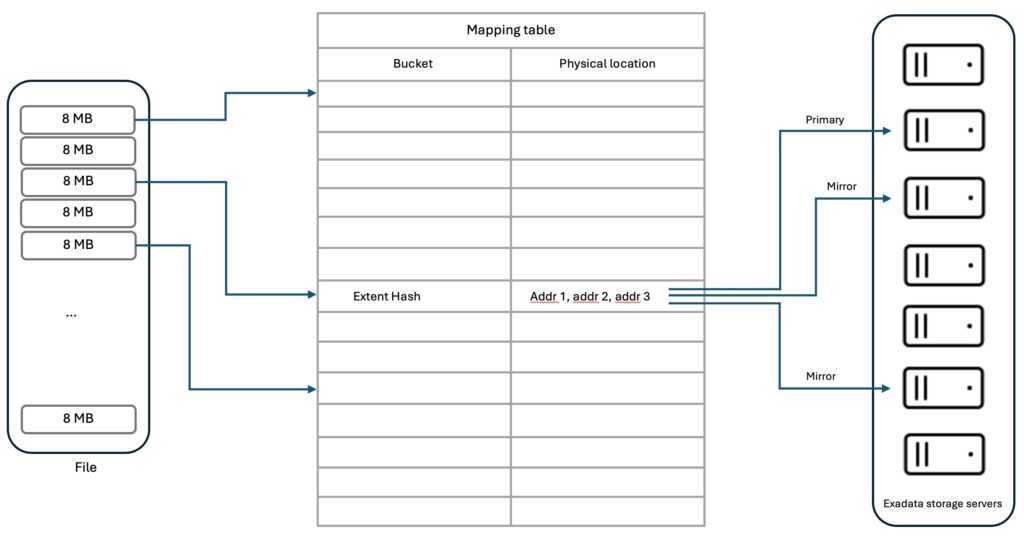

Files are composed of 8MB extents, which are mirrored and striped across all of the vault’s storage resources.

The tight integration of the database kernel with Exascale allows Exascale to automatically understand the type of file the database asks to create and thus apply the appropriate attributes defined in the file template. This prevents Exascale from storing data and recovery file extents (more on extents in the next section) on the same disks, and also guarantees that mirrored extents are located on different storage servers than the primary extent.
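With 8MB extents, the arithmetic is straightforward: a 1 GB (1,024 MB) datafile is split into 1,024 / 8 = 128 extents. Assuming high redundancy means three copies of each extent, as with ASM high redundancy, Exascale stores 128 × 3 = 384 extent copies, i.e. 3 GB of raw space. The Python sketch below shows one hypothetical way to stripe those extents round-robin while keeping every copy of an extent on a different storage server; the placement rule and server names are assumptions for illustration, not Exascale’s actual algorithm, which also takes disks and content type into account.

```python
import math

EXTENT_MB = 8  # Exascale extent size mentioned above


def extent_count(file_mb: int) -> int:
    return math.ceil(file_mb / EXTENT_MB)


def place_extents(file_mb: int, servers: list, copies: int = 3) -> list:
    """Return, for each extent, the servers holding its primary and mirror copies.

    Hypothetical round-robin policy: copy k of extent i goes to server (i + k) mod n,
    so primaries are striped over all servers and no two copies share a server.
    """
    if copies > len(servers):
        raise ValueError("need at least one storage server per copy")
    n = len(servers)
    return [[servers[(i + k) % n] for k in range(copies)]
            for i in range(extent_count(file_mb))]
```

Spreading primaries round-robin is what lets a single large scan use every storage server at once, while the distinct-server rule means losing one server never loses all copies of an extent.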

File extents

Remember that in Exascale, storage management moved from the compute servers to the storage servers. Specifically, this means the building blocks of files, namely extents, are managed by the storage servers.

The new data structure used for extent management is a mapping table that tracks, for each file extent, the location of the primary and mirror copy extents on the storage servers. This mapping table is cached by each database server and instance to retrieve the location of its file extents. Once the database has the extent location, it can make an I/O call directly to the appropriate storage server. If the mapping table is no longer up to date because of database physical structure changes or storage server additions or removals, an I/O call can be rejected, which triggers a mapping table refresh so that the I/O call can be retried.
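This cache, reject, refresh and retry cycle can be sketched in a few lines of Python. All class and method names are hypothetical, and the model is simplified on purpose: only the primary location is tracked, and a storage server rejects any read for an extent it does not currently own, after which the client refreshes its cached map and retries.

```python
class StaleMapping(Exception):
    """A storage server rejected an I/O addressed with an outdated extent map."""


class ExtentMap:
    """Hypothetical authoritative map: (file, extent number) -> storage server."""

    def __init__(self, mapping):
        self.mapping = dict(mapping)

    def move(self, key, new_server):
        # Happens e.g. when storage servers are added or removed.
        self.mapping[key] = new_server


class StorageCluster:
    def __init__(self, extent_map, data):
        self.extent_map = extent_map
        self.data = data  # server name -> {key: bytes}

    def read(self, server, key):
        # A server only serves extents it currently owns; otherwise it rejects.
        if self.extent_map.mapping.get(key) != server:
            raise StaleMapping(f"{server} no longer owns {key}")
        return self.data[server][key]


class DatabaseClient:
    def __init__(self, cluster):
        self.cluster = cluster
        self.refreshes = 0
        self._refresh()

    def _refresh(self):
        # Pull a fresh copy of the map into the local cache.
        self.cache = dict(self.cluster.extent_map.mapping)
        self.refreshes += 1

    def read(self, key, max_attempts=3):
        for _ in range(max_attempts):
            server = self.cache[key]  # local lookup, then direct I/O to that server
            try:
                return self.cluster.read(server, key)
            except StaleMapping:
                self._refresh()  # refresh the cached map and retry
        raise RuntimeError(f"could not resolve {key} after {max_attempts} attempts")
```

In the common case the client never talks to a metadata service on the I/O path; the refresh cost is only paid after the layout actually changes.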

Exascale Block Store with RDMA-enabled block volumes

Block volumes can be allocated from storage pools to store regular files on file systems like ACFS or XFS. They also enable the centralization of VC VM images, thus removing the dependency of VM images on internal compute node storage and streamlining migrations between physical database servers. Clone, snapshot, and backup and restore features for block volumes can leverage all resources of the available storage servers.

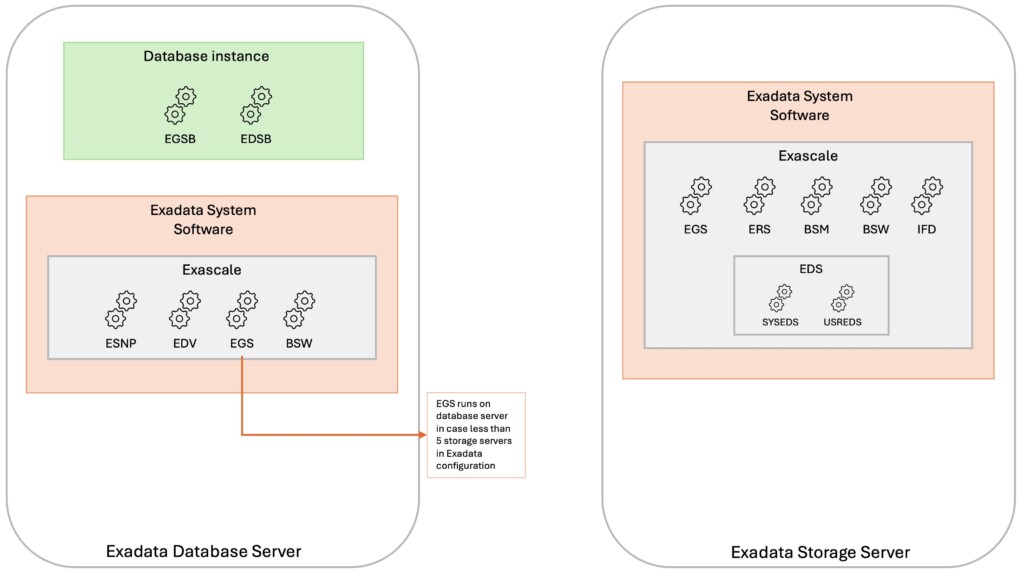

Exascale storage services

From a software perspective, Exascale is composed of a number of software services available in the Exadata System Software (since release 24.1). These software services run mainly on the Exadata Storage Servers but also on the Exadata Database Servers.

Exascale storage server services

| Service | Description |
| --- | --- |
| EGS – Cluster Services | EGS (Exascale Global Services) mainly manages the storage allocated to storage pools. In addition, EGS controls storage cluster membership, security and identity services, and monitors the other Exascale services. |
| ERS – Control Services | ERS (Exascale RESTful Services) provides the management endpoint for all Exascale management operations. The new Exascale command-line interface (ESCLI), used for monitoring and management functions, relies on ERS for command execution. |
| EDS – Exascale Vault Manager Services | EDS (Exascale Data Services) is responsible for file and vault metadata management and is made up of two groups of services: the System Vault Manager (SYSEDS), which manages Exascale vault metadata such as the security perimeter (through ACLs) and vault attributes, and the User Vault Manager (USREDS), which manages Exascale file metadata such as ACLs and attributes, as well as clone and snapshot metadata. |
| BSM – Block Store Manager | BSM manages Exascale block storage metadata and controls all block store management operations such as volume creation, attachment, detachment, modification or snapshot. |
| BSW – Block Store Worker | These services perform the actual requests from clients and translate them into storage server I/O. |
| IFD – Instant Failure Detection | The IFD service watches for failures that could arise in the Exascale cluster and triggers recovery actions when needed. |
| Exadata Cell Services | Exadata cell services are required for Exascale to function; they work in conjunction with the Exascale services to provide the Exascale features. |

Exascale database server services

| Service | Description |
| --- | --- |
| EGS – Cluster Services | EGS instances run on database servers when the Exadata configuration has fewer than five storage servers. |
| BSW – Block Store Worker | Services requests from block store clients and performs the resulting storage server I/O. |
| ESNP – Exascale Node Proxy | ESNP provides Exascale cluster state to GI and database processes. |
| EDV – Exascale Direct Volume | The EDV service exposes Exascale block volumes to Exadata compute nodes and runs I/O requests on EDV devices. |
| EGSB/EDSB | Per-database-instance services that maintain metadata about the Exascale cluster and vaults. |

The diagram below depicts how the various Exascale services are distributed across the storage and compute nodes:

Wrap-up

By rearchitecting Exadata storage management with a focus on space efficiency, flexibility and elasticity, Exascale overcomes the main limitations of ASM:

- diskgroup resizing complexity and time-consuming rebalance operations

- space distribution among DATA and RECO diskgroups requiring rigorous estimation of storage needs for each

- sparse diskgroup requirement for cloning with a read-only test master or use of ACFS without smart scan

- redundancy configuration at the diskgroup level

The following links provide further details on the matter:

Oracle Exadata Exascale advantages blog

Oracle Exadata Exascale storage fundamentals blog

Oracle And Me blog – Why ASM Needed an Heir Worthy of the 21st Century

Oracle And Me blog – New Exascale architecture

More on the exciting Exascale technology in coming posts …

The post Exascale storage architecture appeared first on dbi Blog.