Feed aggregator

Detecting Password Guessing in Oracle using LCOUNT

Posted by Pete On 15/01/26 At 03:08 PM

How to activate the auto-index feature in Oracle 19c Enterprise Edition?

PAGE REFRESH CAUSED BY DATABASE TRIGGER.

Collection vs Global Temporary Table in Oracle 19c

How to ensure SESSION_USER = application user when running DBMS_SCHEDULER jobs that call external C++ functions?

Happy New Year 2026, Oracle Security Training, MySQL, Anniversary

Posted by Pete On 13/01/26 At 12:02 PM

Migrate your database to 26ai on ODA

Oracle 26ai is not yet available on Bare Metal ODA, but it will probably be in a few weeks. For now, 26ai is available as a DB System on Oracle Database Appliance, meaning that it’s already possible to test this new version. Let’s try to migrate a 19c Bare Metal PDB to a 26ai DB System using 3 different methods.

Prerequisites26ai is only available with the minimum release 19.29, meaning on ODA X8-2 and newer models. You will need to patch your ODA to 19.29 first if you want to test 26ai. 26ai is only compatible with multitenant architecture, so another prerequisite is to migrate your non-container databases to PDBs. If you’re still using non-CDB architecture, and among these 3 methods, only the first one will work for you.

The recommended patching method as told by Oracle is using AutoUpgrade: please refer to this blog post if you want more details.

On my side, I will explore these 3 other possibilities:

- Datapump Export/Import

- PDB copy through a DB link

- PDB unplug/plug

These files are needed for creating a 26ai DB System:

36524660 => System image for 26ai DB Systems

36524627 => the GI clone needed for deploying newer 26ai GI version

36524642 => the DB clone for deploying new version 26ai

Be sure to choose the very latest 19.29 patch when downloading these files, download link from MOS will first propose older versions.

My 19c source databaseMy source database is a 19c container database with one PDB running on an ODA X8-2M. I want to migrate this PDB to 26ai:

su - oracle

. oraenv <<< CPROD19

ORACLE_SID = [CPROD19] ? The Oracle base remains unchanged with value /u01/app/odaorabase/oracle

sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Thu Jan 8 09:53:43 2026

Version 19.29.0.0.0

Copyright (c) 1982, 2025, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.29.0.0.0

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PRODDB01 READ WRITE NO

exit

It’s not yet possible to deploy a 26ai container database on Bare Metal, so let’s create a DB System for testing this migration. First unzip and register the VM template, GI 26ai and DB 26ai:

cd /opt/dbi

unzip -o p36524660_1929000_Linux-x86-64.zip

unzip -o p36524627_1929000_Linux-x86-64.zip

unzip -o p36524642_1929000_Linux-x86-64.zip

odacli update-repository -f /opt/dbi/odacli-dcs-23.26.0.0.0-251116-ODAVM-19.29.0.0.zip

odacli update-repository -f /opt/dbi/odacli-dcs-23.26.0.0.0-251116-GI-23.26.0.0.zip

odacli update-repository -f /opt/dbi/odacli-dcs-23.26.0.0.0-251116-DB-23.26.0.0.zip

sleep 30 ; odacli list-jobs | tail -n 4

d4700315-db8c-4522-af55-0fddd262bfe4 Repository Update 2025-12-08 15:20:14 CET Success

ba7d452f-e03d-46d0-a607-fd7c758cd1b1 Repository Update 2025-12-08 15:20:59 CET Success

bfc102d1-985b-4792-8054-03709aa8d949 Repository Update 2025-12-08 15:21:20 CET Success

odacli describe-dbsystem-image | grep 23.26

DBVM 23.26.0.0.0 23.26.0.0.0

GI 23.26.0.0.0 23.26.0.0.0

DB 23.26.0.0.0 23.26.0.0.0A CPU pool is needed for DB Systems, if you don’t have one. A 2-core CPU pool is enough:

odacli create-cpupool -c 2 -n cpupool2c -dbs

Now let’s create a json file, then create a new DB System based on this file:

cat /opt/dbi/create_dbs-04-tst-cdb.json

{

"system": {

"name": "dbs-04-tst",

"shape": "dbs2",

"systemPassword": "***********",

"timeZone": "Europe/Zurich",

"diskGroup": "DATA",

"cpuPoolName": "cpupool2c",

"enableRoleSeparation": true,

"customRoleSeparation": {

"groups": [

{

"name": "oinstall",

"id": 1001,

"role": "oinstall"

},

{

"name": "dbaoper",

"id": 1002,

"role": "dbaoper"

},

{

"name": "dba",

"id": 1003,

"role": "dba"

},

{

"name": "asmadmin",

"id": 1004,

"role": "asmadmin"

},

{

"name": "asmoper",

"id": 1005,

"role": "asmoper"

},

{

"name": "asmdba",

"id": 1006,

"role": "asmdba"

}

],

"users": [

{

"name": "grid",

"id": 1001,

"role": "gridUser"

},

{

"name": "oracle",

"id": 1000,

"role": "oracleUser"

}

]

}

},

"database": {

"name": "CTEST26",

"uniqueName": "CTEST26_S1",

"domainName": "dbi-lab.ch",

"adminPassword": "**********",

"version": "23.26.0.0.0",

"edition": "EE",

"type": "SI",

"dbClass": "OLTP",

"shape": "odb2",

"role": "PRIMARY",

"enableDbConsole": false,

"enableFlashStorage": false,

"redundancy": null,

"characterSet": {

"characterSet": "AL32UTF8",

"nlsCharacterset": "AL16UTF16",

"dbTerritory": "AMERICA",

"dbLanguage": "AMERICAN"

},

"rmanBackupPassword": null,

"enableTDE": false,

"isCdb": true,

"pdbName": "pdb1",

"pdbAdminUser": "pdbadmin",

"tdePassword": null

},

"network": {

"domainName": "dbi-lab.ch",

"ntpServers": [

"85.195.224.28"

],

"dnsServers": [

"10.16.0.250"

],

"nodes": [

{

"name": "dbs-04-tst",

"ipAddress": "10.16.0.247",

"netmask": "255.255.255.0",

"gateway": "10.16.0.1",

"number": 0

}

],

"publicVNetwork": "pubnet"

},

"grid": {

"language": "en"

}

}

odacli create-dbsystem -p /opt/dbi/create_dbs-04-tst-cdb.json

Enter password for system "dbs-04-tst":

Retype password for system "dbs-04-tst":

Enter administrator password for DB "CTEST26":

Retype administrator password for DB "CTEST26":

...35 minutes later, my new DB System is ready to use:

odacli describe-job -i c79ecbbf-dd0c-4b75-ba10-249c78b25f33

Job details

----------------------------------------------------------------

ID: c79ecbbf-dd0c-4b75-ba10-249c78b25f33

Description: DB System dbs-04-tst creation

Status: Success

Created: January 08, 2026 10:23:39 CET

Message:

Task Name Start Time End Time Status

---------------------------------------- ---------------------------------------- ---------------------------------------- ----------------

Create DB System metadata January 08, 2026 10:23:39 CET January 08, 2026 10:23:41 CET Success

Persist new DB System January 08, 2026 10:23:41 CET January 08, 2026 10:23:41 CET Success

Remove unused network interfaces January 08, 2026 10:23:41 CET January 08, 2026 10:23:41 CET Success

Validate DB System creation January 08, 2026 10:23:41 CET January 08, 2026 10:23:46 CET Success

Create the infra quota group January 08, 2026 10:23:46 CET January 08, 2026 10:23:50 CET Success

Setup DB System environment January 08, 2026 10:23:46 CET January 08, 2026 10:23:46 CET Success

Create DB System ASM volume January 08, 2026 10:23:50 CET January 08, 2026 10:24:01 CET Success

Create DB System ACFS filesystem January 08, 2026 10:24:01 CET January 08, 2026 10:24:13 CET Success

Create DB System ACFS mount point January 08, 2026 10:24:01 CET January 08, 2026 10:24:01 CET Success

Create DB System VM ACFS snapshots January 08, 2026 10:24:13 CET January 08, 2026 10:24:28 CET Success

Setup ASM client cluster config January 08, 2026 10:24:28 CET January 08, 2026 10:24:30 CET Success

Create temporary SSH key pair January 08, 2026 10:24:30 CET January 08, 2026 10:24:31 CET Success

Create BM certificates in ACFS location January 08, 2026 10:24:31 CET January 08, 2026 10:24:32 CET Success

Create DB System cloud-init config January 08, 2026 10:24:32 CET January 08, 2026 10:24:32 CET Success

Provision DB System VM(s) January 08, 2026 10:24:32 CET January 08, 2026 10:24:35 CET Success

Create u01 vDisk for DB System January 08, 2026 10:24:35 CET January 08, 2026 10:24:36 CET Success

Add DB System to Clusterware January 08, 2026 10:24:36 CET January 08, 2026 10:24:37 CET Success

Attach disks to DB System January 08, 2026 10:24:36 CET January 08, 2026 10:24:36 CET Success

Attach u01 vDisk to DB System January 08, 2026 10:24:36 CET January 08, 2026 10:24:36 CET Success

Add extra PCI slots January 08, 2026 10:24:37 CET January 08, 2026 10:24:37 CET Success

Create Network Filters January 08, 2026 10:24:37 CET January 08, 2026 10:24:37 CET Success

Export clones repository January 08, 2026 10:24:37 CET January 08, 2026 10:24:37 CET Success

Start DB System January 08, 2026 10:24:37 CET January 08, 2026 10:24:40 CET Success

Wait DB System VM first boot January 08, 2026 10:24:40 CET January 08, 2026 10:26:49 CET Success

Setup Mutual TLS (mTLS) January 08, 2026 10:26:49 CET January 08, 2026 10:26:51 CET Success

Cleanup temporary SSH key pair January 08, 2026 10:26:51 CET January 08, 2026 10:26:52 CET Success

Copy ASM client cluster config January 08, 2026 10:26:51 CET January 08, 2026 10:26:51 CET Success

Delete Mutual TLS (mTLS) Artifacts January 08, 2026 10:26:51 CET January 08, 2026 10:26:51 CET Success

Wait DB System VM DCS Agent readiness January 08, 2026 10:26:52 CET January 08, 2026 10:27:12 CET Success

Add Network Filters January 08, 2026 10:27:12 CET January 08, 2026 10:27:14 CET Success

Install DB System January 08, 2026 10:27:14 CET January 08, 2026 10:53:57 CET Success

Wait DB System VM DCS Agent readiness January 08, 2026 10:53:57 CET January 08, 2026 10:55:58 CET Success

Set DB System as configured January 08, 2026 10:55:58 CET January 08, 2026 10:55:58 CET Success

odacli list-dbsystems

Name Shape GI version DB info Status Created Updated

-------------------- ---------- ------------------ ------------------------------ ---------------------- ------------------------ ------------------------

dbs-04-tst dbs2 23.26.0.0.0 23.26(CONFIGURED=1) CONFIGURED 2026-01-08 10:23:39 CET 2026-01-08 10:55:58 CET

Let’s consider a classic migration using datapump. This tool has been the official one for migration for nearly 2 decades now.

Let’s first create a new PDB inside the container database of the new DB System:

ssh root@dbs-04-tst

su - oracle

. oraenv <<< CTEST26

sqlplus / as sysdba

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

alter pluggable database PDB1 close immediate;

Pluggable database altered.

drop pluggable database PDB1 including datafiles;

Pluggable database dropped.

create pluggable database MIG26DTPUMP admin user pdbadmin identified by "*********";

Pluggable database created.

alter pluggable database MIG26DTPUMP open;

Pluggable database altered.

alter pluggable database MIG26DTPUMP save state;

Pluggable database altered.

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

4 MIG26DTPUMP READ WRITE NO

alter session set container=MIG26DTPUMP;

create tablespace users datafile size 100M autoextend on maxsize 10G;

Tablespace created.

exit

On source database, non-CDB or PDB it doesn’t matter, export data using datapump:

su - oracle

. oraenv <<< CPROD19

export ORACLE_PDB_SID=PRODDB01

sqlplus / as sysdba

create or replace directory mig as '/backup/dumps/';

col current_scn for 999999999999

select current_scn from v$database;

CURRENT_SCN

---------------

6292616

exit

expdp system directory=MIG full=y dumpfile=PRODDB01_20260112.dmp logfile=exp_PRODDB01_20260112.log flashback_scn=6292616

Export: Release 19.0.0.0.0 - Production on Mon Jan 12 10:36:52 2026

Version 19.29.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

Password:

Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Starting "SYSTEM"."SYS_EXPORT_FULL_01": system/******** directory=MIG full=y dumpfile=PRODDB01_20260112.dmp logfile=exp_PRODDB01_20260112.log flashback_scn=6292616

Processing object type DATABASE_EXPORT/EARLY_OPTIONS/VIEWS_AS_TABLES/TABLE_DATA

Processing object type DATABASE_EXPORT/NORMAL_OPTIONS/TABLE_DATA

Processing object type DATABASE_EXPORT/NORMAL_OPTIONS/VIEWS_AS_TABLES/TABLE_DATA

Processing object type DATABASE_EXPORT/SCHEMA/TABLE/TABLE_DATA

Processing object type DATABASE_EXPORT/SCHEMA/TABLE/INDEX/STATISTICS/INDEX_STATISTICS

Processing object type DATABASE_EXPORT/SCHEMA/TABLE/STATISTICS/TABLE_STATISTICS

Processing object type DATABASE_EXPORT/STATISTICS/MARKER

Processing object type DATABASE_EXPORT/PRE_SYSTEM_IMPCALLOUT/MARKER

Processing object type DATABASE_EXPORT/PRE_INSTANCE_IMPCALLOUT/MARKER

Processing object type DATABASE_EXPORT/TABLESPACE

...

. . exported "WMSYS"."WM$METADATA_MAP" 11.16 KB 0 rows

. . exported "MDSYS"."RDF_PARAM$" 6.515 KB 3 rows

. . exported "SYS"."AUDTAB$TBS$FOR_EXPORT" 5.960 KB 2 rows

. . exported "SYS"."DBA_SENSITIVE_DATA" 8.070 KB 0 rows

. . exported "SYS"."DBA_TSDP_POLICY_PROTECTION" 7.656 KB 0 rows

. . exported "SYS"."NACL$_ACE_EXP" 9.812 KB 0 rows

. . exported "SYS"."NACL$_HOST_EXP" 6.921 KB 1 rows

. . exported "SYS"."NACL$_WALLET_EXP" 5.929 KB 0 rows

. . exported "DBI"."CONSULTANTS" 5.937 KB 1 rows

Master table "SYSTEM"."SYS_EXPORT_FULL_01" successfully loaded/unloaded

******************************************************************************

Dump file set for SYSTEM.SYS_EXPORT_FULL_01 is:

/backup/dumps/PRODDB01_20260112.dmp

Job "SYSTEM"."SYS_EXPORT_FULL_01" successfully completed at Mon Jan 12 10:38:52 2026 elapsed 0 00:01:54

ls -lrth /backup/dumps/*20260112*

-rw-r-----. 1 oracle asmadmin 34M Jan 12 10:38 /backup/dumps/PRODDB01_20260112.dmp

-rw-r--r--. 1 oracle asmadmin 9.8K Jan 12 10:38 /backup/dumps/exp_PRODDB01_20260112.logOn target database, let’s do the import:

ssh root@dbs-04-tst

su - oracle

. oraenv <<< CTEST26

export ORACLE_PDB_SID=MIG26DTPUMP

sqlplus / as sysdba

create or replace directory mig as '/backup/dumps/';

exit

vi /backup/dumps/imp_PRODDB01.par

directory=MIG

schemas=dbi

dumpfile=PRODDB01_20260112.dmp

logfile=imp_PRODDB01_to_MIG26DTPUMP_20260112.log

exclude=statistics

impdp system parfile=/backup/dumps/imp_PRODDB01.par

Import: Release 23.26.0.0.0 - for Oracle Cloud and Engineered Systems on Mon Jan 12 10:51:39 2026

Version 23.26.0.0.0

Copyright (c) 1982, 2025, Oracle and/or its affiliates. All rights reserved.

Password:

Connected to: Oracle AI Database 26ai Enterprise Edition Release 23.26.0.0.0 - for Oracle Cloud and Engineered Systems

Master table "SYSTEM"."SYS_IMPORT_SCHEMA_01" successfully loaded/unloaded

import done in AL32UTF8 character set and AL16UTF16 NCHAR character set

export done in WE8MSWIN1252 character set and AL16UTF16 NCHAR character set

Warning: possible data loss in character set conversions

Starting "SYSTEM"."SYS_IMPORT_SCHEMA_01": system/******** parfile=/backup/dumps/imp_PRODDB01.par

Processing object type DATABASE_EXPORT/SCHEMA/USER

Processing object type DATABASE_EXPORT/SCHEMA/GRANT/SYSTEM_GRANT

Processing object type DATABASE_EXPORT/SCHEMA/ROLE_GRANT

Processing object type DATABASE_EXPORT/SCHEMA/DEFAULT_ROLE

Processing object type DATABASE_EXPORT/SCHEMA/PROCACT_SCHEMA/LOGREP

Processing object type DATABASE_EXPORT/SCHEMA/TABLE/TABLE

Processing object type DATABASE_EXPORT/SCHEMA/TABLE/TABLE_DATA

. . imported "DBI"."CONSULTANTS" 5.9 KB 1 rows

Job "SYSTEM"."SYS_IMPORT_SCHEMA_01" successfully completed at Mon Jan 12 10:51:54 2026 elapsed 0 00:00:08Let’s now compute statistics for my schema:

sqlplus / as sysdba

exec dbms_stats.gather_schema_stats(ownname => 'DBI');

PL/SQL procedure successfully completed.

exit;Let’s do a quick check on data:

sqlplus / as sysdba

select start_date from dbi.consultants where name like 'DUB%';

START_DAT

---------

04-SEP-17

exit

That’s fine.

Pros & cons

- + Same procedure as previous migrations

- + Reorganize data and indexes

- + Keep the source database untouched

- – Rather slow when database is big

If the source database is a PDB, it can be copied through a DB link, this is a very convenient way of migrating a database.

First, it’s weird but the PDB administrator of the source DB must have the CREATE PLUGGABLE DATABASE privilege:

su - oracle

. oraenv <<< CPROD19

sqlplus / as sysdba

alter session set container=PRODDB01;

grant create pluggable database to pdbadmin;

exitOn the DB System, let’s create a new TNS entry for the 19c PDB:

ssh dbs-04-tst

su - oracle

. oraenv <<< CTEST26

vi $ORACLE_HOME/network/admin/tnsnames.ora

...

PRODDB01 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = dbioda01.dbi-lab.ch)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = proddb01.dbi-lab.ch)

)

)On the target CDB, create a new database link to the source database:

CREATE DATABASE LINK PRODDB01 CONNECT TO PDBADMIN IDENTIFIED BY "*********" USING 'PRODDB01';

select count(*) from dual@PRODDB01;

COUNT(*)

----------

1Now let’s copy the source PDB under a new name on the 26ai container:

create pluggable database MIG26DBLINK from PRODDB01@PRODDB01;

Pluggable database created.

alter pluggable database MIG26DBLINK open;

Opening this PDB will take some minutes because it automatically triggers the upgrade from 19c to 26ai:

tail -n 15 --f /u01/app/oracle/diag/rdbms/ctest26_s1/CTEST26/trace/alert_CTEST26.log

...

MIG26DBLINK(3):Starting Upgrade on PDB Open

2026-01-12T10:57:49.606651+01:00

MIG26DBLINK(3):alter pluggable database application APP$CDB$CATALOG begin install '19.0.0.0.0' on error capture

MIG26DBLINK(3):Completed: alter pluggable database application APP$CDB$CATALOG begin install '19.0.0.0.0' on error capture

MIG26DBLINK(3):alter pluggable database application APP$CDB$CATALOG end install '19.0.0.0.0'

MIG26DBLINK(3):Completed: alter pluggable database application APP$CDB$CATALOG end install '19.0.0.0.0'

MIG26DBLINK(3):alter pluggable database application APP$CDB$CATALOG begin upgrade

'19.0.0.0.0' to '23.0.0.0.0.partial' on error capture

MIG26DBLINK(3):Completed: alter pluggable database application APP$CDB$CATALOG begin upgrade

'19.0.0.0.0' to '23.0.0.0.0.partial' on error capture

...

MIG26DBLINK(3):SERVER COMPONENT id=CATJAVA: status=UPGRADED, version=23.26.0.0.0, timestamp=2026-01-12 11:09:54 Container=MIG26DBLINK Id=3

2026-01-12T11:09:58.200636+01:00

MIG26DBLINK(3):Resize operation completed for file# 19, fname +DATA/CTEST26_S1/482EE16D86417106E063F700240A6555/DATAFILE/undotbs1.294.1222340161, old size 450560K, new size 455680K

2026-01-12T11:09:59.496448+01:00

MIG26DBLINK(3):Resize operation completed for file# 19, fname +DATA/CTEST26_S1/482EE16D86417106E063F700240A6555/DATAFILE/undotbs1.294.1222340161, old size 455680K, new size 460800K

2026-01-12T11:10:26.701609+01:00

MIG26DBLINK(3):oracle :

MIG26DBLINK(3):SERVER COMPONENT id=XDB: status=UPGRADED, version=23.26.0.0.0, timestamp=2026-01-12 11:10:26 Container=MIG26DBLINK Id=3

2026-01-12T11:10:36.378304+01:00

MIG26DBLINK(3):Resize operation completed for file# 19, fname +DATA/CTEST26_S1/482EE16D86417106E063F700240A6555/DATAFILE/undotbs1.294.1222340161, old size 460800K, new size 465920K

2026-01-12T11:10:45.077998+01:00

MIG26DBLINK(3):Resize operation completed for file# 19, fname +DATA/CTEST26_S1/482EE16D86417106E063F700240A6555/DATAFILE/undotbs1.294.1222340161, old size 465920K, new size 471040K

...

MIG26DBLINK(3):***************************************************************

MIG26DBLINK(3):WARNING: Pluggable Database MIG26DBLINK with pdb id - 3 is

MIG26DBLINK(3): altered with errors or warnings. Please look into

MIG26DBLINK(3): PDB_PLUG_IN_VIOLATIONS view for more details.

MIG26DBLINK(3):***************************************************************

MIG26DBLINK(3):--ATTENTION--

MIG26DBLINK(3):Errors reported while opening PDB (ContainerId: 3) and have been recorded in pdb_alert$ table.

MIG26DBLINK(3) Error Violation: SQL Patch, Cause: '23.26.0.0.0 Release_Update 2509270520' is installed in the CDB but no release updates are installed in the PDB, Action: Call datapatch to install in the PDB or the CDB

2026-01-12T11:13:31.632629+01:00

MIG26DBLINK(3):Opening pdb with no Resource Manager plan active

Violations: Type: 1, Count: 1

Violations: Type: 2, Count: 2

Completed: Pluggable database MIG26DBLINK opened read write

Completed: alter pluggable database MIG26DBLINK openAfter a couple of minutes, the PDB is opened with errors:

Warning: PDB altered with errors.

exitLet’s apply datapatch on this very PDB:

$ORACLE_HOME/OPatch/datapatch -pdbs MIG26DBLINK

SQL Patching tool version 23.26.0.0.0 Lim on Mon Jan 12 11:14:55 2026

Copyright (c) 2012, 2025, Oracle. All rights reserved.

Log file for this invocation: /u01/app/oracle/product/23.0.0.0/dbhome_1/cfgtoollogs/sqlpatch/sqlpatch_sid_CTEST26_ts_2026_01_12_11_14_55_pid_5074/sqlpatch_invocation.log

Connecting to database...OK

Gathering database info...done

Note: Datapatch will only apply or rollback SQL fixes for PDBs

that are in an open state, no patches will be applied to closed PDBs.

Please refer to Note: Datapatch: Database 12c Post Patch SQL Automation

(Doc ID 1585822.1)

Bootstrapping registry and package to current versions...done

Determining current state...done

Current state of interim SQL patches:

No interim patches found

Current state of release update SQL patches:

Binary registry:

23.26.0.0.0 Release_Update 250927052030: Installed

PDB MIG26DBLINK:

Applied 23.26.0.0.0 Release_Update 250927052030 successfully

Adding patches to installation queue and performing prereq checks...done

Installation queue:

For the following PDBs: MIG26DBLINK

No interim patches need to be rolled back

No release update patches need to be installed

No interim patches need to be applied

Bypass install queue:

For the following PDBs: MIG26DBLINK

No interim rollbacks will bypass install

Patch 38404116 (Database Release Update : 23.26.0.0.0 (38404116) Gold Image): will bypass install

Apply from 23.26.0.0.0 Release_Update 250927052030 to 23.26.0.0.0 Release_Update 250927052030

No interim applys will bypass install

Installation queue after removing bypass entries...

Installation queue:

For the following PDBs: MIG26DBLINK

No interim patches need to be rolled back

No release update patches need to be installed

No interim patches need to be applied

Processing bypass install queue:

Patch 38404116 apply (pdb MIG26DBLINK): SUCCESS (bypass_install)

SQL Patching tool complete on Mon Jan 12 11:15:03 2026Everything is fine, now let’s close and open this PDB in normal mode:

sqlplus / as sysdba

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 MIG26DBLINK READ WRITE YES

4 MIG26DTPUMP READ WRITE NO

alter pluggable database MIG26DBLINK close immediate;

alter pluggable database MIG26DBLINK open;

alter pluggable database MIG26DBLINK save state;

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 MIG26DBLINK READ WRITE NO

4 MIG26DTPUMP READ WRITE NOLet’s do a quick data check:

sqlplus / as sysdba

alter session set container=MIG26DBLINK;

select start_date from dbi.consultants where name like 'DUB%';

START_DAT

---------

04-SEP-17

exitDatabase is ready to use.

Pros & cons

- + Maybe the easiest way of migrating a PDB

- + Keep the source database untouched

- + Rather fast

- + No downtime of the source database

- – Need to apply datapatch

If the source database is a PDB, it can be unplugged and plugged back to another container. This is how multitenant architecture was presented when it first came to 12cR1.

Let’s unplug the source PDB:

su - oracle

. oraenv <<< CPROD19

sqlplus / as sysdba

alter pluggable database PRODDB01 close immediate;

Pluggable database altered.

alter pluggable database PRODDB01 unplug into '/backup/dumps/PRODDB01.xml';

Pluggable database altered.

exitNow let’s plug this PDB to the new 26ai container:

ssh root@dbs-04-tst

su - oracle

. oraenv <<< CTEST26

sqlplus / as sysdba

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 MIG26DBLINK READ WRITE NO

4 MIG26DTPUMP READ WRITE NO

create pluggable database MIG26REPLUG using '/backup/dumps/PRODDB01.xml' COPY;

create pluggable database MIG26REPLUG using '/backup/dumps/PRODDB01.xml' COPY

*

ERROR at line 1:

ORA-19505: failed to identify file

"+DATA/CPROD19_S1/450AA2873245B6F5E063F100240AC35A/DATAFILE/system.302.122233608

1"

ORA-17503: ksfdopn:2 Failed to open file

+DATA/CPROD19_S1/450AA2873245B6F5E063F100240AC35A/DATAFILE/system.302.1222336081

ORA-15260: permission denied on ASM disk group

Help: https://docs.oracle.com/error-help/db/ora-19505/

Files from the source database are not readable by this DB System. Let’s change the permission on these files from the Bare Metal ASM instance:

exit

exit

exit

su - grid

asmcmd ls -lt +DATA/CPROD19_S1/450AA2873245B6F5E063F100240AC35A/DATAFILE/* --permission

Type Redund Striped Time Sys User Group Permission Name

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw------- USERS.297.1222336081

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw------- UNDOTBS1.306.1222336081

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw------- SYSTEM.302.1222336081

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw------- SYSAUX.305.1222336081

asmcmd ls -lt +DATA/CTEST26_S1/DATAFILE/* --permission

Type Redund Striped Time Sys User Group Permission Name

DATAFILE MIRROR COARSE JAN 12 10:00:00 Y oracle@b94b488f82b1cf5cbf58f54162aafac0 rw------- USERS.299.1222339507

DATAFILE MIRROR COARSE JAN 12 10:00:00 Y oracle@b94b488f82b1cf5cbf58f54162aafac0 rw------- UNDOTBS1.693.1222338993

DATAFILE MIRROR COARSE JAN 12 10:00:00 Y oracle@b94b488f82b1cf5cbf58f54162aafac0 rw------- SYSTEM.906.1222338951

DATAFILE MIRROR COARSE JAN 12 10:00:00 Y oracle@b94b488f82b1cf5cbf58f54162aafac0 rw------- SYSAUX.832.1222338987

asmcmd chmod a+r +DATA/CPROD19_S1/450AA2873245B6F5E063F100240AC35A/DATAFILE/*

asmcmd ls -lt +DATA/CPROD19_S1/450AA2873245B6F5E063F100240AC35A/DATAFILE/* --permission

Type Redund Striped Time Sys User Group Permission Name

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw-r--r-- USERS.297.1222342153

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw-r--r-- UNDOTBS1.301.1222341995

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw-r--r-- SYSTEM.305.1222341995

DATAFILE MIRROR COARSE JAN 12 11:00:00 Y oracle@e7d9d36f6b157fdabf89026bcf48d757 rw-r--r-- SYSAUX.302.1222341995

exit

ssh root@dbs-04-tst

su - oracle

. oraenv <<< CTEST26

sqlplus / as sysdba

create pluggable database MIG26REPLUG using '/backup/dumps/PRODDB01.xml' COPY;

Pluggable database created.

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 MIG26DBLINK READ WRITE NO

4 MIG26DTPUMP READ WRITE NO

6 MIG26REPLUG MOUNTED

alter pluggable database MIG26REPLUG open;It will also take a couple of minutes for this upgrade to 26ai. You will see corresponding traces in the alert.log file of the 26ai container:

tail -f /u01/app/oracle/diag/rdbms/ctest26_s1/CTEST26/trace/alert_CTEST26.log

...

2026-01-12T11:34:21.075364+01:00

MIG26REPLUG(6):Starting Upgrade on PDB Open

...

MIG26REPLUG(6):***************************************************************

MIG26REPLUG(6):WARNING: Pluggable Database MIG26REPLUG with pdb id - 6 is

MIG26REPLUG(6): altered with errors or warnings. Please look into

MIG26REPLUG(6): PDB_PLUG_IN_VIOLATIONS view for more details.

MIG26REPLUG(6):***************************************************************

MIG26REPLUG(6):--ATTENTION--

MIG26REPLUG(6):Errors reported while opening PDB (ContainerId: 6) and have been recorded in pdb_alert$ table.

MIG26REPLUG(6) Error Violation: SQL Patch, Cause: '23.26.0.0.0 Release_Update 2509270520' is installed in the CDB but no release updates are installed in the PDB, Action: Call datapatch to install in the PDB or the CDB

2026-01-12T11:49:56.836062+01:00

MIG26REPLUG(6):Opening pdb with no Resource Manager plan active

Violations: Type: 1, Count: 1

Violations: Type: 2, Count: 1

Completed: Pluggable database MIG26REPLUG opened read write

Completed: alter pluggable database MIG26REPLUG openLet’s have a look at my PDBs and apply the datapatch on MIG26REPLUG:

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 MIG26DBLINK READ WRITE NO

4 MIG26DTPUMP READ WRITE NO

6 MIG26REPLUG READ WRITE YES

exit

$ORACLE_HOME/OPatch/datapatch -pdbs MIG26REPLUG

SQL Patching tool version 23.26.0.0.0 Lim on Mon Jan 12 11:51:42 2026

Copyright (c) 2012, 2025, Oracle. All rights reserved.

Log file for this invocation: /u01/app/oracle/product/23.0.0.0/dbhome_1/cfgtoollogs/sqlpatch/sqlpatch_sid_CTEST26_ts_2026_01_12_11_51_42_pid_24026/sqlpatch_invocation.log

Connecting to database...OK

Gathering database info...done

Note: Datapatch will only apply or rollback SQL fixes for PDBs

that are in an open state, no patches will be applied to closed PDBs.

Please refer to Note: Datapatch: Database 12c Post Patch SQL Automation

(Doc ID 1585822.1)

Bootstrapping registry and package to current versions...done

Determining current state...done

Current state of interim SQL patches:

No interim patches found

Current state of release update SQL patches:

Binary registry:

23.26.0.0.0 Release_Update 250927052030: Installed

PDB MIG26REPLUG:

Applied 23.26.0.0.0 Release_Update 250927052030 successfully

Adding patches to installation queue and performing prereq checks...done

Installation queue:

For the following PDBs: MIG26REPLUG

No interim patches need to be rolled back

No release update patches need to be installed

No interim patches need to be applied

Bypass install queue:

For the following PDBs: MIG26REPLUG

No interim rollbacks will bypass install

Patch 38404116 (Database Release Update : 23.26.0.0.0 (38404116) Gold Image): will bypass install

Apply from 23.26.0.0.0 Release_Update 250927052030 to 23.26.0.0.0 Release_Update 250927052030

No interim applys will bypass install

Installation queue after removing bypass entries...

Installation queue:

For the following PDBs: MIG26REPLUG

No interim patches need to be rolled back

No release update patches need to be installed

No interim patches need to be applied

Processing bypass install queue:

Patch 38404116 apply (pdb MIG26REPLUG): SUCCESS (bypass_install)

SQL Patching tool complete on Mon Jan 12 11:51:50 2026Now let’s restart the PDB in normal mode:

sqlplus / as sysdba

alter pluggable database MIG26REPLUG close immediate;

alter pluggable database MIG26REPLUG open;

alter pluggable database MIG26REPLUG save state;

show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 MIG26DBLINK READ WRITE NO

4 MIG26DTPUMP READ WRITE NO

6 MIG26REPLUG READ WRITE NOThis PDB is now running on 26ai.

Let’s check my consultants table:

alter session set container=MIG26REPLUG;

select start_date from dbi.consultants where name like 'DUB%';

START_DAT

---------

04-SEP-17

exit

Pros & cons

- + Easy migration

- + Preserve source files with the copy option

- + Rather fast

- – Need a downtime of the source PDB

- – Reuse of source database is only possible by unplugging and plugging back the PDB with the xml file

There are multiple ways of migrating to 26ai. There is quite a lot of new features coming with 26ai. You will use them or not, but trying to migrate to this version is something to start thinking about in 2026.

L’article Migrate your database to 26ai on ODA est apparu en premier sur dbi Blog.

Upgrade Classic Extracts to Integrated Mode Before Migrating to GoldenGate 23ai

Classic Extracts have been deprecated in GoldenGate 19c and shouldn’t be part of your GoldenGate replications for a long time now. Yet, some customers still have them, and with GoldenGate 19c support coming to an end in a few months, it is high time you upgrade to GoldenGate 23ai.

However, GoldenGate 23ai doesn’t support classic extract, and the migration utility provided by Oracle will not take care of them. So, what to do with your classic extracts before migrating to GoldenGate 23ai ?

Upgrade the extracts to integrated modeThe natural solution is to migrate the extracts from the classic mode to the integrated mode. It might not be possible, depending on your situation, but it is the easiest way to get your extracts ready for a GoldenGate 23ai migration.

To do so, we will use the info extract <extract> upgrade command, which tells us whether the extract is ready for an upgrade. Log in with the correct alias, stop the extract and register the extract before running the command.

GGSCI (vmogg) > dblogin useridalias ggadmin

Successfully logged into database.

GGSCI (vmogg as ggadmin@DB1) > stop extract ext

Sending STOP request to EXTRACT EXT ...

Request processed.

GGSCI (vmogg as ggadmin@DB1) > info extract ext

EXTRACT EXT Last Started 2025-12-21 10:13 Status STOPPED

Checkpoint Lag 00:00:00 (updated 00:00:10 ago)

Log Read Checkpoint Oracle Redo Logs

2025-12-21 10:19:13 Seqno 10, RBA 18472448

SCN 0.2170788 (2170788)

GGSCI (vmogg as ggadmin@DB1) > register extract ext database

2025-12-21 10:21:13 INFO OGG-02003 Extract EXT successfully registered with database at SCN 2170800.

GGSCI (vmogg as ggadmin@DB1) > info extract ext upgradeYou might need to run the command multiple times, depending on the output of the last command. Once the extract is ready to be upgraded, you will see the following output.

GGSCI (vmogg as ggadmin@DB1) > info extract ext upgrade

Extract EXT is ready to be upgraded to integrated capture.After this, run the upgrade command and restart the extract. The extract is now in Oracle Integrated Redo Logs mode.

GGSCI (vmogg as ggadmin@DB1) > alter extract ext upgrade integrated tranlog

Extract EXT successfully upgraded to integrated capture.

GGSCI (vmogg as ggadmin@DB1) > info extract ext

EXTRACT EXT Initialized 2025-12-21 10:13 Status STOPPED

Checkpoint Lag 00:00:00 (updated 00:11:43 ago)

Log Read Checkpoint Oracle Integrated Redo Logs

2025-12-21 10:19:13

SCN 0.2170788 (2170788)

GGSCI (vmogg as ggadmin@DB1) > start extract ext

Sending START request to MANAGER ...

EXTRACT EXT startingIf your extract doesn’t start, have a detailed look at the parameters used in the extract with view params ext. You could use parameters for your extract that were accepted for classic extracts but not supported for integrated extracts.

Repeat this procedure for all your classic extracts in all your GoldenGate deployments, and you will be ready to migrate to GoldenGate 23ai !

OGG-08241 when registering the extract

If you get the following error when registering the extract:

OGG-08241 ERROR: This EXTRACT EXT is already registered with the database.Check the dba_capture view of the database you are trying to register the extract into. If you see an extract already registered, it might mean that you previously registered an integrated extract with the same name.

SELECT capture_name, queue_name, queue_owner, source_database, start_scn from dba_capture;If you receive this error when running the upgrade command, you should try restarting the extract and stopping it again.

Extract ext is not ready to be upgraded because recovery SCN ... has not reached SCN ...start extract ext

stop extract ext

info extract ext upgradeTechnically, the info extract ... upgrade command works for ABENDED extracts. But depending on the reason your extract ended up in this state, you might not be able to upgrade.

L’article Upgrade Classic Extracts to Integrated Mode Before Migrating to GoldenGate 23ai est apparu en premier sur dbi Blog.

Containerize Vue 3 application with GitLab CI/CD

In this article, I will explain how I containerized my Vuetify + Vue 3 application with GitLab CI/CD.

My goal was to deploy my application on Kubernetes. To achieve this, I need to:

- Build the Vue 3 application

- Build the Docker image

- Push the image to the GitLab registry

Quick overview: overall, the full project uses a micro-service architecture and runs on Kubernetes. The Vue 3 project only contains the UI, and we containerize it and serve it with an Nginx image. The backend is a REST API built with NestJS, and we containerize it separately.

Add a Dockerfile to the projectFirst, I need a Dockerfile to build the image for my application. In this Dockerfile, I use a double-stage build. As a result, a final image containing only what is strictly necessary.

# Build the Vue.js application

FROM node:current-alpine AS build

COPY . ./app

WORKDIR /app

RUN npm install

RUN npm run build

# Final Nginx container

FROM nginx:alpine

COPY --from=build /app/dist /usr/share/nginx/htmlThe important part in this Dockerfile is the multi-stage build.

The first part, with the Node container, build the application, but production does not require all the tools used during this step.

As a result, the second step copies only the dist folder, the result of the build, and embeds it into an Nginx container to serve the generated files.

In the second step, I add the .gitlab-ci.yml file to the project root directory.

This file configures the pipeline, I use the docker-in-docker service to build the image. First, I login into the registry of my project. Next, I build and push the image.

stages:

- build

build:

# Use the official docker image.

image: docker:latest

stage: build

services:

- docker:dind

before_script:

# Login to the gitlab registry

- docker login -u "$CI_REGISTRY_USER" -p "$CI_REGISTRY_PASSWORD" $CI_REGISTRY

script:

# Build and push the image

- docker build --pull -t "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA" .

- docker push "$CI_REGISTRY_IMAGE:$CI_COMMIT_SHA"

# Run this job where a Dockerfile exists

rules:

- if: $CI_COMMIT_BRANCH

exists:

- DockerfileNote: all the variables ($CI_REGISTRY_IMAGE, $CI_COMMIT_SHA…) used in the .gitlab-ci.yml are predefined variables provided by GitLab CI/CD.

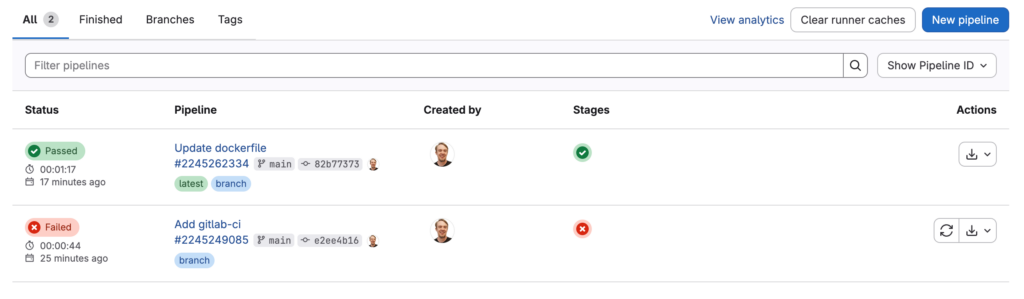

Build the containerOnce I push the .gitlab-ci.yml file, GitLab automatically triggers the pipeline following the rules definition.

After completion, the status of the pipeline is green and the status passed

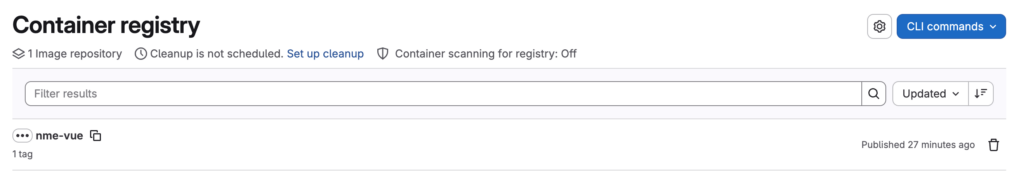

As expected, the image is available in the registry of the project.

Conclusion

Conclusion

In summary, properly containerizing a Vue application is easy, but it requires to separate build and execution. A multi-step build with an Nginx container produces a lightweight, production-ready image.

L’article Containerize Vue 3 application with GitLab CI/CD est apparu en premier sur dbi Blog.

SQL Server Always-On: Centralizing Backup History Across Replicas

Monitoring backups in a SQL Server Always On Availability Group can often feel like a game of hide and seek where the rules change every time you flip a failover switch. On paper, your backup strategy is solid ; you’ve configured your replica priorities and your jobs are running like clockwork. But when you query the backup history to ensure everything is under control, you realize the truth is fragmented.

Because the msdb database is local to each instance, each replica only knows about the backups it performed itself. If your backups happen on the secondary today and the primary tomorrow, no single node holds the complete story. This leads to inconsistent monitoring reports, “false positive” alerts, and a lot of manual jumping between instances just to answer a simple question: “Are we actually protected with consistent backups?”.

Before we dive deeper, a quick disclaimer for the lucky ones: If you are running SQL Server 2022 and have implemented Contained Availability Groups you can stop reading here, you are safe. In a Contained AG, the system databases are replicated alongside your data, meaning the backup history is finally unified and follows the group.

For the others, in this post, we’re going to explore how to stop chasing metadata across your cluster. We’ll look at the limitations of the local msdb, compare different ways to consolidate a unified backup view using Linked Servers, OPENDATASOURCE, and PowerShell, and see how to build a monitoring query that finally tells the whole truth, regardless of where the backup actually ran.

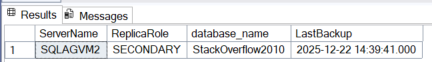

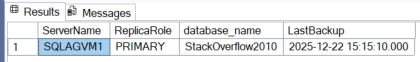

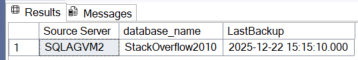

The traditional approach is therefore to query the msdb through the AG listener, pointing to the primary replica. Using a simple TSQL query to find the most recent backup, we get the following results.

SELECT

bs.database_name,

MAX(bs.backup_finish_date) AS LastBackup

FROM msdb.dbo.backupset bs

WHERE bs.database_name = 'StackOverflow2010'

GROUP BY bs.database_name;

As we can see, the two backup dates differ (14:39 being the time the database was created in the AG and 15:15 being the time of the last backup). As a result, if a failover occurs, the main msdb will contain incomplete data because the primary msdb will be the one on SQLAGVM2.

This traditional approach is perfectly acceptable for standalone instances because there is only one source of truth, but in the case of Always-On, we need a more robust solution. Let’s examine the different possibilities.

The good old Linked ServersIf you want to keep everything within the SQL Engine, Linked Servers are your go-to tool. The idea is simple: from your Primary replica, you reach out to the Secondary, query its msdb, and union the results with your local data.

SELECT

@@SERVERNAME AS [PrimaryServer],

bs.database_name,

MAX(bs.backup_finish_date) AS LastBackup

FROM msdb.dbo.backupset bs

WHERE bs.database_name = 'StackOverflow2010'

GROUP BY bs.database_name

UNION ALL

SELECT

'REMOTE_MSDB' AS [ReportingServer],

bs.database_name,

MAX(bs.backup_finish_date) AS LastBackup

FROM [REMOTE_MSDB].msdb.dbo.backupset bs

WHERE bs.database_name = 'StackOverflow2010'

GROUP BY bs.database_name;On paper, the best way to secure a Linked Server is to use the Self option, ensuring that your own permissions are carried over to the next node. It’s the most transparent approach for auditing and security. However, this is where we often hit a silent wall: the Double-Hop. Unless Kerberos delegation is perfectly configured in your domain, NTLM will prevent your identity from traveling to the second replica. You’ll end up with a connection error, not because of a lack of permissions, but because your identity simply couldn’t make the trip. To determine the type of authentication protocol you are using, use the following query.

SELECT auth_scheme

FROM sys.dm_exec_connections

WHERE session_id = @@SPID;To bypass this hurdle, it is common to see Fixed Logins being used as a pragmatic workaround. But by hardcoding credentials to make the bridge work, we create a permanent, pre-authenticated tunnel. From a security standpoint, this can facilitate lateral movement if one instance is ever compromised, a major concern in modern Zero Trust architectures. Furthermore, it obscures your audit logs, as the remote server only sees the service account instead of the actual user. These hidden complexities and security risks are precisely why many DBAs are now moving toward more decoupled, scalable alternatives.

The “On-the-Fly” Connection: OPENDATASOURCETo address the frustrations of Linked Servers, another T-SQL path often explored is the use of ad hoc queries via OPENDATASOURCE.

The concept is tempting: instead of building a permanent bridge (Linked Server), you use a temporary ladder ; OPENDATASOURCE allows you to define a connection string directly inside your T-SQL statement, reaching out to a remote replica only for the duration of that specific query. It feels lightweight and dynamic because it requires no pre-configured server objects.

In theory, you would use it like this to pull backup history from your secondary node:

SELECT

@@SERVERNAME AS [Source Server],

bs.database_name,

MAX(bs.backup_finish_date) AS LastBackup

FROM OPENDATASOURCE('MSOLEDBSQL', 'Data Source=SQLAGVM1,1432;Integrated Security=SSPI').msdb.dbo.backupset bs

WHERE bs.database_name = 'StackOverflow2010'

GROUP BY bs.database_name;However, if the environment is not properly configured, an error will occur immediately.

Msg 15281, Level 16, State 1, Line 1

SQL Server blocked access to STATEMENT 'OpenRowset/OpenDatasource' of component 'Ad Hoc Distributed Queries' because this component is turned off as part of the security configuration for this server. A system administrator can enable the use of 'Ad Hoc Distributed Queries' by using sp_configure. By default, SQL Server locks this door. It is a protective measure to prevent users from using the SQL instance as a jumping point to query any other server on the network. To get past this error, a sysadmin must explicitly enable Ad Hoc Distributed Queries. This requires tweaking the advanced configuration of the instance.

EXEC sp_configure 'show advanced options', 1;

RECONFIGURE;

GO

EXEC sp_configure 'Ad Hoc Distributed Queries', 1;

RECONFIGURE;

GOOnce these options have been reconfigured, access to the remote msdb is finally possible.

While we successfully made OPENDATASOURCE work, the trade-offs are significant. We have ended up with a solution that is brittle, difficult to maintain, and a potential liability:

- Hardcoding & Maintenance: Every replica requires a manually adapted connection string. If a server is renamed, a port is migrated, or a password expires, the entire monitoring logic collapses.

- Security & Shadow IT Risk: Enabling Ad Hoc Distributed Queries opens a permanent hole in your instance. You aren’t just allowing your script to run; you are allowing any sysadmin to connect to any external server, creating a ghost feature that can be easily misused.

In short, we are fighting the SQL engine’s security defaults just to get a simple timestamp. For a truly robust and scalable solution, it is time to look beyond T-SQL.

Here comes the superhero PowershellPowerShell steps in as the ultimate lifesaver, delivering high-level automation and pure execution simplicity. It allows you to centralize and control your scripts from an external management server, piloting your entire fleet remotely without cluttering your SQL instances.

By leveraging the power of dbatools, we shatter the limitations of traditional T-SQL. We gain dynamic flexibility and enhanced security by bypassing risky configurations, all while maintaining total control over how we manipulate the retrieved data. The security gain does not come from PowerShell itself, but from removing risky SQL Server surface area features and centralizing access control on a hardened management host. This works because PowerShell establishes direct connections from your management host to each replica. This bypasses the NTLM double-hop issue entirely, as your identity is never passed between servers, removing any need for complex Kerberos delegation or risky fixed logins.

Here is how to put this into practice. By using the Availability Group Listener as your unique gateway, you can dynamically discover the cluster topology and query all member nodes.

$Listener = 'SQLLISTENER,1432'

$TargetDB = 'StackOverflow2010'

try {

$Nodes = Invoke-DbaQuery -SqlInstance $Listener -Database 'master' -Query "SELECT replica_server_name FROM sys.dm_hadr_availability_replica_cluster_states"

$BackupQuery = "SELECT SERVERPROPERTY('ServerName') as [InstanceName], MAX(backup_finish_date) as [LastBackup] FROM msdb.dbo.backupset WHERE database_name = '$TargetDB' GROUP BY database_name"

write-output $Nodes

$Results = foreach ($Node in $Nodes.replica_server_name) {

write-output $Node

Invoke-DbaQuery -SqlInstance $Node -Database 'msdb' -Query $BackupQuery -ErrorAction SilentlyContinue

}

$Results | Where-Object { $_.LastBackup } | Sort-Object LastBackup -Descending | Select-Object -First 1 | Format-Table -AutoSize

}

catch {

Write-Error "Error : $($_.Exception.Message)"

}This script is just a first step, but it lays the foundation for truly scalable infrastructure management. By shifting the logic to PowerShell instead of overloading our SQL instances, we achieve a robust and extensible method capable of handling large Always-On ecosystems without additional manual effort.

In this example, the listener name is hardcoded for the sake of clarity. However, the true strength of this approach lies in its ability to work behind the scenes with a dynamic inventory. In a Production environment, you would typically query a CMDB or a centralized configuration file to automatically populate the list of instances. This transforms a simple check into a silent, reliable automation that adapts seamlessly as your SQL environment evolves.

While we wrote the T-SQL manually in this example for the sake of clarity, it is worth noting that dbatools offers an even more streamlined approach with the Get-DbaBackupHistory command. This native function eliminates the need for manual queries entirely, returning rich metadata objects that are ready to be filtered and aggregated across your entire fleet.

$Nodes.replica_server_name | Get-DbaBackupHistory -Database 'StackOverflow2010' | Sort-Object End -Descending | Select-Object -First 1To wrap up, remember that technical skills are only as good as the management strategy behind them. Transitioning away from legacy methods toward a PowerShell-driven approach is about gaining control over your environment. Here is what you should keep in mind:

- Beyond T-SQL Boundaries: While Linked Servers or OPENDATASOURCE might work for quick fixes, they quickly become bottlenecks and security risks in hardened infrastructures.

- Object-Oriented Efficiency: By using PowerShell and dbatools, you stop managing raw text and start handling objects. This allows you to effortlessly filter, sort, and aggregate data from multiple Always-On nodes to extract a single, reliable source of truth.

- Smarter Security: Running queries externally via dedicated management shells ensures you maintain a high security posture without needing to enable high-risk surface area features on your SQL instances.

The real game-changer is the Central Management Server. By centralizing your logic on a dedicated administration machine, you stop scattering scripts across every instance. This server becomes your orchestrator: it pulls from your inventory (CMDB, central tables), broadcasts tasks across your entire fleet, and consolidates the results. This is exactly the approach we take at dbi services to manage the extensive SQL Server environments under our care. We leverage PowerShell and the dbatools module as the backbone of our scripting architecture. This allows us to collect data in the most comprehensive and optimized way possible, ensuring we deliver top-tier service to our clients.

This is how you move from artisanal, server-by-server management to an industrial-grade automation capable of piloting hundreds of instances with the same simplicity as a single one.

L’article SQL Server Always-On: Centralizing Backup History Across Replicas est apparu en premier sur dbi Blog.

PostgreSQL: An introduction to row locking

Sometimes it is necessary to troubleshoot locks in PostgreSQL to figure out what is going on in the system. While locks are important and necessary in a database system, too many locks can also be an issue, performance wise and resource consumption wise. There are several levels of locks, e.g. table level and row level locks. Depending on what kind of locks are causing an issue you need to take other actions to resolve it.

We’ll start with some basics and a very simple playground: One simple table containing one row:

postgres=# create table t (a int);

CREATE TABLE

postgres=# insert into t values (1);

INSERT 0 1

One source of information about locking is pg_locks. Do we already see something in this catalog view? Indeed we do:

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks;

locktype | database | relation | pid | mode | granted | waitstart

------------+----------+----------+------+-----------------+---------+-----------

relation | postgres | pg_locks | 9119 | AccessShareLock | t |

virtualxid | | | 9119 | ExclusiveLock | t |

(2 rows)

What we see here is our own session (ignore the second row with “virtualxid” for now):

postgres=# select pg_backend_pid();

pg_backend_pid

----------------

9119

(1 row)

The question is why? We only did a simple select, but even a select which does not modify the database needs locking because while we’re reading nobody else is allowed to modify the underlying objects. This is what “AccessShareLock” is about: Others can still read, but not change.

If you do the same in a second session you might expect to see four rows in total, but:

-- session 2

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks;

locktype | database | relation | pid | mode | granted | waitstart

------------+----------+----------+------+-----------------+---------+-----------

relation | postgres | pg_locks | 9673 | AccessShareLock | t |

virtualxid | | | 9673 | ExclusiveLock | t |

(2 rows)

Seems a bit surprising but pg_locks shows only locks from active processes and once the statement completes the process is not active anymore and the lock is gone.

So what we can do to exclude our own locks is this:

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks where pid != pg_backend_pid();

locktype | database | relation | pid | mode | granted | waitstart

----------+----------+----------+-----+------+---------+-----------

(0 rows)

The story is a bit different if we do the same thing in a transaction in the first session and then query the view in the second session:

-- session 1

postgres=# begin;

BEGIN

postgres=*# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks where pid != pg_backend_pid();

locktype | database | relation | pid | mode | granted | waitstart

----------+----------+----------+-----+------+---------+-----------

(0 rows)

-- session 2

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks where pid != pg_backend_pid();

locktype | database | relation | pid | mode | granted | waitstart

------------+----------+----------+------+-----------------+---------+-----------

relation | postgres | pg_locks | 9119 | AccessShareLock | t |

virtualxid | | | 9119 | ExclusiveLock | t |

(2 rows)

Because the first session is still active and the transaction is open, this session still holds the lock. Ending the transaction releases the lock:

-- session 1

postgres=*# end;

COMMIT

-- session 2

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks where pid != pg_backend_pid();

locktype | database | relation | pid | mode | granted | waitstart

----------+----------+----------+-----+------+---------+-----------

(0 rows)

Lets start doing something with our table in session two:

-- session 2

postgres=# begin;

BEGIN

postgres=*# select * from t;

a

---

1

(1 row)

How does it look like in session one?

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks where pid != pg_backend_pid();

locktype | database | relation | pid | mode | granted | waitstart

------------+----------+----------+------+-----------------+---------+-----------

relation | postgres | t | 9673 | AccessShareLock | t |

virtualxid | | | 9673 | ExclusiveLock | t |

(2 rows)

This is consistent to the results above. As the second session is reading from the table and the transaction is still open, the lock is still there, no surprise. Lets update the row in the still open transaction and then have a look again in session one:

-- session 2

postgres=*# update t set a = 2 where a = 1;

UPDATE 1

-- session 1

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart from pg_locks where pid != pg_backend_pid();

locktype | database | relation | pid | mode | granted | waitstart

---------------+----------+----------+------+------------------+---------+-----------

relation | postgres | t | 9673 | AccessShareLock | t |

relation | postgres | t | 9673 | RowExclusiveLock | t |

virtualxid | | | 9673 | ExclusiveLock | t |

transactionid | | | 9673 | ExclusiveLock | t |

(4 rows)

Now we got a “RowExclusiveLock” in addition and that means nobody else can do anything with the new row/tuple we’ve just created. When someone else (third session) is trying to perform the same update this will block:

-- session 3

postgres=# begin;

BEGIN

postgres=*# update t set a = 2 where a = 1;

… and the results in the first session look like this (I have added an order by and the “page” and “tuple” columns):

-- session 1

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart,page,tuple from pg_locks where pid != pg_backend_pid() order by pid;

locktype | database | relation | pid | mode | granted | waitstart | page | tuple

---------------+----------+----------+------+------------------+---------+-------------------------------+------+-------

relation | postgres | t | 9673 | RowExclusiveLock | t | | |

virtualxid | | | 9673 | ExclusiveLock | t | | |

transactionid | | | 9673 | ExclusiveLock | t | | |

relation | postgres | t | 9673 | AccessShareLock | t | | |

tuple | postgres | t | 9691 | ExclusiveLock | t | | 0 | 1

transactionid | | | 9691 | ExclusiveLock | t | | |

relation | postgres | t | 9691 | RowExclusiveLock | t | | |

virtualxid | | | 9691 | ExclusiveLock | t | | |

transactionid | | | 9691 | ShareLock | f | 2026-01-08 09:14:00.330564+01 | |

(9 rows)

What we see now is, that the third session (pid=9691) is trying to get the lock but can’t and must wait (waitstart) for the second session to release the lock. We can also see that the row/tuple in question is in block/page 0 and the tuple/row is the first one in that block. Once session two either commits or rollbacks the update in session three will proceed but there is nothing to update as there is no row which matches the where clause anymore.

-- session 2

postgres=*# commit;

COMMIT

-- session 3

UPDATE 0

postgres=*# select * from t;

a

---

2

(1 row)

postgres=*# end;

COMMIT

In session one we can verify that all locks are gone:

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart,page,tuple from pg_locks where pid != pg_backend_pid() order by pid;

locktype | database | relation | pid | mode | granted | waitstart | page | tuple

----------+----------+----------+-----+------+---------+-----------+------+-------

(0 rows)

Another example with 5 rows in the table and two sessions update only the last row:

-- session 1

postgres=# truncate t;

TRUNCATE TABLE

postgres=# insert into t values (1),(2),(3),(4),(5);

INSERT 0 5

-- session 2

postgres=# begin;

BEGIN

postgres=*# update t set a = -1 where a = 5;

UPDATE 1

-- session 3

postgres=# begin;

BEGIN

postgres=*# update t set a = -1 where a = 5;

-- session 1

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart,page,tuple from pg_locks where pid != pg_backend_pid() order by pid;

locktype | database | relation | pid | mode | granted | waitstart | page | tuple

---------------+----------+----------+------+------------------+---------+-------------------------------+------+-------

relation | postgres | t | 9673 | RowExclusiveLock | t | | |

virtualxid | | | 9673 | ExclusiveLock | t | | |

transactionid | | | 9673 | ExclusiveLock | t | | |

virtualxid | | | 9691 | ExclusiveLock | t | | |

transactionid | | | 9691 | ShareLock | f | 2026-01-08 09:58:38.867534+01 | |

tuple | postgres | t | 9691 | ExclusiveLock | t | | 0 | 5

transactionid | | | 9691 | ExclusiveLock | t | | |

relation | postgres | t | 9691 | RowExclusiveLock | t | | |

(8 rows)

Now it is row number 5 in block 0.

The rows with “transactionid” and “virtualxid” are about the transactions, details here. Those values are also recorded in pg_locks:

-- session 1

postgres=# select locktype,database::regdatabase,relation::regclass,pid,mode,granted,waitstart,page,tuple,transactionid,virtualxid from pg_locks where pid != pg_backend_pid() order by pid;

locktype | database | relation | pid | mode | granted | waitstart | page | tuple | transactionid | virtualxid

---------------+----------+----------+------+------------------+---------+-------------------------------+------+-------+---------------+------------

relation | postgres | t | 9673 | RowExclusiveLock | t | | | | |

virtualxid | | | 9673 | ExclusiveLock | t | | | | | 24/22

transactionid | | | 9673 | ExclusiveLock | t | | | | 836 |

virtualxid | | | 9691 | ExclusiveLock | t | | | | | 25/18

transactionid | | | 9691 | ShareLock | f | 2026-01-08 09:58:38.867534+01 | | | 836 |

tuple | postgres | t | 9691 | ExclusiveLock | t | | 0 | 5 | |

transactionid | | | 9691 | ExclusiveLock | t | | | | 837 |

relation | postgres | t | 9691 | RowExclusiveLock | t | | | | |

When there are only a few locks in the database this is an easy way to find out who is blocking whom but if you have hundreds of sessions and locks there is a more convenient way to check for blockers and waiters, and this is the function pg_blocking_pids:

-- session 1

postgres=# select pg_blocking_pids(9691);

pg_blocking_pids

------------------

{9673}

(1 row)

Another option to check more details in regards to row locking is the extension pgrowlocks:

-- session 1

postgres=# create extension pgrowlocks;

CREATE EXTENSION

postgres=# select * from t as a, pgrowlocks('t') as b where b.locked_row = a.ctid;

a | locked_row | locker | multi | xids | modes | pids

---+------------+--------+-------+-------+-------------------+--------

5 | (0,5) | 836 | f | {836} | {"No Key Update"} | {9673}

(1 row)

In the next post we’ll look more closely on transaction IDs and virtual transaction IDs when it comes to locking.

L’article PostgreSQL: An introduction to row locking est apparu en premier sur dbi Blog.

Managing Ad Hoc PS/Queries in PeopleSoft

- Do not increase the PIA inactivity timeout or PS/Query timeouts. They are both delivered set at 20 minutes. They are primarily there to protect users and the system as a whole.

- If you have already increased them, you may need to bring them down in stages, allowing users to get used to the new limits and handle exceptional queries.

- If you permit users to use PS/Query, then the PIA inactivity timeout should be the same ICQuery Tuxedo service timeout set on the PSQRYSRV server (default 20 minutes)

- If you do not use online PS/Query, then the PIA timeout can be reduced as low as the PSAPPSRV timeout (default 5 minutes).

- It is not possible to regulate when users run queries in the PIA. Users, quite legitimately, also need to run queries that may take longer than the timeouts. Encourage users to schedule such queries on the process scheduler.

- You can restrict the number of online queries that execute concurrently on the database by restricting the maximum number of PSQRYSRV processes. There is still nothing to prevent users from starting queries which then just queue up on the application server. Users cannot distinguish between a query that is queuing in the application server or executing on the database. The service timeout starts from when the service request is queued in the application server.

- However, you still don’t want even scheduled queries to run for hours on end. Set a maximum processing time in the process definition for the PSQUERY application engine. Say 4 hours. The process scheduler will terminate the PSQUERY process after this amount of time.

- A common cause of long-running PS/Queries is a mistake in the query definition, resulting in missing or incorrect joins in the SQL.

- A SQL query can continue to run on the database server after the client-side process, the PSQRYSRV server process or the PSQUERY application engine process, has terminated, at least until the current fetch returns. A common cause is that a large sort or hash operation must complete before the first fetch returns.

- Enable dead connect detection by setting SQLNET.EXPIRE_TIME in SQLNET.ORA. The idle Oracle shadow process will periodically poll the client process to check that it is still responsive. If a PSQUERY process has been terminated by the process scheduler, this mechanism should also terminate the session if it is still running on the database.

- Use the database resource manager to allocate and manage CPU allocation and limit run time.

- The resource manager only intervenes when the system runs out of free CPU.

- Define a hierarchy of resource manager consumer groups. Map database sessions to consumer groups using session instrumentation. In general, online processing is higher priority than scheduled processes, which are higher priority than ad-hoc queries.

- Set a maximum CPU/runtime limit for scheduled queries. Using different consumer groups for online and scheduled batch queries.

- For scheduled queries, match the consumer group timeout to the maximum runtime for the PSQUERY application engine.

- For online queries, match the consumer group timeout to the PIA inactivity timeout (which should also be the same as the ICQuery Tuxedo service timeout).

- See A Resource Manager CPU Time Limit for PS/Queries Executed Online in the PIA

- NB: Resource Manager is an Oracle Enterprise Edition Feature.

- On Exadata, use SQL Quarantine to identify queries with a track record of exceeding the consumer group timeout.

- Enable SQL Quarantine for scheduled queries only using a trigger on PSPRQSRQST – see Github: set_prcs_sess_parm_trg.sql

- Quarantined queries are immediately terminated with error ORA-00040: active time limit exceeded - call aborted. For scheduled queries, this error can be found in the process scheduler message log.

- Enable SQL Quarantine for SQL Developer, etc, using an on-logon trigger – see Github: set_sess_parm_trg.sql

- Add run control logging for scheduled PS/Queries

- Whenever a scheduled PS/Query, run via PSQUERY, terminates other than successfully, the SQL query can be found in the message log. However, bind variable values are not recorded.

- Bind variable values may be changed between executions of the same query using the same run control by the same user. Enable run control logging so that it is possible to reconstruct the query and bind variables run by the user.

- There is nothing to stop the user from altering the PS/Query between executions, in which case you may need to get the SQL of a long-running query that completes successfully from AWR.

- It is not possible to allocate PS/Queries to a different temporary tablespace because that is determined by the database user ID. Everything in PeopleSoft runs as the Owner ID (usually SYSADM). Unless you are faking a reporting database with a second schema pointed to by a second entry in PS.PSDBOWNER.

So Long 2025 and Welcome 2026

As we wrap up 2025 and head into 2026, it’s worth taking a quick look back and taking a sneak peek ahead.

We were proud to show our leadership in the Oracle community by once again being the top-level Titanium sponsor of the ODTUG Kscope25 conference in Grapevine, Texas. Kscope is the top technical event for people working with Oracle EPM, Oracle APEX, Oracle Cloud Infrastructure, Oracle Database, and other related technologies. It was a lot of fun seeing customers, partners, and friends in person.

This past year was also a great one for Dodeca. We added some initial AI capabilities, delivered significant enhancements for EPM Cloud, and introduced direct support for several new data sources such as Oracle Cloud EDM and Parquet. We also strengthened security by enhancing support for both SAML and OAuth authentication.

We had some fantastic customer presentations as well including a presentation where one customer shared how Dodeca helped their organization save more than 1,600 man-days per quarter. That is just one of many similar stories we hear from customers.

To add to our acclaimed technical support, we introduced free, online, hands-on training for Dodeca. In this 3-hour, instructor-guided class, you can try Dodeca for yourself and see what all the hype is about. As a self-funded, founder-led company, our measure of success isn’t profitability. Instead, it is the success of our customers who continue using our products in mission-critical applications year after year.

We did have some sad news this year. In late October we lost our Chief Operating Officer, Michael McCarty, after a short illness. Michael was a friend for 30 years and helped lead our company for the past 25 years. His experience and humor are deeply missed, and we’re grateful for everything he contributed to our team and our work.

Looking ahead to 2026, we’re excited to keep pushing forward. We’ll continue adding capabilities to help customers eliminate spreadsheet chaos and use standardization, automation, and enterprise integration to bring confidence to the accuracy of corporate spreadsheets. And if you’re not yet a Dodeca customer, hopefully this will be the year you discover what Dodeca can do for you.

Here’s to a great 2026.

Planning GoldenGate 23ai Migration Before Support Expires

At the moment, three versions of GoldenGate are still under Premier Support, but this will very soon change, with GoldenGate 19c and 21c coming out of Premier Support in April this year ! I give below the official Oracle support end dates, as per 2026.

GoldenGate ReleaseRelease dateEnd of Premier SupportEnd of Extended Support23aiMay 2024June 2029June 203221cApril 2021April 2026None19cMay 2019April 2026April 2027Of course, you should always consult the official updated resource (last update in November 2025). In fact, Oracle already extended the support for GoldenGate 19c once, so it’s always good to stay updated on this issue.

I don’t think it should come as a surprise that anything older than GoldenGate 19c is out of support for quite some time now.

Another valuable lesson from this table is that you should never play too much with intermediate release, except if you really need them. If you upgraded to GoldenGate 21c and have Extended Support, your GoldenGate won’t be supported ! However, GoldenGate 19c Extended Support ends only in April 2027.

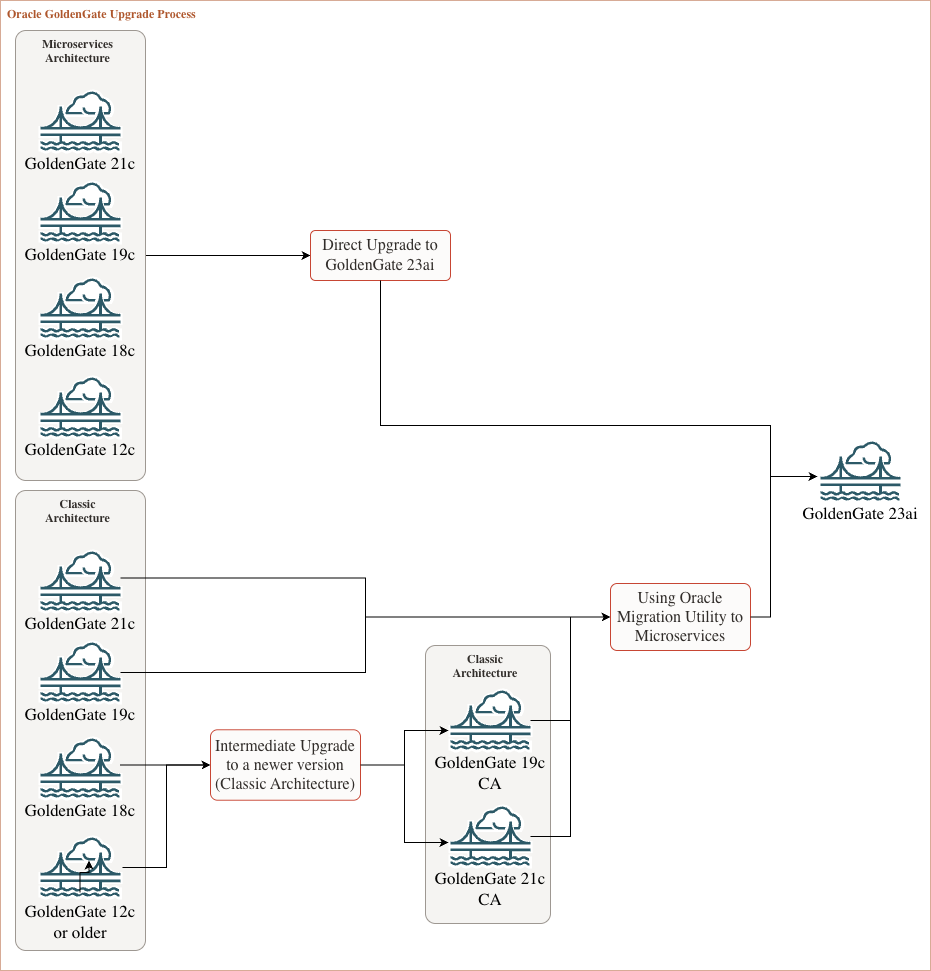

Now would be the perfect time to upgrade to the latest version of GoldenGate, 23ai (soon to be 26ai). With that in mind, the upgrade process is more or less complex, depending on your current situation. I tried to summarize all situations in the following diagram. More explanations are available below, and full upgrade examples will follow in future blog posts.

Diagram representing migration processes to GoldenGate 23ai, depending on the source version and architecture.

Upgrading any Microservices Architecture to GoldenGate 23ai

Diagram representing migration processes to GoldenGate 23ai, depending on the source version and architecture.

Upgrading any Microservices Architecture to GoldenGate 23ai

If you already opted for GoldenGate Microservices Architecture, and you should have since it was first released nearly ten years ago, then the upgrade process is straightforward, with only one step to upgrade to GoldenGate 23ai. Again, the procedure itself is out of scope for this blog.

Upgrading from GoldenGate Classic Architecture to GoldenGate 23aiIf you still have a Classic Architecture installation running, the upgrade process will be more complex, and depends on the version of GoldenGate you have.

Classic Architecture 19c/21c to 23aiIf your version of GoldenGate is not too old (19c/21c), then you can use the Oracle Migration Utility available with the patch 37274898 (now KB100447 in MOS). I will give real migration examples when I have time to write more on the subject.

Classic Architecture 18c or older to 23aiIf you still have a GoldenGate 18c or older with a Classic Architecture, then you will need a two steps process to upgrade to GoldenGate 23ai.

- First a standard upgrade to a more recent version of GoldenGate, either 19c or 21c, with Classic Architecture.

- Then, use the Oracle Migration Utility mentioned above, to migrate from Classic Architecture to GoldenGate 23ai with Microservices Architecture.

As always, keeping your GoldenGate installations up-to-date is the best way to ensure you stay supported, secured and avoid a painful migration process when it becomes too late.

L’article Planning GoldenGate 23ai Migration Before Support Expires est apparu en premier sur dbi Blog.

The End of Manual GoldenGate Operations: Why GoldenGateMCP Signals a Fundamental Shift

Manual database troubleshooting is ending. Operational intelligence transforms how DBAs work. Here's what's next.

The post The End of Manual GoldenGate Operations: Why GoldenGateMCP Signals a Fundamental Shift appeared first on DBASolved.

Linux Expectation Management – scripting password changes using expect and secret-tool

Imagine you find yourself in a situation where you need to keep the passwords of a linux account in synch across multiple servers.

You don’t have admin privileges, and the accounts may be used in such a way that renders use of SSH keys impractical.

Depending on the number of servers involved, changing the password by hand may become something of a chore.

So, how could you automate this in such a way that enables you to run a single script, but which also avoids exposing the password in plain text ?

Fortunately, this being Linux, there’s a tool for that. Two tools, in fact :

- expect provides the ability to supply input to a program (e.g. ssh, passwd) programatically

- secret-tool allows the secure storage and retrieval of secrets, such as keys and passwords.

Now, when it comes to scripting, mine tend to be practical rather than elegent. Less Starship Enterprise and more USS Ceritos. This may explain some of the examples that follow…