Bobby Durrett's DBA Blog

ORA-04063 Errors During Datapatch Rollback of Oracle Patch 30763851

I am working on an Oracle 19c database running on a RHEL 7 VM. I have been trying to roll back this patch:

Patch 30763851 IMPDP 11.2 TO 18C OR HIGHER HITS ORA-904 WHEN TABLES HAVE EXTENDED STATISTICS

I have it sitting on top of 19.5

Database Release Update: 19.5.0.0.191015 (30125133)

The Opatch rollback command ran fine, but Datapatch threw these errors:

[2025-10-22 18:50:15] -> Error at line 11329: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11331: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11333: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11335: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11337: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11339: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11341: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11343: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11355: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_P2TPARTCOL_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11357: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_P2TPARTCOL_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11363: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_SP2TPARTCOL_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11365: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_SP2TPARTCOL_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11381: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_COLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11383: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_COLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11385: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_COLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11387: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_COLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11389: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_PCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11391: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_PCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11393: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_PCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11395: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_PCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11397: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_P2TCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11399: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_P2TCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11401: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_SP2TCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11403: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_SP2TCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11405: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_COLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11407: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_COLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11409: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_PCOLUMN_VIEW" has errors"

[2025-10-22 18:50:15] -> Error at line 11411: script rdbms/admin/dpload.sql

[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_PCOLUMN_VIEW" has errors"

I checked DBA_OBJECTS, and all the SYS objects are VALID. I tried querying one of the views and it worked fine. So, I went to My Oracle Support, our Oracle database support site, and searched for ORA-04063 and one of the view names and found nothing. A Google search also came up empty. I tried just ignoring it but that didn’t work.

My whole goal in doing this was to apply the October 2025 patches that just came out this week. But because the SQL patch registry indicated that patch 30763851 rolled back with errors, every time I applied a new patch it would try to roll 30763851 back first and error again. Here is what DBA_REGISTRY_SQLPATCH looked like after two failed rollback attempts:

INSTALL_ID PATCH_ID PATCH_TYPE ACTION STATUS

---------- ---------- ---------- --------------- --------------

1 30125133 RU APPLY SUCCESS

2 30763851 INTERIM APPLY SUCCESS

3 30763851 INTERIM ROLLBACK WITH ERRORS

3 30763851 INTERIM ROLLBACK WITH ERRORS

4 30763851 INTERIM ROLLBACK WITH ERRORS

4 30763851 INTERIM ROLLBACK WITH ERRORS

Each rollback attempt tried twice so I have four failures with two rollback attempts.

I opened a case with Oracle support just in case this was a known issue that wasn’t available for me to find on my own. Sometimes that happens. But while waiting on Oracle I kept trying to fix it myself.

The errors refer to $ORACLE_HOME/rdbms/admin/dpload.sql which I think reloads datapump after some change. It runs catmetviews.sql and catmetviews_mig.sql which have the CREATE VIEW statements for the views getting errors, like SYS.KU$_OPQTYPE_VIEW. But the code in catmetviews_mig.sql wasn’t straightforward. I imagined running some sort of trace to see why the script was throwing the ORA-04063 errors, but I never had to take it that far.
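A quick way to see which views the dpload.sql errors touch is to tally the ORA-04063 lines from the Datapatch output. The following is only a sketch: the function name count_invalid_views and the sample list are made up for illustration, and it assumes the log lines look like the ones shown earlier.

```python
import re
from collections import Counter

# Matches the object name in lines like:
#   [2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"
ORA_04063 = re.compile(r'ORA-04063: view "([^"]+)" has errors')


def count_invalid_views(log_lines):
    """Return a Counter of view name -> number of ORA-04063 lines."""
    counts = Counter()
    for line in log_lines:
        match = ORA_04063.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# A few lines in the same format as the Datapatch output.
sample = [
    '[2025-10-22 18:50:15] -> Error at line 11329: script rdbms/admin/dpload.sql',
    '[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"',
    '[2025-10-22 18:50:15] -> Error at line 11355: script rdbms/admin/dpload.sql',
    '[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_P2TPARTCOL_VIEW" has errors"',
    '[2025-10-22 18:50:15] - ORA-04063: view "SYS.KU$_OPQTYPE_VIEW" has errors"',
]
for view, hits in count_invalid_views(sample).most_common():
    print(view, hits)
```

Feeding it the whole log shows that only a handful of KU$ metadata views are involved, which makes it easier to search for them one at a time.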

At first all this stressed me out. I thought, “I can’t back out this patch. I will never be able to patch this database to a current patch level.” Then I chilled out and realized that if it was a problem with Oracle’s code, they had to help me back out 30763851. But it might take some time to work through an SR with Oracle.

But what if it wasn’t an issue with Oracle’s code but something weird in our environment? I didn’t think it indicated a real problem, but there were some weird messages coming out that I am used to seeing. They were from triggers that come with an auditing tool called DB Protect. They were throwing messages like this:

[SYS.SENSOR_DDL_TRIGGER_A] Caught a standard exception: aliasId=100327, error=-29260, message="ORA-29260: network error: TNS:no listener"

We are used to seeing these errors when we do DDL, but prior to this they didn’t cause any actual problems. We had already decommissioned the DB Protect tool but had not cleaned up the triggers. Dropping SYS.SENSOR_DDL_TRIGGER_A eliminated the ORA-04063 errors.

Probably no one will ever encounter this same issue, but I thought I would document it. If you have the same symptoms and you are not using DB Protect any more, do these commands:

DROP TRIGGER SYS.SENSOR_DDL_TRIGGER_A;

DROP TRIGGER SYS.SENSOR_DDL_TRIGGER_B;

I think the A trigger was the problem, but we don’t need either one.

Anyway, this post is just so someone who searches for ORA-04063 and one of the views will find this information and drop the triggers if they have them. It’s a long shot but might as well document it for posterity and for me.

Bobby

Migrating WordPress to Amazon Linux 2023

I recently migrated my WordPress blog from Amazon Linux 2 to Amazon Linux 2023 to take advantage of newer software versions. The process was mostly smooth, but I wanted to document a few things I ran into—especially around encryption setup.

I followed these instructions for setting WordPress up on AL 2023: https://docs.aws.amazon.com/linux/al2023/ug/hosting-wordpress-aml-2023.html

The first thing I had to do was follow these instructions to get Apache, PHP, and MariaDB set up: https://docs.aws.amazon.com/linux/al2023/ug/ec2-lamp-amazon-linux-2023.html

I also had to set up a new elastic ip: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/elastic-ip-addresses-eip.html

I used the security group that I had for my existing AL 2 EC2 so I had the right ip addresses opened up.

I copied the database over from the old to new EC2:

on the old server:

mysqldump -u blogdbuser -pMYPASSWORD --single-transaction --routines --triggers blogdb > blogdb.sql

on the new server:

mysql -vvv -n -u blogdbuser -pMYPASSWORD blogdb < blogdb.sql > blogdb.log

Similarly for the web pages and other files:

New server:

# clear out existing web server directory

cd /var/www/html

sudo rm -fr *

sudo rm -f .htaccess .user.ini .wpcli

Old server:

sudo tar -cvf /home/ec2-user/html.tar /var/www/html

New server:

sudo tar -xvf /home/ec2-user/html.tar -C /

Moving the DNS entries over to the new elastic ip address was easy. I just had to change the “A” records for bobbydurrettdba.com and www.bobbydurrettdba.com in Route 53. First, I changed the TTL from one day to 10 minutes so my changes would propagate quickly while I messed with things. Later I set these back. One day was 86400 seconds. Ten minutes was 600 seconds.

The biggest challenge I had was getting encryption set up properly. The documentation missed a couple of key steps. I thought about just writing this post about the encryption part because it was the only thing that wasn’t straightforward.

I was following the steps in https://docs.aws.amazon.com/linux/al2023/ug/SSL-on-amazon-linux-2023.html and using the Let’s Encrypt script for getting certificates. This was called Certbot and documented here: https://eff-certbot.readthedocs.io/en/stable/install.html

There were two key things missing from this documentation:

- How to install Snap on Amazon Linux 2023

- The need for a port 80 VirtualHost

Certbot wants you to install something called Snap to use to install Certbot. I got this from a Google search:

sudo dnf install openssl mod_ssl

sudo wget -O /etc/yum.repos.d/snapd.repo https://bboozzoo.github.io/snapd-amazon-linux/al2023/snapd.repo

sudo dnf install snapd -y

sudo systemctl enable --now snapd.socket

sudo ln -s /var/lib/snapd/snap /snap

sudo reboot

The other undocumented thing was that I had to add these lines after the “Listen 80” line in /etc/httpd/conf/httpd.conf:

sudo vi /etc/httpd/conf/httpd.conf

after Listen 80 add this:

<VirtualHost *:80>

DocumentRoot "/var/www/html"

ServerName bobbydurrettdba.com

ServerAlias www.bobbydurrettdba.com

</VirtualHost>

Then I just ran the documented Certbot commands like this:

sudo snap install --classic certbot

sudo ln -s /snap/bin/certbot /usr/bin/certbot

sudo certbot --apache

It took me a few iterations to get this right. Anyway, I just wanted to post a quick note documenting this.

Bobby

What I Learned About Machine Learning – Don’t Use It!

I set out to use Machine Learning to monitor Oracle databases but ultimately chose not to. The most valuable lesson I learned was the importance of visualizing my data to determine the best approach. By examining real production performance issues, I developed scripts that trigger alerts during potential performance problems. I used six weeks of historical performance metrics to compare current behavior against past trends. I already knew that a high value for a given metric didn’t necessarily indicate a performance issue with business impact. In the end, I built a script that sends an alert when certain metrics are the top wait event and are performing three times worse than at any point in the past six weeks. While it doesn’t guarantee a business impact, it flags anomalies that are unusual enough to warrant investigation.

2. Why I Tried Machine Learning

I think anyone reading this post would know why I tried to use Machine Learning. It’s very popular now. I took a class online about ML with Python and I bought a book about the same subject. I had already used Python in some earlier classes and in my work, so it was natural to take a class/read a book about ML based on Python. As I mentioned in an earlier post, I studied AI in school many years ago and there have been many recent advances so naturally I wanted to catch up with the current state of the art in AI and ML. Having taken the class, and later having read a book, I needed some application of what I learned to a valuable business problem. We had a couple of bad performance problems with our Oracle databases and our current monitoring didn’t catch them. So, I wanted to use ML – specifically with PyTorch in a Python script – to write a script that would have alerted on those performance problems.

3. Failed Attempt Number One – Autoencoder

In my first attempt to write a monitoring script, I worked with Copilot and ChatGPT to hack together a script using an autoencoder model. I queried a bunch of our databases and collected a list of all the wait events. My idea was to treat each wait as an input to the autoencoder. For each wait, I pulled the number of events and the total wait time over the past hour. I didn’t differentiate between foreground and background waits; I figured AI could sort that out. I also included DB time and DB CPU time. These were all deltas between two hourly snapshots, so the data represented CPU and waits for a given hour.

The concept behind the autoencoder was to compress these inputs into a smaller set of values and then reconstruct the original inputs. With six weeks of hourly snapshots, I trained the autoencoder on that historical data, assuming it represented “normal” behavior. The idea was that any new snapshot that couldn’t be accurately reconstructed by the model would be considered abnormal and trigger an alert.

I got the model fully running across more than 20 production databases. I tuned the threshold so it would have caught two recent known performance issues. But in practice, it didn’t work well. It mostly triggered alerts on weekends when backups were running and I/O times naturally spiked. It wasn’t catching real performance problems—it was just reacting to predictable noise.
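The alerting idea, flagging a snapshot that the model cannot reconstruct well, can be sketched without any ML library. This is a minimal illustration under stated assumptions: it uses mean squared error as the reconstruction measure, and the function names, the sample values, and the threshold are all hypothetical rather than taken from the actual monitoring script.

```python
def reconstruction_error(original, reconstructed):
    """Mean squared error between one snapshot's inputs and the model's output."""
    return sum((o - r) ** 2 for o, r in zip(original, reconstructed)) / len(original)


def is_abnormal(original, reconstructed, threshold):
    """Flag the snapshot when the autoencoder reconstructs it poorly."""
    return reconstruction_error(original, reconstructed) > threshold


# Hypothetical scaled metrics for two snapshots and what a model gave back.
normal_in, normal_out = [0.10, 0.20, 0.30], [0.12, 0.18, 0.31]
odd_in, odd_out = [0.10, 0.90, 0.30], [0.11, 0.25, 0.29]

THRESHOLD = 0.01  # assumed value; in practice tuned against known incidents
print(is_abnormal(normal_in, normal_out, THRESHOLD))
print(is_abnormal(odd_in, odd_out, THRESHOLD))
```

The weakness described above shows up directly in this logic: anything the training data did not represent well, such as weekend backup I/O, produces a large error whether or not it is a real problem.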

Here is the PyTorch autoencoder model:

import torch.nn as nn

# Define the Autoencoder model
class TabularAutoencoder(nn.Module):
    def __init__(self, input_dim):
        super(TabularAutoencoder, self).__init__()
        # Encoder
        self.encoder = nn.Sequential(
            nn.Linear(input_dim, 64), # Reduce dimensionality from input_dim to 64
            #nn.LayerNorm(64), # Layer Normalization for stability
            nn.ReLU(True),
            nn.Dropout(0.2), # Dropout for regularization
            nn.Linear(64, 32), # Reduce to 32 dimensions
            nn.ReLU(True),
            nn.Linear(32, 16), # Bottleneck layer (compressing to 16 dimensions)
        )
        # Decoder
        self.decoder = nn.Sequential(
            nn.Linear(16, 32), # Expand back to 32 dimensions
            nn.ReLU(True),
            nn.Linear(32, 64), # Expand to 64 dimensions
            #nn.LayerNorm(64), # Layer Normalization for stability
            nn.ReLU(True),
            nn.Linear(64, input_dim), # Expand back to original input dimension
        )

    def forward(self, x):
        x = self.encoder(x)
        x = self.decoder(x)
        return x

Much more recently, I came back to using a PyTorch model to build an alerting script. I had spent some time learning about large language models and exploring how to apply GenAI to business problems, rather than working with these simpler ML models in PyTorch. But I decided to give it another try and see if I could fix the limitations I ran into with the autoencoder approach.

I didn’t really expect binary classification to work, but it’s the simplest kind of ML model, so I thought it was worth a shot. I selected a few snapshots that occurred during a severe performance problem on an important Oracle database. I labeled these as 1 (problem), and the rest as 0 (no problem). It was easy enough to train a model that correctly identified the problem snapshots during training and testing.

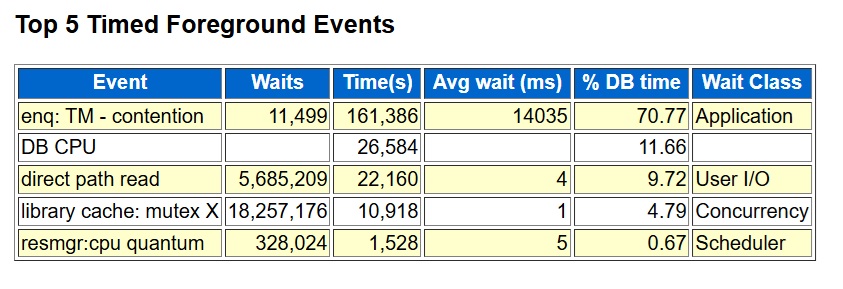

However, when I applied the model to later snapshots it hadn’t seen before, it started generating false alarms that didn’t make sense. It got even worse when I tried it on a completely different database. I had expected the model to pick up on the fact that the top wait event during the problem period was enq: TM – contention. A quick glance at the top foreground events in an AWR report made it obvious that this was the issue. But the trained model ended up sending an alert on snapshots that didn’t even have this wait event in the top waits.

Here is the PyTorch binary classification model:

"""

Mostly got the model from Copilot. input_dim is the number of performance
metrics which is over 100. Gets it down to 64 to 32 and then 1.
The ReLU functions add "non-linear" change to allow it to do
functions that the linear steps can't do.
"""
class MyModel(nn.Module):
    def __init__(self, input_dim):
        super(MyModel, self).__init__()
        self.fc1 = nn.Linear(input_dim, 64)
        self.relu1 = nn.ReLU()
        self.fc2 = nn.Linear(64, 32)
        self.relu2 = nn.ReLU()
        self.output = nn.Linear(32, 1)

    def forward(self, x):
        x = self.fc1(x)
        x = self.relu1(x)
        x = self.fc2(x)
        x = self.relu2(x)
        x = self.output(x)
        return x

Here are the foreground waits and cpu from an AWR report of the problem time:

5. Failed Attempt Number Three – Z-Score

While talking with Copilot, I decided to try a simple statistical approach to anomaly detection. One suggestion was to use a z-score. In my context, this meant looking at the top foreground wait event—enq: TM – contention—in the current snapshot and calculating its z-score relative to the mean and standard deviation of that wait’s values in previous snapshots.
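For reference, a z-score is the current value minus the historical mean, divided by the historical standard deviation. Here is a small sketch using only the standard library; the function name z_score, the sample history, and the handling of a completely flat history are assumptions for illustration and are not taken from the actual alerting script.

```python
import math
from statistics import mean, stdev


def z_score(current, history):
    """How many standard deviations `current` sits from the mean of `history`."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # Flat history: no change scores 0, any change is infinitely unusual.
        if current == mu:
            return 0.0
        return math.copysign(float("inf"), current - mu)
    return (current - mu) / sigma


# Hypothetical values of one wait metric in earlier snapshots.
history = [1.0, 2.0, 3.0, 2.0, 1.0, 3.0]
current = 9.0

score = z_score(current, history)
print(round(score, 2), score > 3)
```

A threshold of three standard deviations is the usual starting point, and it is the one discussed later in this post.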

Like the binary classification attempt, the z-score alerting script worked well with the original snapshots from the known problem period. But when I ran the same script against other databases, it produced a lot of false alarms.

Output of z-score script on problem database (alerts on expected snapshot):

SNAP_ID SNAP_DTTIME EVENT_NAME RATIO_ZSCORE AVGWAIT_ZSCORE

---------- ------------------- --------------------- ------------ --------------

151752 2025-06-19 00:00:41 enq: TM - contention 3.0878532 9.74301378

Output on another database (no rows expected):

SNAP_ID SNAP_DTTIME EVENT_NAME RATIO_ZSCORE AVGWAIT_ZSCORE

---------- ------------------- ----------------------------- ------------ --------------

48916 2025-06-22 01:00:01 cursor: pin S wait on X 9.00654128 5.36254393

48917 2025-06-22 02:00:06 cursor: pin S wait on X 7.16211659 8.09929239

48918 2025-06-22 03:00:11 cursor: pin S wait on X 5.41578347 4.52106805

48919 2025-06-22 04:00:13 cursor: pin S wait on X 4.69593403 4.08335551

48920 2025-06-22 05:00:16 cursor: pin S wait on X 3.17592316 3.46979661

49081 2025-06-28 22:00:32 cursor: pin S wait on X 4.62636243 3.15122436

49254 2025-07-06 03:00:04 cursor: pin S wait on X 4.73771834 3.23654318

49255 2025-07-06 04:00:10 cursor: pin S wait on X 3.96208561 3.61732606

49289 2025-07-07 14:00:55 SQL*Net more data from dblink 18.2249941 6.61795927

49364 2025-07-10 17:00:20 library cache lock 89.7469665 392.322777

49365 2025-07-10 18:00:45 library cache lock 14.6228987 120.71596

49760 2025-07-27 05:00:21 cursor: pin S wait on X 5.68469758 3.52381292

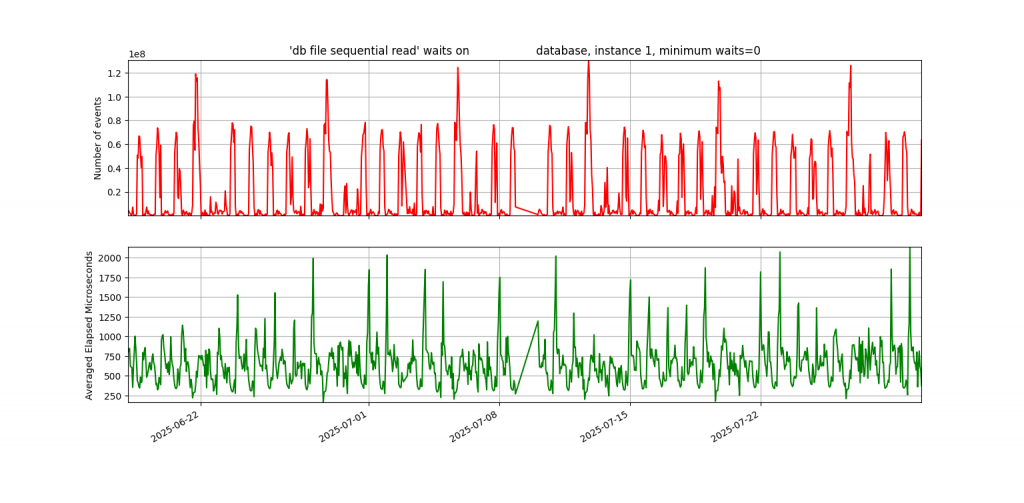

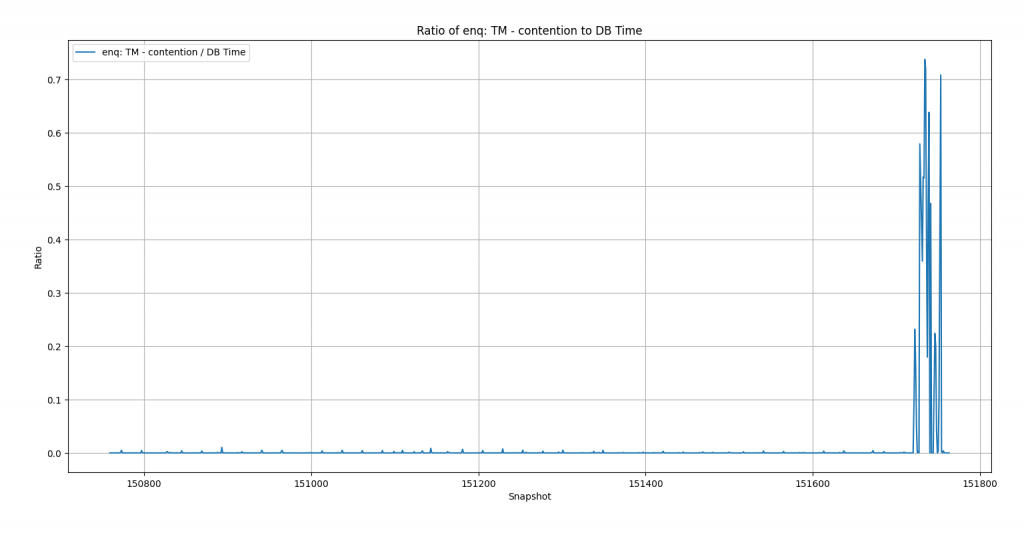

Now I’m getting to the high point of this post. I graphed the waits for the snapshots where the z-score worked and the ones where it didn’t—and the truth jumped out at me. In the database with the known problem, the graph of enq: TM – contention was almost a flat line across the bottom for six weeks, followed by a huge spike during the problem hours. In another database—or even the same one with a different top wait—the graph looked completely different: a wavy pattern, almost like a sine wave, stretching back across the entire six weeks.

“Heck!” I said to myself. “Why don’t I just look for top waits that are never close to this high in the past six weeks?” Sure, it might not always be a real business problem, but if a wait is so dramatically different from six weeks of history, it’s worth at least sending an email—if not a wake-up-in-the-middle-of-the-night page.

With z-score, we were sending an alert when a metric was three standard deviations from the mean. But for wavy waits like db file sequential read, that wasn’t selective enough. So, I designed a new monitoring script: it looks at the top wait in the snapshot, checks if it’s a higher percentage of DB time than CPU, and then compares it to the past six weeks. If it’s more than three times higher than it’s ever been in that history, it triggers an alert. This is all based on the percentage of total DB time.
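The rule in the previous paragraph can be sketched in a few lines of Python. This is only an illustration with hypothetical names (should_alert, factor) and made-up numbers, not the SQL script used in production; it also assumes that an empty history means no alert.

```python
def should_alert(top_wait_pct, cpu_pct, history_pcts, factor=3.0):
    """Apply the "never this high" rule to one snapshot.

    top_wait_pct -- top wait event's share of DB time in the current snapshot
    cpu_pct      -- DB CPU's share of DB time in the current snapshot
    history_pcts -- the same wait's share of DB time in each earlier snapshot
    """
    if top_wait_pct <= cpu_pct:
        return False  # CPU dominates, so the wait is not the story
    if not history_pcts:
        return False  # assumption: stay quiet with no history to compare
    return top_wait_pct > factor * max(history_pcts)


# Hypothetical numbers: a wait that idled near 1% of DB time, then spiked.
flat_history = [0.5, 1.0, 0.8, 1.2]
print(should_alert(60.0, 20.0, flat_history))

# A "wavy" wait that regularly reaches 30% does not trip the rule at 45%.
wavy_history = [10.0, 30.0, 12.0, 28.0]
print(should_alert(45.0, 20.0, wavy_history))
```

Comparing against the historical maximum instead of the mean is what keeps the wavy waits quiet: their own peaks set a high bar.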

First the wavy graph of db file sequential read waits:

Next, this is the key graph to this entire post. Not wavy:

The light clicked on when I saw this graph. The enq: TM – contention waits were insignificant until the problem occurred.

Here is the script that checks if we should alert: neveralert.sql

7. Don’t Check Your Brain at the Machine Learning Door

When I started with the autoencoder script, I felt overwhelmed, like many people do when first approaching Artificial Intelligence and Machine Learning. I expected the model to magically work without fully understanding how or why. I relied on chat tools to help me piece together code I didn’t grasp, and when it didn’t work, I couldn’t explain why. I’m trying to be honest about my journey here so it might help others and remind myself what I learned.

Each step—from binary classification to z-score to manual logic—brought me closer to a solution rooted in my own experience. You must use your own brain. This has always been true in Oracle performance work: you can’t just follow something you read or heard about without understanding it. You need to think critically and apply your domain knowledge.

If it sounds like I didn’t get anything out of my machine learning training, that’s not the case. Both the class and the book emphasized the importance of visualizing data to guide modeling decisions. They used Matplotlib to create insightful graphs, and while I’ve used simpler visualizations in my own PythonDBAGraphs repo, this experience showed me the value of going deeper with data visualization. I think both the class and book would agree: visualizing your data is one of the best ways to decide how to use it.

Even though this journey didn’t end with a PyTorch-based ML solution, it sharpened my understanding of both ML and Oracle performance—and I’m confident it will help me build better solutions in the future.

Bobby

Picking Right Plan for SQL Profile

On Sunday, four batch jobs that normally run in an hour had been stuck for 3 hours and had not completed the first unit of work out of many. Earlier in the day the on-call DBA had applied a SQL Profile, cancelled the jobs, and rerun them, but it did not help. We picked a different SQL Profile, killed the jobs, and the jobs then ran normally. How did we figure out the right plan to use for a SQL Profile?

The main clue came from the output of my sqlstat.sql script:

The good plan seemed to be 2367956558. EXECUTIONS_DELTA = 1 means that the SQL finished in that hour. Elapsed Average ms of 45177.105 was 45 seconds. 19790.066 was 19 seconds.

PLAN_HASH_VALUE END_INTERVAL_TIME EXECUTIONS_DELTA Elapsed Average ms

--------------- ------------------------- ---------------- ------------------

2367956558 20-APR-25 01.00.23.638 AM 1 45177.105

2367956558 20-APR-25 03.00.29.921 AM 1 19790.066

The other plans 2166514251 and 3151484146 seemed to have executions that spanned multiple hours. For example, the first 2166514251 line had EXECUTIONS_DELTA = 0 which means it didn’t finish in that hour. Plus, the elapsed time of 8995202.39 ms = 8995 seconds = 2.5 hours suggests that it was running in parallel, probably for the entire hour.

PLAN_HASH_VALUE END_INTERVAL_TIME EXECUTIONS_DELTA Elapsed Average ms

--------------- ------------------------- ---------------- ------------------

2166514251 20-APR-25 01.00.23.638 AM 0 8995202.39

So, it seems clear that 2367956558 is the best plan.

I could say a lot more about this incident, but I wanted to focus on the sqlstat.sql output. The values of EXECUTIONS_DELTA and Elapsed Average ms are keys to identifying plans with the best behavior.
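The same reasoning can be written down as a small filter: keep only the plans whose executions finished within a snapshot interval, and convert their elapsed averages to seconds. This is a sketch with a hypothetical function name, not part of sqlstat.sql, and it only looks at the three output rows quoted above.

```python
def finished_plans(rows):
    """Keep plans whose executions completed inside a snapshot interval.

    rows -- iterable of (plan_hash_value, executions_delta, elapsed_avg_ms)
    Returns {plan_hash_value: best (lowest) elapsed average in seconds}.
    """
    best = {}
    for plan, executions_delta, elapsed_avg_ms in rows:
        if executions_delta < 1:
            continue  # still running at the end of the interval
        seconds = elapsed_avg_ms / 1000.0
        if plan not in best or seconds < best[plan]:
            best[plan] = seconds
    return best


# Rows copied from the sqlstat.sql output above.
rows = [
    (2367956558, 1, 45177.105),
    (2367956558, 1, 19790.066),
    (2166514251, 0, 8995202.39),
]
for plan, seconds in finished_plans(rows).items():
    print(plan, round(seconds, 1))
```

A filter like this is only a first pass. A plan that never finishes within an interval drops out of the result entirely, so the raw rows are still worth reading.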

Bobby

Matplotlib Oracle Example

Everyone who has heard the old saying “a picture is worth a thousand words” appreciates its simple wisdom. With Oracle databases you have situations where a graph of the output of a SQL query is easier to understand than the standard text output. It’s helpful to have a simple way to graph Oracle data, and Python has widely used libraries that make it easy.

This post describes a Python script that graphs data from an Oracle database using the Matplotlib graphics library. The script uses three widely used Python libraries: cx_Oracle, NumPy, and Matplotlib. This post provides a simple and easily understood example that can be reused whenever someone needs to graph Oracle data. It is written as a straight-line program without any functions or error handling to keep it as short and readable as possible. It demonstrates the pattern of cx_Oracle -> NumPy -> Matplotlib and the use of Matplotlib’s object-oriented approach.

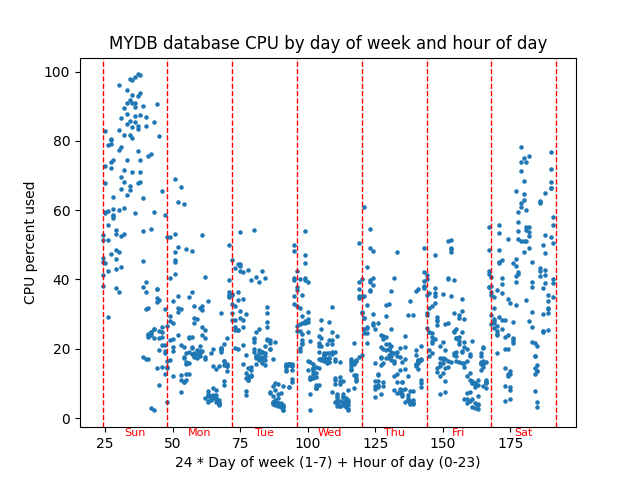

Here is an example graph:

The script graphs database server percent CPU used versus a combination of the day of week and the hour of the day to see if there is any pattern of CPU usage throughout a typical week. This graph has about 6 weeks of hourly AWR snapshots graphed in a scatter plot with CPU percentage on the Y axis and (24 * day of week) + hour of day as the X axis. You could think of the X axis as the hour of the week. This graph might be helpful in performance tuning because it shows whether CPU usage follows a weekly pattern.

Here is the current version of the script: scatter.py.

The script has three main parts which correspond to the three non-internal Python libraries that I use:

- cx_Oracle – Query the CPU data from an Oracle database

- NumPy – Massage query data to get it ready to be graphed

- Matplotlib – Graph the data

These libraries all have lots of great documentation, but Matplotlib’s documentation is confusing at first. At least it was for me. Here are three useful links:

Quick start – This is a great overview. The picture of the “Parts of a Figure” is helpful. I don’t know if earlier versions of Matplotlib had this picture.

Axes – This is a nice list of all the methods of an Axes object. Most of the code in the example script involves calling these methods. I have trouble finding these methods using a Google search, so I bookmarked this link.

Figure – The example script does not call any Figure object methods, but I wanted to document where to find them here. I bookmarked this URL as well as the Axes one because a Matplotlib graph is composed of at least one Figure and Axes object. With the Quick start link and these two lists of methods you have all you need to write Matplotlib scripts.

cx_Oracle

The query for this graph pulls operating system CPU metrics from the DBA_HIST_OSSTAT view and uses them to calculate the percent of the time the CPU is busy. It is made of two subqueries in a with statement and the final main query.

with

myoscpu as

(select

busy_v.SNAP_ID,

busy_v.VALUE BUSY_TIME,

idle_v.VALUE IDLE_TIME

from

DBA_HIST_OSSTAT busy_v,

DBA_HIST_OSSTAT idle_v

where

busy_v.SNAP_ID = idle_v.SNAP_ID AND

busy_v.DBID = idle_v.DBID AND

busy_v.INSTANCE_NUMBER = idle_v.INSTANCE_NUMBER AND

busy_v.STAT_NAME = 'BUSY_TIME' AND

idle_v.STAT_NAME = 'IDLE_TIME'),

The myoscpu subquery pulls the CPU busy and idle times from the view along with the snapshot id. I think these are totals since the database last came up, so you have to take the difference between their values at two different points in time to get the CPU usage for that time.

myoscpudiff as

(select

after.SNAP_ID,

(after.BUSY_TIME - before.BUSY_TIME) BUSY_TIME,

(after.IDLE_TIME - before.IDLE_TIME) IDLE_TIME

from

myoscpu before,

myoscpu after

where before.SNAP_ID + 1 = after.SNAP_ID

order by before.SNAP_ID)

The myoscpudiff subquery gets the change in busy and idle time between two snapshots. It is built on myoscpu. My assumption is that the snapshots are an hour apart which is the case on the databases I work with.

select

to_number(to_char(sn.END_INTERVAL_TIME,'D')) day_of_week,

to_number(to_char(sn.END_INTERVAL_TIME,'HH24')) hour_of_day,

100*BUSY_TIME/(BUSY_TIME+IDLE_TIME) pct_busy

from

myoscpudiff my,

DBA_HIST_SNAPSHOT sn

where

my.SNAP_ID = sn.SNAP_ID

order by my.SNAP_ID

The final query builds on myoscpudiff to give you the day of the week which ranges from 1 to 7 which is Sunday to Saturday, the hour of the day which ranges from 0 to 23, and the cpu percent busy which ranges from 0 to 100.
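The arithmetic the query performs, subtracting cumulative counters between consecutive snapshots and then taking busy over busy plus idle, can be seen in isolation in this small Python sketch. The function name and the counter values are hypothetical, and the zero-total guard is an addition that the SQL does not have.

```python
def pct_busy_per_interval(snapshots):
    """Turn cumulative (busy_time, idle_time) totals into percent busy.

    snapshots -- list of (busy_time, idle_time) in snapshot order
    Returns one percentage per pair of consecutive snapshots.
    """
    result = []
    for (busy0, idle0), (busy1, idle1) in zip(snapshots, snapshots[1:]):
        busy = busy1 - busy0
        idle = idle1 - idle0
        total = busy + idle
        # Guard against an empty interval, which the SQL above does not do.
        result.append(100.0 * busy / total if total else 0.0)
    return result


# Hypothetical cumulative counters from three consecutive snapshots.
totals = [(1000, 9000), (1250, 9750), (2050, 9950)]
print(pct_busy_per_interval(totals))
```

Three snapshots give two intervals, which matches the way myoscpudiff pairs each snapshot with the one before it.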

import cx_Oracle

...

# run query retrieve all rows

connect_string = username+'/'+password+'@'+database

con = cx_Oracle.connect(connect_string)

cur = con.cursor()

cur.execute(query)

# returned is a list of tuples

# with int and float columns

# day of week,hour of day, and cpu percent

returned = cur.fetchall()

...

cur.close()

con.close()

The cx_Oracle calls are simple database functions. You connect to the database, get a cursor, execute the query and then fetch all the returned rows. Lastly you close the cursor and connection.

print("Data type of returned rows and one row")

print(type(returned))

print(type(returned[0]))

print("Length of list and tuple")

print(len(returned))

print(len(returned[0]))

print("Data types of day of week, hour of day, and cpu percent")

print(type(returned[0][0]))

print(type(returned[0][1]))

print(type(returned[0][2]))

I put in these print statements to show what the data that is returned from fetchall() is like. I want to compare this later to NumPy’s version of the same data. Here is the output:

Data type of returned rows and one row

<class 'list'>

<class 'tuple'>

Length of list and tuple

1024

3

Data types of day of week, hour of day, and cpu percent

<class 'int'>

<class 'int'>

<class 'float'>

The data returned by fetchall() is a regular Python list and each element of that list is a standard Python tuple. The list is 1024 elements long because I have that many snapshots. I have 6 weeks of hourly snapshots. Should be about 6*7*24 = 1008. The tuples have three elements, and they are normal Python number types – int and float. So, cx_Oracle returns database data in standard Python data types – list, tuple, int, float.

So, we are done with cx_Oracle. We pulled in the database metric that we want to graph versus day and hour and now we need to get it ready to put into Matplotlib.

NumPy

NumPy can do efficient manipulation of arrays of data. The main NumPy type, a ndarray, is a multi-dimensional array and there are a lot of things you can do with your data once it is in an ndarray. You could do the equivalent with Python lists and for loops but a NumPy ndarray is much faster with large amounts of data.

import numpy as np

...

# change into numpy array and switch columns

# and rows so there are three rows and many columns

# instead of many rows and three columns

dataarray = np.array(returned).transpose()

# dataarray[0] is day of week

# dataarray[1] is hour of day

# dataarray[2] is cpu percent

The function np.array() converts the list of tuples into a ndarray. The function transpose() switches the rows and columns so we now have 3 rows of data that are 1024 columns long whereas before we had 1024 list elements with size 3 tuples.
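For comparison, the same swap of rows and columns can be written with plain Python lists. This sketch is not part of scatter.py and uses made-up rows; unlike np.array(), it leaves the int values as int instead of converting everything to one numeric type.

```python
# Hypothetical rows shaped like fetchall() output:
# (day of week, hour of day, cpu percent)
returned = [(1, 0, 12.5), (1, 1, 14.0), (2, 0, 55.25)]

# zip(*rows) pairs up the first elements, then the second, and so on,
# which turns many 3-element rows into 3 long rows.
days, hours, cpu = (list(column) for column in zip(*returned))

print(days)
print(hours)
print(cpu)
```

The NumPy version is still the better fit for the script because the later arithmetic on whole rows needs an ndarray.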

I added print statements to show the new types and numbers.

print("Shape of numpy array after converting returned data and transposing rows and columns")

print(dataarray.shape)

print("Data type of transposed and converted database data and of the first row of that data")

print(type(dataarray))

print(type(dataarray[0]))

print("Data type of the first element of each of the three transposed rows.")

print(type(dataarray[0][0]))

print(type(dataarray[1][0]))

print(type(dataarray[2][0]))

Here is the output:

Shape of numpy array after converting returned data and transposing rows and columns

(3, 1024)

Data type of transposed and converted database data and of the first row of that data

<class 'numpy.ndarray'>

<class 'numpy.ndarray'>

Data type of the first element of each of the three transposed rows.

<class 'numpy.float64'>

<class 'numpy.float64'>

<class 'numpy.float64'>

The shape of an ndarray shows the size of each of its dimensions. In this case it is 3 rows by 1024 columns as I said. Note that the overall dataarray is an ndarray and any given row is also. So, list and tuple types are replaced by ndarray types. Also, NumPy has its own number types such as numpy.float64 instead of the built-in int and float. Because an ndarray holds a single element type, the integer day and hour values were converted to numpy.float64 along with the CPU percentages.

Now that our CPU data is in a NumPy array we can easily massage it to the form needed to plot points on our graph.

# do 24 * day of week + hour of day as x axis

xarray = (dataarray[0] * 24) + dataarray[1]

# pull cpu percentage into its own array

yarray = dataarray[2]

My idea for the graph is to combine the day of week and hour of day into the x axis by multiplying day of week by 24 and adding hour of the day to get the hours of the week from Sunday midnight to Saturday 11 pm. The nice thing about NumPy is that you can multiply the entire row of days of the week by 24 and add the entire row of hours of the day all in one statement. xarray is calculated in one line rather than with a loop, and it is done efficiently.

Here are some print statements and their output:

print("Shape of numpy x and y arrays")

print(xarray.shape)

print(yarray.shape)

Shape of numpy x and y arrays

(1024,)

(1024,)

Now we have two length 1024 ndarrays representing the x and y values of the points that we want to plot.
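The one-statement calculation of the x values can be compared with an explicit loop. This is only a sketch with four made-up sample points; the names day_of_week, hour_of_day, x_vectorized, and x_loop are assumptions and do not appear in the original script.

```python
import numpy as np

# Made-up sample values; the real rows come from the database query.
day_of_week = np.array([1.0, 1.0, 4.0, 7.0])
hour_of_day = np.array([0.0, 23.0, 12.0, 23.0])

# One vectorized statement, the same form as the xarray calculation
x_vectorized = (day_of_week * 24) + hour_of_day

# Equivalent element-by-element loop over plain Python floats
x_loop = [d * 24 + h for d, h in zip(day_of_week.tolist(), hour_of_day.tolist())]

print(x_vectorized.tolist())   # [24.0, 47.0, 108.0, 191.0]
print(x_loop)                  # [24.0, 47.0, 108.0, 191.0]
```

The smallest and largest results, 24 and 191, are the ends of the hour-of-the-week range that the x axis covers.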

So, we have used NumPy to get the data that we pulled from our Oracle database using cx_Oracle into a form that is ready to be graphed. Matplotlib works closely with NumPy and NumPy has some nice features for manipulating arrays of numbers.

Matplotlib

Now we get to the main thing I want to talk about, which is Matplotlib. Hopefully this is a clean and straightforward example of its use.

import matplotlib.pyplot as plt

...

# get figure and axes

fig, ax = plt.subplots()

The first step is to create a figure and axes. A figure is essentially the entire window, and an axes object is an x and y axis that you can graph on. You can have multiple axes on a figure, but for a simple graph like this, you have one figure and one axes.

# point_size is size of points on the graph

point_size = 5.0

...

# graph the points setting them all to one size

ax.scatter(xarray, yarray, s=point_size)

This actually graphs the points. A scatter plot just puts a circle (or other shape) at the x and y coordinates in the two arrays. I set all points to a certain size and figured out what size circle would look best by trying different values. Note that scatter() is a method of the Axes type object ax.

# add title

ax.set_title(database+" database CPU by day of week and hour of day")

# label the x and y axes

ax.set_xlabel("24 * Day of week (1-7) + Hour of day (0-23)")

ax.set_ylabel("CPU percent used")

These are more methods on ax. set_title() puts a title at the top center of the graph, and set_xlabel() and set_ylabel() add labels that describe the x axis and the y axis.

# add vertical red lines for days

for day_of_week in range(8):

    ax.axvline(x=(day_of_week+1)*24, color='red', linestyle='--', linewidth=1.0)

The previous lines are all I really needed to make the graph. But then I thought about making it more readable. As I said before, the x axis is basically the hour of the week ranging from 24 to 191. I thought some red lines marking the beginning and end of each day would make it more readable. This puts 8 lines at locations 24, 48,…,192. I set the linewidth to 1.0 and used the dashed line style to try to keep the lines from covering up the points. I think axvline means vertical line on Axes object.

# Calculate the y-coordinate for day names

# It should be a fraction of the range between the minimum and maximum Y values

# positioned below the lower bound of the graph.

# The minimum and maximum CPU varies depending on the load on the queried database.

lower_bound = ax.get_ylim()[0]

upper_bound = ax.get_ylim()[1]

yrange = upper_bound - lower_bound

fraction = .025

y_coord = lower_bound - (fraction * yrange)

xloc = 36

for day in ['Sun','Mon','Tue','Wed','Thu','Fri','Sat']:

    ax.text(xloc, y_coord, day, fontsize=8, color='red', ha='center', fontweight='ultralight')

    xloc += 24

I kept messing with the script to try to make it better. I didn’t want to make it too complicated because I wanted to use it as an example in a blog post. But then again, this code shows some of the kinds of details that you can get into. The text() method of ax just puts some text on the graph. I made it red like the dashed lines and tried to make the letters light so they wouldn’t obscure the main parts of the graph. The x coordinates were just the center of the word and essentially the middle of the day. The first day starts at x=24 so 12 hours later or x=36 would be halfway through the day, approximately. I just had a list of the three-character day names and looped through them bumping the x location up by 24 hours for each day.

But the y coordinate was more complicated. I started out just choosing a fixed location for y like -5. For one database this worked fine. Then I tried another database, and it was way off. The reason is that Matplotlib scales the y coordinates based on the graphed data. If your database’s cpu is always around 30% then the range of visible y coordinates will be close to that. If your database’s cpu varies widely from 0% to 100% then Matplotlib will set the scale wide enough so the entire range 0 to 100 is visible. So, to put the text where I wanted it, just below the y axis line, I needed to make it a percentage of the visible y range below the lowest visible value. The get_ylim() method shows the calculated lower and upper bounds of the y axis which were calculated based on the y values of the graphed points. I manually messed with the value for the variable fraction until it looked right on the screen. Then I ran the script with a variety of databases to make sure it looked right on all of them.
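The label placement can be tried out without a database or a graph. This is a minimal sketch, not the code from the script: the function day_label_positions and its parameters are hypothetical, and lower_bound and upper_bound stand in for the two values returned by ax.get_ylim().

```python
def day_label_positions(lower_bound, upper_bound, fraction=0.025,
                        first_center=36, step=24):
    """Return (x, y, name) for each day label.

    lower_bound and upper_bound play the role of ax.get_ylim().
    The function name and signature are illustrative only.
    """
    days = ['Sun', 'Mon', 'Tue', 'Wed', 'Thu', 'Fri', 'Sat']
    # Place the text a fixed fraction of the visible range below the axis
    y_coord = lower_bound - fraction * (upper_bound - lower_bound)
    return [(first_center + i * step, y_coord, day)
            for i, day in enumerate(days)]

# A database whose CPU stays in a narrow band, and one that spans 0 to 100
narrow = day_label_positions(20.0, 40.0)
wide = day_label_positions(0.0, 100.0)

print(narrow[0])   # (36, 19.5, 'Sun')
print(wide[-1])    # (180, -2.5, 'Sat')
```

A fixed y value such as -5 would sit far below the axis for the narrow range, while the fraction-based value stays just under the lower bound in both cases.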

# show graph

plt.show()

Lastly you just show the graph. Note that like the subplots() call this is not a method of an axes or figure object but just a matplotlib.pyplot call. Everything else in this example is a call to a method of the ax Axes type object.

Conclusion

This post shows how to graph Oracle database data using Python libraries cx_Oracle, NumPy, and Matplotlib. It first shows how to pull Oracle data into Python’s native data structures like lists and tuples. Then it shows how to convert the data into NumPy’s ndarrays and manipulate the data so that it can be graphed. Lastly it shows how to use Matplotlib Axes object methods to graph the data and add useful elements to the graph such as labels, vertical lines, and text.

This is a simple example, and all the software involved is free, open-source, widely used, easy to install, and well-documented. Give it a try!

2025 Update

I am not a very good blogger. Only four posts in 2024. I will need to pick it up because I just increased my spend on AWS for this blog. This site kept going down and I finally spent a few minutes looking at it and found that it was running out of memory. I was running on a minimal t2.micro EC2 which has 1 virtual CPU and 1 gigabyte of memory with no swap. So, rather than just add swap I bumped it up to a t3.small and paid for a 3-year reserved instance. It was about $220, nothing outrageous. Worth it to me. I still have two years left on the t2.micro reserved instance that I was using for the blog so I need to find a use for it, but I don’t regret upgrading. I spent a little money to make the site more capable so now I must write more posts!

I have three Machine Learning books to work through. After finishing my edX ML class with Python I picked up a book that covered the same topics and used the same Python libraries and I have been steadily working through it: Machine Learning with PyTorch and Scikit-Learn. I am on chapter 9 and want to get up to chapter 16 which covers Transformers. My edX class got up to the material in chapter 15 so the book would add to what was taught in the class. Earlier chapters also expand on what was taught in the class. The cool thing about the book is the included code. Lots of nice code examples to refer to later. Even the Matplotlib code for the graphs could be very helpful to me. I’ve gotten away from using Matplotlib after using it in my PythonDBAGraphs scripts.

My birthday is the day after Christmas, so I get all my presents for the year at the end of December. I got two ML books for Christmas/Birthday. Probably the first I will dive into after I finish the PyTorch/Scikit-Learn book is Natural Language Processing with Python. This is available for free on the NLTK web site. I have the original printed book. I think once I get through the Transformers chapter of the PyTorch book it makes sense to look at natural language processing since that is what ChatGPT and such is all about. I have played with some of this already, but I like the idea of working through these books.

The second book that I got in December as a present seems more technical and math related, although the author claims to have kept the math to a minimum. It is Pattern Recognition and Machine Learning. This might be a slower read. It seems to be available for free as a PDF. ChatGPT recommended this and the NLTK book when I was chatting with it about my desire to learn more about Artificial Intelligence and Machine Learning.

I have all these conversations with ChatGPT about whether it makes sense for me as an Oracle database specialist to learn about machine learning. It assures me that I should, but it might be biased in favor of ML. Oracle 23ai does have AI in its name and does have some machine learning features. But it could just be a fad that blows over after the AI bubble bursts as many have before it. I can’t predict that. My current job title is “Technical Architect”. I work on a DBA team and I’m in the on-call rotation like everyone else, but my role includes learning about new technology. Plus, I think that I personally add value because of some of the computer science background I had in school and that I have been refreshing in recent years. Plus, I need to get some level of understanding of machine learning for my own understanding regardless of how much we do or don’t use it for my job. Just being a citizen of the world with a computer science orientation I feel is enough motivation to get up to speed on recent AI advancements. Am I misguided to think I should study machine learning?

Despite all this talk about AI, I am still interested in databases. I have this folder on my laptop called “Limits of SQL Optimization”. I had these grandiose ideas about writing interesting blog posts about what SQL could or couldn’t do without human intervention. Maybe SQL is a little like AI because the optimizer does what a human programmer would have to do without it. I’m interested in it all really. I like learning about how computer things work. I’ve spent my career so far working with SQL statements and trying to understand how the database system processes them. I thought about playing with MySQL’s source code. I downloaded it and compiled it but that’s about it. Plus, some day we will have Oracle 23ai and I’ll have to figure out how to support it in our environment, even if we do not use its AI features. Anyway, I’m sure I will have some non-AI database things to post about here.

To wrap up I think I may have some things to post on this blog in 2025, so it’s worth the extra expense to keep it running. Could be some more machine learning/artificial intelligence coming as I work through my books. I still have databases on the brain. Wish you all a great new year.

Bobby

Using PyTorch to Predict Host CPU from Date/Time

In my previous post I mentioned that I took a machine learning class based on Python and a library called PyTorch. Since the class ended, I have been working on a useful application of the PyTorch library and machine learning ideas to my work with Oracle databases. I do not have a fully baked script to share today but I wanted to show some things I am exploring about the relationship between the current date and time and database performance metrics such as host CPU utilization percentage. I have an example that you can download here: datetimeml2.zip

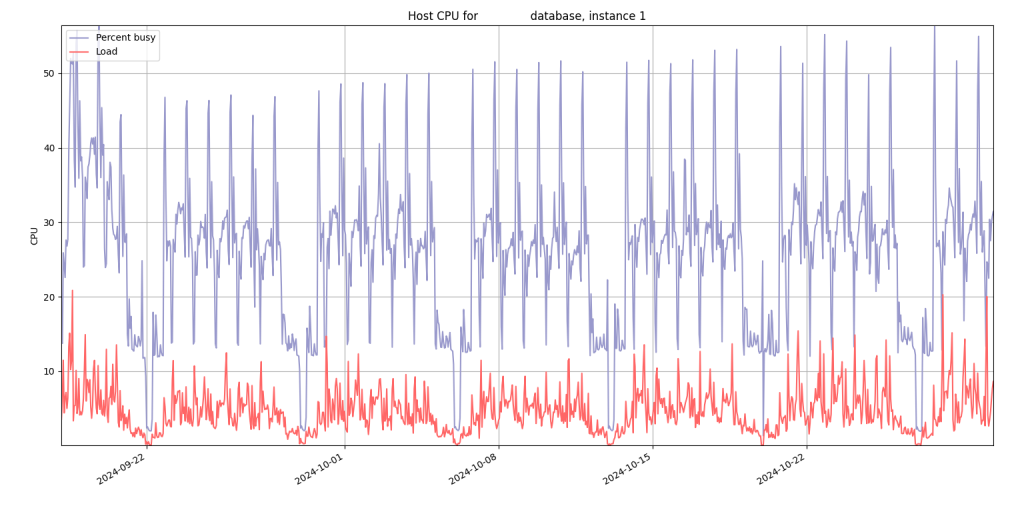

There is a relationship between the day of the week and the hour of the day and database performance metrics on many Oracle database systems. This is a graph from my hostcpu.py script that shows CPU utilization on a production Oracle database by date and hour of the day:

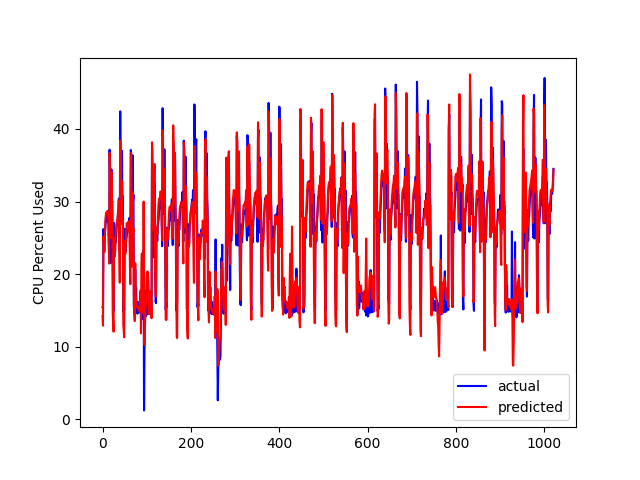

During the weekdays the CPU peaks at a certain hour and on the weekends, there are some valleys. So, I thought I would use PyTorch to model this relationship. Here is what the graph looks like of actual host CPU used versus predicted by PyTorch:

It’s the same source database but an earlier date. The prediction is close. I guess the big question after I got to this point was, so what? I’m not sure exactly what to do with it now that I have a model of the database CPU. I guess if the CPU is at 100% for an entire day instead of going up and down, I should throw an alert? What if CPU stays near 0% for a day during the week? It must be helpful to have a prediction of the host CPU but exactly how to alert on deviations from the predicted value is still a work in progress.

I thought it would be helpful to talk about the date and time inputs to this model. If you look at datetimeoscpu.sql in the zip it has this SQL for getting the date and time values:

select

to_char(sn.END_INTERVAL_TIME,'D') day_of_week,

to_char(sn.END_INTERVAL_TIME,'DD') day_of_month,

to_char(sn.END_INTERVAL_TIME,'DDD') day_of_year,

to_char(sn.END_INTERVAL_TIME,'HH24') hour_of_day,

to_char(sn.END_INTERVAL_TIME,'MM') month,

to_char(sn.END_INTERVAL_TIME,'Q') quarter,

to_char(sn.END_INTERVAL_TIME,'WW') week_of_year,

to_char(sn.END_INTERVAL_TIME,'W') week_of_month,

to_char(sn.END_INTERVAL_TIME,'YYYY') year,

...

I ended up ignoring year because it is not a cyclical value. The rest have a range like 1 to 7 for day of week or 1 to 31 for day of month. Having all eight of these is probably overkill. I could just focus on day of week and hour of day and forget the other six. We have 6 weeks of AWR history so I’m not sure why I care about things like month, quarter, day of year because I don’t have multiple years of history to find a pattern.

My input data looks like this:

4 17 199 03 07 3 29 3 2024 15.4210261

4 17 199 04 07 3 29 3 2024 15.5799532

4 17 199 05 07 3 29 3 2024 26.2080227

4 17 199 06 07 3 29 3 2024 24.1532019

4 17 199 07 07 3 29 3 2024 23.2947767

4 17 199 08 07 3 29 3 2024 25.5198717

4 17 199 09 07 3 29 3 2024 26.029638

4 17 199 10 07 3 29 3 2024 27.1160204

4 17 199 11 07 3 29 3 2024 27.7076496

Each line represents an AWR snapshot. The first 8 values are the cyclical date and time inputs, or X. The ninth value is the year, which is ignored. The last value is the host CPU utilization percentage, or Y. The point of the program is to create a model based on this data that will take in the 8 values and put out a predicted CPU percentage. This code was used to make the predictions for the graph at the end of the train.py script:

predictions = model(new_X)

When I first started working on this script it was not working well at all. I talked with ChatGPT about it and discovered that cyclical values like hour of day would work better with a PyTorch model if they were run through sine and cosine to transform them into the range -1 to 1. Otherwise PyTorch thinks that an hour like 23 is far apart from the hour 0 when really, they are adjacent. Evidently if you have both cosine and sine their different phases help the model use the cyclical date and time values. So, here is the code of the function which does sine and cosine:

import numpy as np

def sinecosineone(dttmval,period):

    """

    Use both sine and cosine for each of the periodic

    date and time values like hour of day or

    day of month

    """

    # Convert dttmval to radians

    radians = (2 * np.pi * dttmval) / period

    # Apply sine and cosine transformations

    sin_dttmval = np.sin(radians)

    cos_dttmval = np.cos(radians)

    return sin_dttmval, cos_dttmval

def sinecosineall(X):

    """

    Column          Number

    day_of_week     7

    day_of_month    31

    day_of_year     366

    hour_of_day     24

    month           12

    quarter         4

    week_of_year    52

    week_of_month   5

    """

    ...

The period is how many are in the range – 24 for hour of the day. Here is the hour 23 and hour 0 example:

>>> sinecosineone(23.0,24.0)

(-0.25881904510252157, 0.9659258262890681)

>>> sinecosineone(0.0,24.0)

(0.0, 1.0)

Notice how the two values are close together for the two hours that are close in time. After running all my input data through these sine and cosine procedures they are all in the range -1.0 to 1.0 and these were fed into the model during training. Once I switched to this method of transforming the data the model suddenly became much better at predicting the CPU.
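One way to put a number on "close together" is to measure the straight-line distance between two encoded hours. This is a sketch that uses only the standard math module rather than the script's NumPy function; the helper names encode and encoded_distance are made up for the example.

```python
import math

def encode(value, period):
    """Map a cyclical value onto the unit circle as (sin, cos)."""
    radians = 2 * math.pi * value / period
    return math.sin(radians), math.cos(radians)

def encoded_distance(a, b, period):
    """Straight-line distance between two encoded values."""
    return math.dist(encode(a, period), encode(b, period))

print(abs(23 - 0))                              # 23: raw hours look far apart
print(round(encoded_distance(23, 0, 24), 3))    # 0.261: adjacent hours are close
print(round(encoded_distance(12, 0, 24), 3))    # 2.0: noon and midnight are opposite
```

Using both functions matters because sine alone gives the same value, zero, for hour 0 and hour 12, and the cosine is what separates them.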

You can play with the scripts in the zip. I really hacked the Python script together based on code from ChatGPT, and I think one function from my class. It isn’t pretty. But you are welcome to it.

I’m starting to get excited about PyTorch. There are a bunch of things you must learn initially but the fundamental point seems simple. You start with a bunch of existing data and train a model that can be used as a function that maps the inputs to the outputs just as they were in the original data. This post was about an example of inputting cyclical date and time values like time of day or day of week and outputting performance metrics like CPU used percentage. I don’t have a perfect script that I have used to great results in production, but I am impressed by PyTorch’s ability to predict database server host CPU percent based on date and time values. More posts to come, but I thought I would get this out there even though it is imperfect. I hope that it is helpful.

Bobby

Catching Myself up on AI: Useful Resources

In this post, I will describe the AI training resources that I am using in my quest to catch up with the current state of the art. My goal is to document the resources that I used for my own reference and to benefit others while explaining my reasoning along the way.

After studying AI back in the 1980s, I have not kept my AI skills up to date until recently when I started learning about newer things like ChatGPT. AI is in the news and every technical person should learn something about it for their own understanding and to help their career. Because I had a good overall understanding of the state of AI years ago, I think of it as “catching up” with the present-day standard. So, my approach makes sense for me given my history. But even for people who are not as old as I am or who don’t have the AI experience from their past the resources below could still be useful because of their overall quality.

Revisit AI Overview – 6.034

The first thing I did was watch the lecture videos for Patrick Winston’s 2010 MIT 6.034 class. I had used Winston’s textbook in my college AI class in the 1980s. So, I thought that a class taught by Winston might follow a similar outline to what I once knew about AI and help jog my memory and catch me up with what has changed in the last 30+ years. Just watching the lecture videos and not doing the homework and reading limited how much I got from the class, but it was a great high-level overview of the different areas of AI. Also, since it was from 2010 it was good for me because it was closer to present day than my 1980s education, but 14 years away from the current ChatGPT hoopla. One very insightful part of the lecture videos is lectures 12A and 12B which are about neural nets and deep neural nets. Evidently in 2010 Professor Winston said that neural nets were not that promising or could not do that much. Fast forward 5 years and he revised that lecture with two more modern views on neural nets. Of course, ChatGPT is based on neural nets as are many other useful AI things today. After reviewing the 6.034 lecture videos I was on the lookout for a more in-depth, hands-on class as the next step in my journey.

edX Class With Python – 6.86x

I had talked with ChatGPT about where to go next in my AI journey and it made various suggestions including books and web sites. I also searched around using Google. Then I noticed an edX class about AI called “Machine Learning with Python-From Linear Models to Deep Learning,” or “6.86x”. I was excited when I saw that edX had a useful Python-based AI class. I had a great time with the two earlier Python edX classes which I took in 2015. They were:

- 6.00.1x – Introduction to Computer Science and Programming Using Python

- 6.00.2x – Introduction to Computational Thinking and Data Science

This blog has several posts about how I used the material in those classes for my work. I have written many Python scripts to support my Oracle database work including my PythonDBAGraphs scripts for graphing Oracle performance metrics. I have gotten a lot of value from learning Python and libraries like Matplotlib in those free edX classes. So, when I saw this machine learning class with Python, I jumped at the chance to join it with the hope that it would have hands-on Python programming with libraries that I could use in my regular work just as the 2015 classes had.

Math Prerequisites

I almost didn’t take 6.86x because the prerequisites included vector calculus and linear algebra which are advanced areas of math. I may have taken these classes decades ago, but I haven’t used them since. I was afraid that I might be overwhelmed and not able to follow the class. But since I was auditing the class for free, I felt like I could “cheat” as much or as little as I wanted because the score in the class doesn’t count for anything. I used my favorite algebra system, Maxima, when it was too hard to do the math by hand. I had some very interesting conversations with ChatGPT about things I didn’t understand. My initial fears about the math were unfounded. The class helped you with the math as much as possible to make it easier to follow. And, at the end of the day, since I wasn’t taking this class for any kind of credit, the points don’t matter (like the Drew Carey improv show). What mattered was that I got something out of it. I finished the class this weekend and although the math was hard at times I was able to get through it.

How to Finish Catching Up to 2024

It looks like 6.86x is 4-5 years old so it caught me up to 2019. I have been thinking about where to go from here to get all the way to present day. Working on this class I found two useful Python resources that I want to pursue more:

- PyTorch – Neural Nets

- Hugging Face – Large Language Models

I walked away from both 6.034 and 6.86x realizing how important neural nets are to AI today and so I want to dig deeper into PyTorch which is a top Python library for neural nets originally developed by Facebook. With the importance of neural nets and the experience I got with PyTorch from the class it only makes sense to dig deeper into PyTorch as a tool that I can use in my database work. As I understand it Large Language Models such as those behind ChatGPT are built using tools like PyTorch. Plus, neural nets have many other applications outside of LLMs. I hope to find uses for PyTorch in my database work and post about them here.

Large Language Models with Hugging Face

Everyone is talking about ChatGPT and LLMs today. I ran across the Hugging Face site during my class. I can’t recall if the class used any of the models there or if I just ran across them as I was researching things. I would really like to download some of the models there and play with updating them and using them. I have played with LLMs before but have not gotten very far. I tried out OpenAI’s API programming doing completions. I played with storing vectors in MongoDB. But I didn’t have the background that I have now from 6.86x to understand what I was working with. If I can find the time, I would like to both play with the models from Hugging Face and revisit my earlier experiments with OpenAI and MongoDB. Also, I think Oracle 23ai has vectors so I might try it out. But one thing at a time! First, I really want to dig into PyTorch and then mess with Hugging Face’s downloadable models.

Recap

I introduced several resources for learning about artificial intelligence. MIT’s OCW class from 2010, 6.034, has lecture videos from a well-known AI pioneer. 6.86x is a full-blown online machine learning class with Python that you can audit for free or take for credit. Python library PyTorch provides cutting edge neural net functionality. Hugging Face lets you download a wide variety of large language models. These are some great resources to propel me on my way to catching up with the present-day state of the art in AI, and I hope that they will help others in their own pursuits of AI understanding.

Bobby

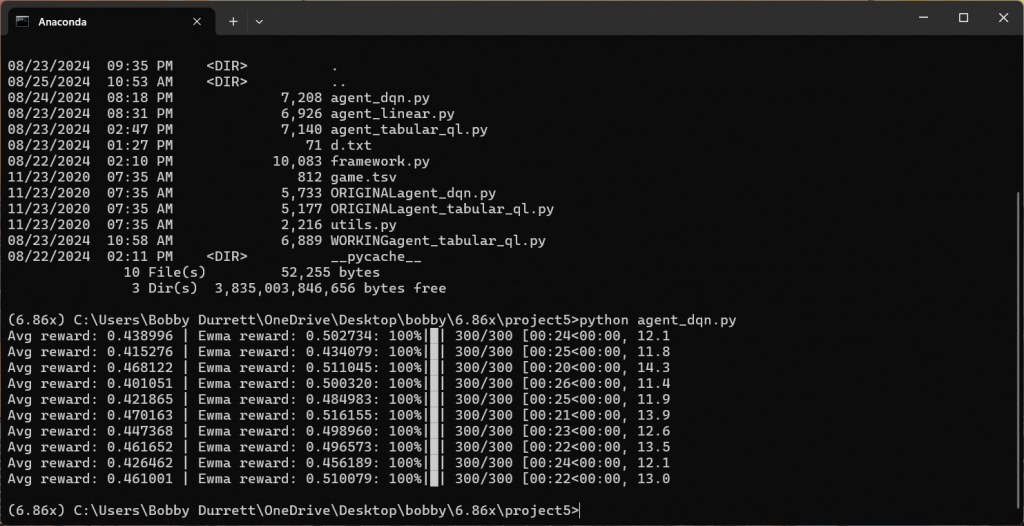

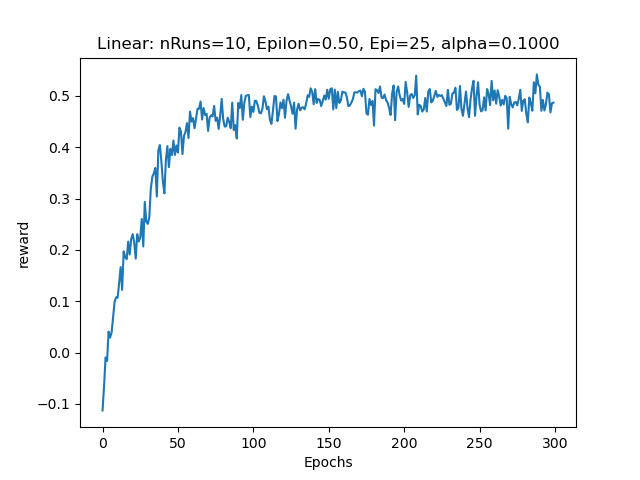

P.S. Here are a couple of fun screenshots from the last project in my 6.86x class. Hopefully it won’t give too much away to future students.

This is a typical run using PyTorch to train a model for the last assignment.

This is the graphical output showing when the training converged on the desired output.

Simple MySQL Range Scan Test

I was trying to tune a MySQL query this week. I ran the same query against Oracle with the same data and got a much faster runtime on Oracle. I couldn’t get MySQL to do a range scan on the column that Oracle was doing it on. So, I just started barely scratching the surface with a simple test of when MySQL will use an index versus a full table scan in a range query. In my test MySQL always uses an index except on extreme out of range conditions. This is funny because in my real problem query it was the opposite. But I might as well document what I found for what it’s worth. I haven’t blogged much lately.

Here is my testcase and its output:

https://www.bobbydurrettdba.com/uploads/mysqlindexuserangequeries.zip

This is on 8.0.26 as part of an AWS Aurora MySQL RDS instance with 2 cores and 16 gigabytes of RAM.

I created a simple test table and put 10485760 rows in it:

create table test

(a integer NOT NULL AUTO_INCREMENT,

b integer,

PRIMARY KEY (a));

The value of b is always 1 and a ranges from 1 to 10878873.

This query uses a range query using the index:

select

sum(b)

from

test

where

a > -2147483648;

This query uses a full table scan:

select

sum(b)

from

test

where

a > -2147483649;

The full scan is slightly faster.

Somehow when you are 2147483650 units away from the smallest value of a the MySQL optimizer suddenly thinks you need a full scan.
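One detail that may be relevant: -2147483648 is the smallest value that MySQL’s signed INT type can hold, so the second query compares the column against a literal that no INT value can represent. Whether that is what changes the optimizer’s plan is a guess and not something this test proves. The arithmetic can be checked with a few lines of Python; the names INT_MIN and INT_MAX are just labels for this sketch.

```python
# Bounds of a signed 32-bit integer, the documented range of MySQL's INT type
INT_MIN = -(2 ** 31)
INT_MAX = 2 ** 31 - 1

# The two literals used in the test queries
for literal in (-2147483648, -2147483649):
    in_range = INT_MIN <= literal <= INT_MAX
    print(literal, "fits in INT" if in_range else "is outside INT")
```

This prints that -2147483648 fits in INT and that -2147483649 is outside INT.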

There are a million more tests I could do like things with a million variables, but I thought I might as well put this out there. I’m not really any the wiser but it is a type of test that might be worth mentioning.

Bobby

Archivelog Space Needed Python Script

I wrote a script called archivelogspace.py to help size our Oracle archive log filesystems to support replication tools such as Fivetran, DMS, or GoldenGate which need a certain number of hours or days of archive log history at all times. In many cases we had backups that removed all the archive logs after they had been backed up once. So, that is essentially zero hours of history. If we only backed up once per day, it really peaked out at 24 hours of history, but the minimum was zero. Our replication products need 24 hours minimum in some cases. In other cases, we needed different numbers of hours. Also, the log backups and deletes run multiple times a day and on different schedules on some systems.

I based archivelogspace.py on a simplistic idea which I know is not perfect but so far it has been helpful. So, I thought I would share it here in case others can benefit. I would love any feedback, suggestions and criticism of the approach and implementation. The idea was to query V$ARCHIVED_LOG and see how full the filesystem would get if these same logs came in at the same times but with different retention times and different archive log filesystem sizes. I could try different settings and see the highest percentage that the filesystem hit.

I think this approach is imperfect because the past behavior recorded in V$ARCHIVED_LOG may not represent future behavior very well as things change. Also, the way I wrote it I assumed that the archive logs are laid down instantaneously. That is, if the row in V$ARCHIVED_LOG has a COMPLETION_TIME of 2/24/2024 16:11:15 then I assume that the filesystem gets (BLOCKS+1)*BLOCK_SIZE bytes fuller in that second. I also assume that the backups instantly remove all the logs which are beyond the retention.
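The idea of replaying the logs under those two assumptions can be sketched in a few lines of Python. This is not the code from archivelogspace.py. The function max_percent_full, its arguments, and the sample data are all made up to illustrate the approach, and it assumes at least one log is supplied.

```python
from datetime import datetime, time, timedelta

def max_percent_full(logs, backups, fs_bytes):
    """Replay archive logs against a backup schedule.

    logs:     list of (completion_time, size_bytes)
    backups:  list of (time_of_day, retention_hours)
    fs_bytes: archive log filesystem size in bytes
    Returns (max_percent, time_of_max). Illustrative sketch only.
    """
    logs = sorted(logs)
    events = []
    # One backup event per schedule entry for every day the logs cover
    day = logs[0][0].date()
    last_day = logs[-1][0].date()
    while day <= last_day:
        for time_of_day, retention_hours in backups:
            events.append((datetime.combine(day, time_of_day), 0, retention_hours))
        day += timedelta(days=1)
    # Kind 0 is a backup, kind 1 is a log arrival
    events += [(when, 1, size) for when, size in logs]
    events.sort()

    on_disk = []                      # logs currently on the filesystem
    worst_pct, worst_when = 0.0, None
    for when, kind, value in events:
        if kind == 0:
            # Backup instantly removes logs older than the retention
            cutoff = when - timedelta(hours=value)
            on_disk = [entry for entry in on_disk if entry[0] >= cutoff]
        else:
            # Log instantly takes up its full size
            on_disk.append((when, value))
            pct = 100.0 * sum(size for _, size in on_disk) / fs_bytes
            if pct > worst_pct:
                worst_pct, worst_when = pct, when
    return worst_pct, worst_when

# Four 100-byte logs six hours apart, one backup a day keeping 6 hours
logs = [(datetime(2024, 2, 24, hour), 100) for hour in range(0, 24, 6)]
backups = [(time(12, 30), 6)]
print(max_percent_full(logs, backups, 1000))
```

In this toy run the filesystem peaks at 30 percent when the 12:00 log arrives, just before the 12:30 backup clears the two older logs.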

I tested the script using the current settings for the archive log filesystem size and log backup run times and retention settings. I just compared the output percent full with reality. It was close but not exact. So, when I went to apply this for real, I padded the filesystem sizes so the expected percent full was less than 50%. So far so good in reality. I would like to build an emergency script that automatically clears out old logs if the filesystem gets full but so far, I have not. We do have alerting on archive log filesystem space getting too full.

If you run the script, you can see the arguments:

Arguments: oracle-username oracle-password tns-name configfile-name

Config file is text file with this format:

archivelog filesystem size in bytes

number of backups per day

one line per backup with 24-hour:minutes:seconds archivlog-retention-hours

for example:

8795958804480

6

02:15:00 168

06:15:00 168

10:45:00 168

14:15:00 168

18:15:00 168

22:15:00 168

The output is something like this:

2024-02-20 08:55:57 1.67%

2024-02-20 09:10:02 1.68%

2024-02-20 10:00:29 1.69%

2024-02-20 11:00:20 1.7%

2024-02-20 11:37:32 1.7%

2024-02-20 12:01:17 1.68%

2024-02-20 12:09:05 1.68%

2024-02-20 12:43:53 1.69%

2024-02-20 12:55:52 1.69%

Max percent used archivelog filesystem: 46.15%

Date and time of max percent: 2023-12-24 11:52:17

When your archive log filesystem is too small the Max percent is over 100%.

It’s not perfect or fancy but it is available if someone finds it useful.

Bobby

p.s. The script uses cx_Oracle so you will need to install that.

User Privileges Script

I keep running into situations on Oracle databases where I need to dump out the privileges an Oracle user has. I have been just manually putting together SQL statements like:

select * from dba_role_privs where grantee='MYUSER';

select * from dba_sys_privs where grantee='MYUSER';

select * from dba_tab_privs where grantee='MYUSER';

select * from dba_users where username='MYUSER';

This captures the three kinds of grants the user could have in the first three queries and the last query just shows if the user exists and things like whether it is locked. Really this simple set of queries is good enough in most cases.

But I had also written a script that would show all the system and object grants that were included in the roles. Because you can have roles granted to roles, you must loop through all the roles until you get down to the base system and object privileges. I rewrote this logic from scratch several times until I finally convinced myself to make a script and save it on my GitHub site. The current version of the script is here:

The interesting part of the script is where we keep looping through the roles in table my_role_privs deleting each role and then inserting the role’s system, object, and role privileges into the my_sys_privs, my_tab_privs, and my_role_privs tables. Eventually you run out of roles to delete and the loop finishes. I guess this works because you cannot have a circular role grant situation:

SQL> create role a;

Role created.

SQL> create role b;

Role created.

SQL> grant a to b;

Grant succeeded.

SQL> grant b to a;

grant b to a

*

ERROR at line 1:

ORA-01934: circular role grant detected

In the past I have put a loop counter in the code just in case there was something circular or a really long chain of roles, but this version does not have it.
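The loop can be sketched in Python with plain dictionaries standing in for dba_role_privs, dba_sys_privs, and dba_tab_privs. This is only an illustration of the idea, not the SQL script itself. The function name `expand_privileges`, the `max_passes` guard, and the sample grants are all made up for the example.

```python
def expand_privileges(user, role_grants, sys_grants, tab_grants,
                      max_passes=1000):
    """Return (system privileges, object privileges) held directly or via roles.

    Each *_grants argument maps a grantee (user or role) to what was granted
    to it. These dictionaries are stand-ins for the dictionary views.
    """
    my_sys_privs = set(sys_grants.get(user, ()))
    my_tab_privs = set(tab_grants.get(user, ()))
    my_role_privs = set(role_grants.get(user, ()))

    passes = 0
    while my_role_privs:
        passes += 1
        if passes > max_passes:
            # Safety net like the loop counter mentioned above.
            raise RuntimeError("too many passes; possible circular role grant")
        # Delete one role, then insert everything that role was granted.
        role = my_role_privs.pop()
        my_sys_privs.update(sys_grants.get(role, ()))
        my_tab_privs.update(tab_grants.get(role, ()))
        my_role_privs.update(role_grants.get(role, ()))
    return my_sys_privs, my_tab_privs


# Hypothetical grants: MYUSER -> APP_ROLE -> READ_ROLE
role_grants = {"MYUSER": ["APP_ROLE"], "APP_ROLE": ["READ_ROLE"]}
sys_grants = {"MYUSER": ["UNLIMITED TABLESPACE"],
              "APP_ROLE": ["CREATE SESSION"]}
tab_grants = {"READ_ROLE": [("SELECT", "HR", "EMPLOYEES")]}

sys_privs, tab_privs = expand_privileges(
    "MYUSER", role_grants, sys_grants, tab_grants)
```

With the expanded set in hand, a check like `"CREATE SESSION" in sys_privs` tells you whether the privilege came through directly or through some chain of roles.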

To make the output useful I put it in three sections. The first section just has the direct grants and corresponds to the first three queries listed above.

Privileges granted directly to user MYUSER

Role privileges for user MYUSER

GRANTED_ROLE

--------------------

DBA

RESOURCE

System privileges for user MYUSER

PRIVILEGE

----------------------------------------

SELECT ANY TABLE

UNLIMITED TABLESPACE

Summarized table privileges for user MYUSER

OWNER PRIVILEGE COUNT(*)

-------------------- ---------------------------------------- ----------

SYS EXECUTE 1

Detailed table privileges for user MYUSER

PRIVILEGE OWNER TABLE_NAME

---------------------------------------- -------------------- -----------

EXECUTE SYS DBMS_RANDOM

I put counts of each type of object grant in case there was a bunch. I called them table privileges because the view is named dba_tab_privs, but I really should have called them object privileges because they can be grants on objects which are not tables.
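The summarized section is just the detailed rows grouped by owner and privilege. Here is a small sketch of that grouping in Python. The sample rows and the name `summarize` are made up for illustration, and the real script does this in SQL.

```python
from collections import Counter

# Hypothetical detailed rows: (privilege, owner, object_name)
detailed = [
    ("EXECUTE", "SYS", "DBMS_RANDOM"),
    ("SELECT", "OUTLN", "OL$"),
    ("SELECT", "OUTLN", "OL$HINTS"),
    ("SELECT", "OUTLN", "OL$NODES"),
]


def summarize(rows):
    """Count object grants per (owner, privilege), sorted like the report."""
    counts = Counter((owner, privilege) for privilege, owner, _ in rows)
    return sorted((owner, priv, n) for (owner, priv), n in counts.items())


summary = summarize(detailed)
for owner, privilege, count in summary:
    print(f"{owner:<20} {privilege:<40} {count:>10}")
```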

The second section has the output of the loop showing all the system and object privileges implied by the role grants as well as those granted directly to the user:

Privileges granted through a role or directly to user MYUSER

System privileges for user MYUSER

PRIVILEGE

----------------------------------------

ADMINISTER ANY SQL TUNING SET

ADMINISTER DATABASE TRIGGER

ADMINISTER RESOURCE MANAGER

...

Summarized table privileges for user MYUSER

OWNER PRIVILEGE COUNT(*)

-------------------- ---------------------------------------- ----------

AUDSYS EXECUTE 1

GSMADMIN_INTERNAL EXECUTE 1

OUTLN SELECT 3

SYS DELETE 11

SYS EXECUTE 169

SYS FLASHBACK 14

SYS INSERT 12

SYS READ 15

SYS SELECT 4759

...

Detailed table privileges for user MYUSER

PRIVILEGE OWNER TABLE_NAME

---------------------------------------- -------------------- ------------------------

DELETE SYS AUX_STATS$

DELETE SYS DBA_REGISTRY_SQLPATCH

DELETE SYS EXPIMP_TTS_CT$

DELETE SYS INCEXP

DELETE SYS INCFIL

...

I often use this to see if a user has CREATE SESSION either directly or through a role so that I will know whether the user can log in.

Lastly, I included a couple of details about the user at the end:

Account status, last password change for user ZBL6050

ACCOUNT_STATUS LAST_PASSWORD_CHNG

-------------------------------- -------------------

OPEN 2023-10-10 11:01:01

You need to give the user that runs userprivs.sql SELECT on sys.user$ to get the last password changed date and time. Otherwise, this query returns an error.

I mainly use this script to validate if a user has the correct permissions and if they can log in, so putting this information at the end in addition to the grant information above just fills in some details I would have to query anyway. I.e., Is the user locked? How long since they changed their password?

I thought about bringing down some statement about CREATE SESSION here. As it is written now, I have to visually scan the system privileges for CREATE SESSION to get the full picture on the user’s ability to log in. It might be nice to add a column “Has CREATE SESSION” to this screen.

There are probably fancier scripts and tools to do all this, but this is what I have been using and the reasoning behind it. Maybe it will be useful to others and a reminder to myself to document it here.

Bobby

MongoDB Atlas LangChain Vector Store

I was in a short programming contest at work for three days last week. My team got second place! We used a LangChain vector store in a MongoDB Atlas cluster so I thought I would at least document the links we referred to and videos I watched for others who are interested.

First I watched this video about LangChain:

I recommend watching the whole thing but the part about VectorStores starts at 25:22.

I got Python 3.9 set up in an Amazon EC2 instance and ran through these steps with the FAISS database:

https://python.langchain.com/docs/modules/data_connection/vectorstores/

Then I watched this video about the new MongoDB Atlas Vector Search feature:

This video is totally worth watching. I got a ton out of it. After watching the video I redid the VectorStore example but with MongoDB Atlas as the database:

https://python.langchain.com/docs/integrations/vectorstores/mongodb_atlas

I got into a discussion with ChatGPT about why they call them “vectors” instead of “points”. A vector is just an array or list of floating point numbers. In math this could be a point in some multi-dimensional space. ChatGPT didn’t seem to realize that software sometimes does use the vectors as vectors in the math sense. The MongoDB Atlas index we used relies on cosine similarity, which compares the angle between two vectors. So, each list of numbers must be treated as a vector that starts at a common point like all zeroes and points towards the point represented by the list.
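Here is a tiny worked example of cosine similarity in plain Python to show why the lists behave like vectors from the origin. It is a sketch of the math only, not how MongoDB Atlas implements its index, and the function name is my own.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b, both drawn from the origin."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Same direction, different length: the angle is zero, so similarity is about 1.
same_direction = cosine_similarity([1.0, 2.0], [2.0, 4.0])

# Perpendicular vectors: the dot product is zero, so similarity is 0.
right_angle = cosine_similarity([1.0, 0.0], [0.0, 3.0])
```

Notice that [1.0, 2.0] and [2.0, 4.0] are different points, but they score as nearly identical because they point in the same direction. That only makes sense if you think of them as vectors and not just points.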

When I created the search index in MongoDB Atlas I forgot to name it and it did not work since the code has the index name. For the sample the index name has to be langchain_demo. By default the index name is “default”.

LangChain itself was new to me. I watched the first video all the way through but there is a lot I did not use or need. I had played with OpenAI in Python already following the Python version of this quick start:

https://platform.openai.com/docs/quickstart/build-your-application

I edited the example script and played with different things. But I had never tried LangChain which sits on top of OpenAI and simplifies and expands it.

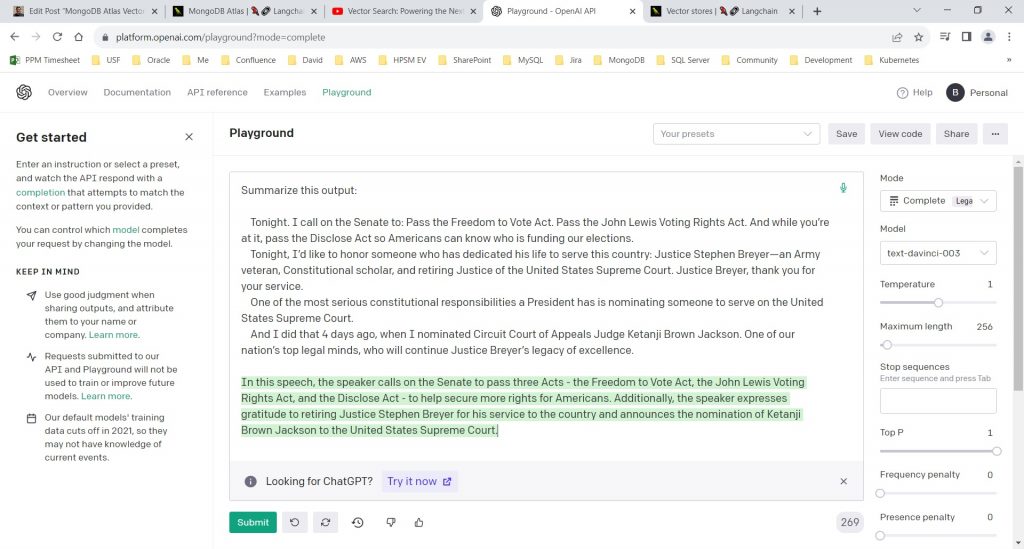

The project we worked on for the contest implemented the architecture documented at 28:57 in the MongoDB video above. If you look at the MongoDB Atlas vector store example this “information flow” would take the output from docsearch.similarity_search(query) and send it through OpenAI to summarize. If you take the piece of the President’s speech that is returned by the similarity search and paste it into OpenAI’s playground the result looks like this:

So, our programming project involved pulling in documents that were split up into pieces, retrieving a piece based on a similarity query using the vector store, and then running that piece through OpenAI to generate a readable English summary.
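The overall flow can be sketched without any external services. Everything in this example is a stand-in: `toy_embed` is a simple word count and not a real embedding model, the in-memory list replaces the MongoDB Atlas vector store, and `summarize` is a placeholder for the OpenAI call. It only shows the split, retrieve, and summarize steps in order.

```python
import math
from collections import Counter


def split_into_pieces(text, size):
    """Split text into pieces of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def toy_embed(text):
    """Stand-in embedding: a word-count vector (NOT a real model embedding)."""
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def answer(query, pieces, summarize):
    """Retrieve the most similar piece, then hand it to `summarize`."""
    q = toy_embed(query)
    best = max(pieces, key=lambda p: cosine(q, toy_embed(p)))
    return summarize(best)


document = ("Backups run nightly to tape. Archive logs fill the filesystem "
            "quickly. Patches are applied every quarter.")
pieces = split_into_pieces(document, 5)

# Placeholder for the model call that would write the English summary.
result = answer("Which filesystem holds the archive logs?", pieces,
                summarize=lambda piece: "SUMMARY: " + piece)
```

Passing `summarize` in as a function keeps the retrieval step separate from the model call, so either half can be swapped out or tested on its own.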

Install MySQL 5.7.38 from source on Oracle Linux 8

This is a follow up to my earlier post about installing various MySQL versions from source:

https://www.bobbydurrettdba.com/2019/05/22/mysql-source-installs-for-each-rds-version/

So, I am an Oracle database administrator. I have been for about 30 years starting with Oracle 7. But for the past few years we have been supporting MySQL in Amazon Web Services’ RDS version. Unfortunately, we have been forced to embrace the Aurora version of MySQL also which is proprietary to Amazon and does not have the full source code. But we still have several vanilla MySQL RDS instances that we support.

Working with Oracle for so many years I have tried to learn about its internals – how does it really work? This is difficult because I do not have access to Oracle’s source code and even if I did, I probably do not have enough years remaining in my life to ever fully understand it. Still, the idea of working with the MySQL community edition version that we have the full source code to has always intrigued me. This is similar to my fascination with the antique computer game Nethack that I play in a character-based mode exploring a dungeon and fighting monsters. It is a good game, but more importantly, I have the full source code.

Who knows? Maybe when we are working with MySQL we will run across a bug, and I will be able to dig into the part of the code that has the bug and it will help me figure out how to solve the problem. With Oracle it is always a guess what is going on. It can be an educated guess based on what Oracle reveals through various traces and logs. But maybe even though I do not have the time and motivation to be a hardcore MySQL internals developer there could be a situation where having the source code will help.

So, that leads me to want to download the exact version of the MySQL source code that we are using on AWS RDS and compile it, link it, install it on a test VM on the chance that someday I will need a working test MySQL database of the same version as one that is having a problem in production.

Things have changed since my 5/22/2019 post about setting up this kind of environment. At that time, I was working with an Oracle Linux 7 VM running on my work laptop with its firewalls, Zscaler, and everything else between my VM and the internet. Today I am using Oracle Linux 8 running on a VM on a personal laptop which is on my home network so there is nothing in the way of my downloading things from the internet like Linux rpm packages. One of the side effects of COVID-19 is that I am working from home full time. Also, not due to COVID, my middle daughter moved out leaving her bedroom open to be converted to an office. So, I have two “desks” set up with two laptops and big monitors on both. My home laptop sits to my left and I can run VirtualBox VMs on it without being on our corporate network. This is great for testing where I just need to set up a technology and I do not need or even want access to something on our corporate network.

So, with all this prelude let me tell you some of the things I had to do to get the MySQL 5.7.38 source code compiled on my Oracle Linux 8 VM.

I cloned an OEL 8 VM that I already had and then updated the packages. I think I used yum instead of dnf which was dumb, but it worked.

Once I had an up-to-date OEL 8 environment I had to get the source tree for MySQL with the right commit point for 5.7.38. I was following this document:

https://dev.mysql.com/doc/refman/5.7/en/installing-development-tree.html

I ran these git commands:

git clone https://github.com/mysql/mysql-server.git

cd mysql-server

git checkout 5.7

git pull

This gets 5.7.38:

git checkout c94ce78

I installed Boost and CMake as I did in the earlier post. I got the rest of the development tools like gcc, make, and bison in place using this dnf command:

dnf groupinstall "Development Tools"

Then I had to get the cmake command to work:

cmake . -DWITH_BOOST=/home/bobby/boost_1_59_0

I had to install several packages before I could get this to run without errors. I had to enable the “CodeReady Builder” repository in the file oracle-linux-ol8.repo:

[root@localhost yum.repos.d]# diff oracle-linux-ol8.repo oracle-linux-ol8.repo.06212023

20c20

< enabled=1

---

> enabled=0

[ol8_codeready_builder]

name=Oracle Linux 8 CodeReady Builder ($basearch) - Unsupported

baseurl=https://yum$ociregion.$ocidomain/repo/OracleLinux/OL8/codeready/builder/$basearch/

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-oracle

gpgcheck=1

enabled=1

Once that repository was enabled, I was able to add the needed packages.

dnf install ncurses-devel

dnf install libtirpc-devel

dnf install rpcgen

Eventually I realized that after messing with adding the new repository and packages I needed to go back and clean everything up and run cmake again:

make clean

rm CMakeCache.txt

cmake . -DWITH_BOOST=/home/bobby/boost_1_59_0

make

su -

make install

Other than that, it is just the normal steps to create the database and run it, which I think is documented in my earlier post and in the MySQL docs.
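If the package installs fail, one thing worth confirming is that the CodeReady Builder section really has enabled=1. Here is a minimal sketch that checks this with Python's configparser, since the .repo file is in INI format. The function name `repo_enabled` is made up, and the sample text is copied from the section shown above instead of being read from /etc/yum.repos.d.

```python
import configparser


def repo_enabled(repo_text, section):
    """Return True if the given repo section has enabled=1."""
    # interpolation=None so configparser never tries to expand values.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(repo_text)
    return parser.get(section, "enabled", fallback="0").strip() == "1"


sample_repo = """\
[ol8_codeready_builder]
name=Oracle Linux 8 CodeReady Builder ($basearch) - Unsupported
baseurl=https://yum$ociregion.$ocidomain/repo/OracleLinux/OL8/codeready/builder/$basearch/
gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-oracle
gpgcheck=1
enabled=1
"""

is_enabled = repo_enabled(sample_repo, "ol8_codeready_builder")
```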

I thought it couldn’t hurt to document the things I had to do if nothing else for myself. I use this blog as a reference for myself, so it is not just something for other people to read. Anyway, I’m glad I could get this down and maybe someone else will benefit.

Bobby

Full Database Restore and Recovery