Jan Kettenis

Updated: 1 month 1 week ago

OIC: Identity Propagation In Structured Process

When a process calls a service you sometimes have a requirement that some user identity needs to be propagated to the service call. This article describes how you can propagate the identity (but alas not the principal) of the user on behalf of whom a service call is executed.

Updated on June 22 to add a missing, last step.

When calling a service in a structured process you sometimes must pass on the identity of the user that called the service. This could be the case when that service call is done to a SaaS application and it is required to track on behalf of whom that service is called. The identity (user name) only is not enough when authentication must happen using the principle (security token) of the user, but there are applications that can handle this using some combination of a system user (or client id plus secret) with an on behalf of user. And there are situations where having an on behalf of user only is enough, like when storing data in a database table with audit columns (you don’t want all the end users also to be database users so passing on the user’s principle to the DB would not make sense).

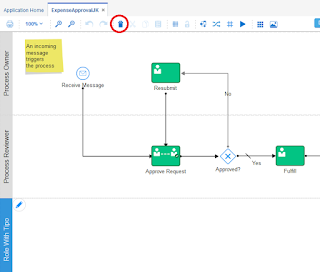

It is not always trivial who that on behalf of user should be. Take for example the following process model:

Now how to achieve this? In short:

In Detail I work with one single process payload data object as much as possible. Let’s call that processPayload. You can add an element to that called onBehalfOfuser. In the Start event you can instantiate that with the creator predefined variable:

In the output mapping of every Human Task map the execData.systemAttributes.updatedBy.id to the processPayload.onBehalfOfUser:

In case of an OIC Integration (or ORDS REST Handler) you can pass that on as a custom header element. In case of an Integration you configure that in the trigger activity.

In case of an ORDS REST Handler you can configure that as an HTTP header parameter:

When calling the service, you map the payload.onBehalfOfUser to the header parameter, which either will have the value of the creator of the process, or that user id of the last Human Task that has been executed.

Updated on June 22 to add a missing, last step.

When calling a service in a structured process you sometimes must pass on the identity of the user that called the service. This could be the case when that service call is done to a SaaS application and it is required to track on behalf of whom that service is called. The identity (user name) only is not enough when authentication must happen using the principle (security token) of the user, but there are applications that can handle this using some combination of a system user (or client id plus secret) with an on behalf of user. And there are situations where having an on behalf of user only is enough, like when storing data in a database table with audit columns (you don’t want all the end users also to be database users so passing on the user’s principle to the DB would not make sense).

It is not always trivial who that on behalf of user should be. Take for example the following process model:

- On the top a flow implementing the 4-eye principle. The first user does something after which the second user reviews it. Which identity should be passed on, that of the first or the second user?

- Below that a flow where the user activity is optional. When that user activity is not executed, what identity should be passed on?

- After that an exception flow to handle a fault with calling the service. This is handled by some Applications Administrator that may choose to retry. Which identity should be passed on, that of the Process Owner or the administrator?

Now how to achieve this? In short:

- In the Start event of the process set some “user” payload element to the creator of the process.

- Whenever a Human Task is finished, replace that with the id of the user that did that.

- When the service is called, pass it on as a custom header element.

In Detail I work with one single process payload data object as much as possible. Let’s call that processPayload. You can add an element to that called onBehalfOfuser. In the Start event you can instantiate that with the creator predefined variable:

In the output mapping of every Human Task map the execData.systemAttributes.updatedBy.id to the processPayload.onBehalfOfUser:

In case of an OIC Integration (or ORDS REST Handler) you can pass that on as a custom header element. In case of an Integration you configure that in the trigger activity.

In case of an ORDS REST Handler you can configure that as an HTTP header parameter:

Finally, to make the Integration backward compatible you can use an choose-when-otherwise construction to default the identity with the invokedBy meta data element:
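The bookkeeping described above can be sketched outside of OIC as follows. This is only a minimal Python illustration, not OIC configuration: the function names, the dictionary shapes and the header name `X-On-Behalf-Of-User` are assumptions made for the example. Only `creator`, `execData.systemAttributes.updatedBy.id`, `onBehalfOfUser` and `invokedBy` come from the text.

```python
# Hypothetical header name, chosen for this sketch only.
HEADER_NAME = "X-On-Behalf-Of-User"


def start_process(creator: str) -> dict:
    """Start event: initialise the payload with the creator of the process."""
    return {"onBehalfOfUser": creator}


def complete_human_task(process_payload: dict, exec_data: dict) -> dict:
    """Human Task output mapping: replace the identity with the user who updated the task."""
    updated_by = exec_data["systemAttributes"]["updatedBy"]["id"]
    return {**process_payload, "onBehalfOfUser": updated_by}


def build_headers(process_payload: dict) -> dict:
    """Service call: pass the identity on as a custom header."""
    return {HEADER_NAME: process_payload["onBehalfOfUser"]}


def resolve_identity(headers: dict, invoked_by: str) -> str:
    """Integration side: default to invokedBy when the header is missing or empty."""
    return headers.get(HEADER_NAME) or invoked_by


payload = start_process("alice")
payload = complete_human_task(payload, {"systemAttributes": {"updatedBy": {"id": "bob"}}})
print(resolve_identity(build_headers(payload), invoked_by="svc_user"))  # bob
print(resolve_identity({}, invoked_by="svc_user"))  # svc_user
```

The last function mirrors the choose-when-otherwise idea: callers that do not send the header yet still get a sensible identity.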

OIC: How to Find Human Task by Correlation and How to Abort a Parallel Task

This article explains how you can find an instance of a Human Task of a process instance in the Oracle Integration Cloud (OIC) without knowing its task number, and how you can use that, for example, to withdraw a parallel task. You can use the same mechanism for other use cases as well, like getting a specific task instance for a specific process instance in a custom Workspace.

When a Human Task is scheduled in a process there is no out-of-the-box way for the process instance to “know” its task number, because scheduling a task concerns an asynchronous call (so you don’t get an immediate response with the task number). So, although the task number is visible in the process flow trace (as shown below), the process instance itself does not know it.

The sample process below has two parallel tasks. The outcome of Parallel Task 2 is either CONTINUE or DONE. When DONE, Parallel Task 1 must be withdrawn. I will use this as a use case to illustrate how setting some “correlation id” on a task can be used to achieve that.

Those of you who remember the on-premise BPM Suite may be aware of the Update Task activity you could use to do just that, but such an activity does not exist in OIC. Instead you will have to use the PUT operation of the /ic/api/process/v1/tasks/{id} API. But as you can see this requires an id, which is the task number that you don’t have. To get that you can use the GET operation on /ic/api/process/v1/tasks first. However, there can be many instances of the same process, implying many instances of Parallel Task 1, so how to find the one you are looking for?

The key is that you must map some unique key to the task when scheduling Parallel Task 1 so that you can use the GET tasks operation to query tasks on that key. The GET supports a keyword query parameter to do this. You can include the unique key in the task title (which is indexed and used in the search by keyword), but that clutters the task title with some technical id.

Fortunately, Human Tasks have a specific request element you can use to do just that, which is the identificationKey. In the example below I have mapped some idPrefix that is unique for the process instance, and postfixed that with “-1” to make the identificationKey unique over all Human Task instances.

Now in the Get Task activity I use the GET operation to search tasks by keyword equal to my unique key:
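As a rough sketch of what that request looks like, the following Python snippet builds the identificationKey and the query URL. The host name and the example prefix are placeholders I made up; only the path /ic/api/process/v1/tasks, the keyword parameter and the “-1” postfix come from the text. It builds the URL only and does not call the API.

```python
from urllib.parse import urlencode

# Hypothetical host; replace with the host of your own OIC instance.
BASE_URL = "https://example-oic-host/ic/api/process/v1/tasks"


def identification_key(id_prefix: str, postfix: str = "-1") -> str:
    """Combine a prefix that is unique per process instance with a per-task postfix."""
    return f"{id_prefix}{postfix}"


def tasks_by_keyword_url(keyword: str) -> str:
    """Build the GET URL that searches tasks by keyword (URL-encoded)."""
    return f"{BASE_URL}?{urlencode({'keyword': keyword})}"


key = identification_key("ORD4711")
print(tasks_by_keyword_url(key))
```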

The response is a list of tasks with an “items” element that will contain the (one) task instance I’m interested in. I created the Tasks Business Type for the response of the API. To get the instance of the task I will have to map the “items” of that response to an array of items first, for which I created the TaskItems Business Type, and then map the first item in the array to a Task Business Type I created. So, Tasks -> TaskItems -> Task as shown below:

The mapping from the response of the API to my TaskItems is done using a Transformation. As I’m only interested in the task number I have left all other elements out of the TaskItems, except for the state:

To map the first element of the TaskItems to a variable of my Task Business Type I also use a Transformation:

Now I have hold of the task number, so I can call the PUT operation to withdraw the task in the Withdraw Task activity:

When I start an instance of this process and go to the Workspace I will see both tasks:

I can then submit the instance of Parallel Task 2 by choosing DONE.

And as a result, the instance of Parallel Task 1 is withdrawn and the process ends:

In a real process application, I would have made this a bit more robust and would check whether the Get Tasks returns a task instance and whether the state of that task is ASSIGNED. I probably would even create a (reusable) Integration to withdraw any task from any process by identificationKey. But you get the drift.
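The more robust check mentioned above could look roughly like this in Python. The field names `number` and `state` are assumptions about the shape of the response (the text only mentions an “items” element, a task number and a state), so treat this as a sketch rather than the actual API contract.

```python
from typing import Optional


def find_assigned_task_number(tasks_response: dict) -> Optional[int]:
    """Return the number of the first ASSIGNED task in the response, or None.

    Handles a missing or empty "items" element, so the caller can decide
    to skip the withdraw call instead of failing.
    """
    for item in tasks_response.get("items") or []:
        if item.get("state") == "ASSIGNED":
            return item.get("number")
    return None


# Sample response, shaped after the description in the text (field names assumed).
sample = {"items": [{"number": 200123, "state": "ASSIGNED"}]}
print(find_assigned_task_number(sample))        # 200123
print(find_assigned_task_number({"items": []}))  # None
```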

OIC: When and How to Create an Integration to Call a Service from a Process?

With the Oracle Integration Cloud, when you have to call a service from a Process you can choose to call an external service directly or you can put an Integration in between. This article gives some guidelines on why you may want to do the latter, and how to avoid a pitfall that is easy to step into.

To call a service you have to import the WSDL with its XSDs. With that, Business Types are auto-generated for all complexTypes in that XSD. Recently I was refactoring a case where this resulted in some 220 (!) Business Types being generated from 1 single external service, of which only a few were actually used. Granted, it concerned a service with a very complex interface, but for some reason all the external SOAP services we have to consume are moderately to very complex and easily generate 50+ Business Types. Not only that, they also use relatively long namespaces. Can you imagine what will happen when you have to call 5 of these services from the same Process application! You can barely see the forest for the trees, and you may find it pretty difficult to identify the correct Business Type to use for your request.
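To get a feeling for how many Business Types an import might produce, you could count the complexTypes in the XSD up front. The following Python sketch does that for a small, made-up schema. It only counts named complexTypes, and whether OIC generates exactly one Business Type per named complexType is an assumption on my side.

```python
import xml.etree.ElementTree as ET
from typing import List

XS = "{http://www.w3.org/2001/XMLSchema}"

# Made-up sample schema, for illustration only.
SAMPLE_XSD = """
<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:complexType name="Customer">
    <xs:sequence>
      <xs:element name="name" type="xs:string"/>
      <xs:element name="address" type="Address"/>
    </xs:sequence>
  </xs:complexType>
  <xs:complexType name="Address">
    <xs:sequence>
      <xs:element name="city" type="xs:string"/>
    </xs:sequence>
  </xs:complexType>
</xs:schema>
"""


def named_complex_types(xsd_text: str) -> List[str]:
    """Return the sorted names of all named complexTypes in the schema text."""
    root = ET.fromstring(xsd_text.strip())
    return sorted(
        ct.get("name") for ct in root.iter(f"{XS}complexType") if ct.get("name")
    )


types = named_complex_types(SAMPLE_XSD)
print(len(types), types)  # 2 ['Address', 'Customer']
```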

The following example shows the selection list with the types to choose from when creating a data object for one of the simplest cases we have.

Another issue might be that your Process is tightly coupled to the external service. When its interface changes you will have to re-import the WSDL. There is a risk that it will not be backward compatible, which may result in a situation where you first have to completely remove the existing WSDL with all its resources, but can't do so until you have removed all references to it from data objects (variables) that were defined using those Business Types. That can become quite a challenge.

All this can to a great extent be prevented by creating an Integration as a proxy between the external service and the Process application. But you will solve nothing when you use the same WSDL for both the Invoke and the Trigger Connection. You must take the extra step to create another WSDL with an XSD for the Trigger Connection, which offers an interface that is as simple as possible for the Process to consume. This is nothing less than an example of applying the basic principle of decoupling.

What I personally do is start from the parent element of the request and response type, copy the complex types I need from the XSD of the target service, snip all elements I don't need, simplify the data types (e.g. change normalizedString to string), and remove all attributes I don't need or replace those that I do need by an element. I work my way down to the child elements as far as required, and then create a Trigger Connection based on the simplified WSDL to be used for the Integration that will be consumed by the Process, as shown in the following picture.

In the example of the 220 Business Types I mentioned before, I was able to reduce that to just 22 Business Types.

OIC Integration: defining and using constants

For integrations there are two ways to define constants in the Oracle Integration Cloud:

- Lookups

- Variables

Lookups are primarily meant to support mapping of values from one domain to another. For example, one domain has a country code using two letters ("NL") whereas another domain uses three letters ("NLD"). The lookup can then be used to "translate" the value from one to the other ("NL" <-> "NLD"). The same feature can also be used to support configurable constants by providing a list of name-value pairs. For example, in the following SMColors lookup two different name-value pairs have been stored, "YELLOW" with value "yellow", and "BLUE" with value "blue":

In an integration you can use this lookup to get the value by name using an XPath lookup function. As I will show hereafter, there are two different XPath expressions, each being used in a different context.

Variables are set in an Integration using the Assign activity. You can define a variable with a specific name and a value. You can also use a combination of the two. In the following example you see the variable "red" being defined with value "red", and the variable "blue" being initialized using an XPath expression that in turn gets the value from the lookup name-value pair with name "BLUE":

Now let's have a look at how this can be used in a Mapper activity. In the following picture you see three different elements being mapped from respectively the lookup, the variable and the combination of both:

The response of my service looks as follows:

    {
      "statusFromLookup": "yellow",
      "statusFromVariable": "red",
      "statusFromBoth": "blue"
    }

Note that the XPath expression used to initialize the variable is different from the one used in the Mapper activity. Which expression to use depends on whether the lookup function is called from XSLT, as the Mapper activity does, or not.

When used in a Mapper activity use an expression like the following:

dvm:lookupValue ("tenant/resources/dvms/SMColors", "Name", "YELLOW", "Value", "not found")

Otherwise use an expression like this:

dvm:lookupValue('oramds:/apps/ICS/DVM/SMColors.dvm','Name','BLUE','Value','not found')
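Both expressions take their arguments in the same order: the lookup location, the source column, the source value, the target column and a default. To make that argument order concrete, here is a small Python model of the behaviour. It is an illustration only, not how OIC implements the function; the function name and the in-memory table are made up for the example.

```python
from typing import Dict, List

# In-memory stand-in for the SMColors lookup from the example.
SM_COLORS: List[Dict[str, str]] = [
    {"Name": "YELLOW", "Value": "yellow"},
    {"Name": "BLUE", "Value": "blue"},
]


def lookup_value(rows: List[Dict[str, str]], source_column: str, source_value: str,
                 target_column: str, default: str) -> str:
    """Return target_column of the first row whose source_column matches, else default."""
    for row in rows:
        if row.get(source_column) == source_value:
            return row.get(target_column, default)
    return default


print(lookup_value(SM_COLORS, "Name", "YELLOW", "Value", "not found"))  # yellow
print(lookup_value(SM_COLORS, "Name", "RED", "Value", "not found"))     # not found
```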

Now when to use what? Some pointers:

- To change a variable in an integration, you will have to modify the integration and reactivate it. You can do that as a new, minor version to prevent downtime. This is not a task for just any type of OIC user.

- The threshold for someone to change a Lookup is lower. Lookups are more suitable for values that need to be changed at run time (you don't have to reactivate the integrations that use them), for example by an Application Administrator.

- It is easy to make a mistake in the XPath expression. So when you have to use it in an integration multiple times, consider using the combination, as mapping a variable is simple.

- Execution of an XPath expression has a small performance penalty, but a penalty nonetheless.

For Structured and Dynamic Process there also is more than one way, which I will discuss in a later blog post. None of the above solutions support "versioned" parameters. I will discuss how to do that as well.

OIC Process: Auto-Mapping Elements in the Data Mapper

Hereafter I describe a 'hidden feature' regarding data mapping in Oracle Integration Cloud - Process.

When mapping data in the Oracle Integration Cloud (or OIC for short) you sometimes discover that elements you want to map from are not always available as a source on the left-hand side. As I recently found out (thank you Eduardo Chiocconi!) that does not necessarily mean that they are not available for mapping.

An example might be including some elements of the request in the title of the process instance. Until now I always did this by including a Data Mapper right after the Start Event. However, I could have achieved the same in the Start Activity itself.

The following figure shows how I map the value of the "title" predefined variable to itself, concatenated with some values from the request (customer name and id):

As you can see in the Processes tab of the Workspace both the title and the concatenated values are visible. Saves you a Data Mapper activity :-)

Microprocess Considerations

In this article I discuss some considerations when applying the Microprocess Architecture, and how those can impact the design of the process.

This article has been updated on November 11 2019 after feedback from Luc Gorissen, and on December 28 after feedback from Sushil Shukla.

As pointed out in the article about the Microprocess Architecture, one should carefully consider if this is the right architecture for the process application to create. Considering the implications (for example one single business process can end up comprising multiple process applications) it should not be considered to be a "one-size fits all" kind of architecture.

Guidelines that can help you to determine if and where it is a fit are the following:

- Do process instances have a longer time span during which one must be able to incorporate changes to the process (in one way or another)?

- Is the process expected to change often?

- Does it concern a complex business process, where business functions can be executed isolated from each other (and with that potentially can be reused)?

- Are multiple business units involved in the flow?

If you answered one or more of these questions with yes, it probably is a good candidate. If not, probably not. As I will discuss hereafter you do not have to implement the Microprocess Architecture on all parts of the application. There are also alternative solutions like abandoning a running instance and handling it manually, or restarting a process instance. Such alternatives are out of scope of this article though. Maybe I will discuss them at some point in the future.

Before explaining the rationale behind these criteria, let me first explain that instance migration refers to moving a running instance of a process from one version of the application to the next. For this to be possible, the next version needs to be backward compatible with the one in which the instance runs. At the time of writing of this article, the Oracle Integration Cloud (OIC) does not yet support instance migration, but will soon. But even when it does, there will be limitations. As it is yet to be seen what those are, I cannot say much about them, but you can imagine that an instance which is in a Receive activity (waiting to be called) cannot be migrated to a version from which that activity has been removed.

Now let's discuss the criteria that make (part of) your application a candidate for the Microprocess Architecture in more detail.

Point 1 is a clear indicator, as you cannot assume instances can always be migrated to the new version that has the changes incorporated. An example is a long-running legal process that has to cater for changing procedures and laws. Or a move house process initiated by the customer some time before the move will actually happen, where in the meantime the organization or customer situation may have changed, requiring the move house to be handled differently. As long as the top-level process is not changed in a non-backward compatible way, applying the Microprocess Architecture may support this to a great extent.

Point 2 might be less obvious unless you start thinking about what it means when you have changed the process and there are instances running in a previous version that cannot be migrated. You should try to avoid having multiple versions (or revisions as you would call them) of the same process application being deployed, but you may be forced to do so. This will have an impact on the process engine, not only from a performance but also from an operations perspective. Someone who has to analyze the flow of the process will have to be aware there can be many revisions of (part of) the application that all work differently.

Point 3 addresses the level of functional modularization that can be achieved. Often it is already a pretty natural way of development to implement isolated business functions in modules, or in the context of this article, microprocesses of their own that also can be maintained and deployed isolated from each other. An example is some generic omni-channel notification feature to inform the customer about the status of something like an order, service request, or complaint. Another example is a reusable process to handle technical faults. The microprocesses can be reused, but there still is a top-level process that determines the orchestration or choreography of the microprocesses. In case of a Dynamic Process, the business rules determining the choreography can also be implemented as modules of their own that (currently) only can be changed dynamically as long as the rules are data-driven, and the interface of those rules does not change. All in all the Microprocess Architecture mainly adds flexibility to the microprocesses, and less to the top-level process. One therefore should strive to have as little business logic in the (long-running) top-level process as possible, and delegate this to the microprocesses and rules.

Point 4 is a very clear indicator. Whenever parts of a business process are executed by different business units it always will be a good idea to group business functions by business unit in such a way that they are isolated from each other, resulting in microprocesses of their own. The obvious rationale is that a business unit can then execute its own roadmap for changes of the process without unnecessary interference from the roadmaps of other business units.

Now let's discuss how this can be applied to a process application or parts of it.

When a process starts there may be quite a few activities that are executed before it has to pause for a longer period of time. Or said differently, before it reaches a human activity that may take days or weeks before it is executed, or a point where it has to wait for a message from some external application, organization or organizational unit (in BPM-speak these activities would be in a different pool). The activities up to that point may not need to be implemented as microprocesses of their own. After all, once the process has started, a change to the process can no longer be applied to those activities as they will already be done. In contrast, for all activities coming after that you still have an opportunity to execute those differently. In other words, any point where the process can be paused for a longer period of time should be considered to be the end of a microprocess, and the first activity after that to be the start of a new one.

An activity may represent a business capability that consists of several smaller but strongly related steps. It would be wise to isolate these from the rest of the process, so that this set can be maintained and operated separately. This then will be a microprocess of its own, or even a microservice.

Finally, as argued, changes in the top-level process will pose a challenge at some point. To some extent this can be addressed by letting the cross-over from one phase to the other be the start of a new microprocess. For example, the first phase may concern the sales cycle to a customer. The customer may need some time to consider the offer. Once the product has been sold the delivery process can start. This can be a good opportunity to start a new microprocess, implying a split of the main process into two separate ones, a "Sales" and a "Delivery" process.

The Process Group Pattern

Updated on 2019-09-16 to include screenshot of processes in Workspace.

For a somewhat more complex process, and especially when applying the Microprocess Architecture, you may have more than one process and probably several integration applications that make up the implementation of one single business process. This implies that when executing a business process there will be 2 or more instances of the process, and integration applications. Now how can a business user or Applications Administrator correlate all these instances to monitor the flow of the business process?

The on-premise Oracle BPM Suite (and SOA Suite) has the concept of "flowId" which is an id that is generated by the BPM engine at the start of the first instance and then "passed on" from one instance to the other. Using Enterprise Manager, by means of the flowId one can easily follow how one process or integration calls the other, and by putting it in the process or integration instance title, also in the Workspace. The Oracle Integration Cloud (OIC) does not have the concept of flowId, at least not yet. Now what to do? Here comes the Process Group to the rescue.

The Process Group Pattern is relatively simple. It includes a unique "processGroupId" that, like the flowId, is generated at the start of a process flow and then passed on from one process or integration to the other. A robust way to get a processGroupId is by using the GUID you can get from OIC (or the BPM Suite).

However, unlike the flowId, the processGroupId is unique over all engine instances you may have. When using a GUID, it is even unique over the world. Uniqueness over engine instances becomes important when at any point in time you have two or more of those and when some of the components of the process application are deployed on a different instance than the one starting it. Also unlike the flowId, the processGroupId is persisted in a custom database and can be kept persisted beyond the life cycle of the business process (which after purging will have disappeared from the engine's database). Finally, you can return the processGroupId as a response to the start operation of the process, allowing the starting application to store a cross-reference to the Process Group instance.

To make it possible to query instances by processGroupId in the Workspace, you can set the title of the instance as a first activity in every individual process application. For OIC integrations you can make it one of the tracking variables. The below shows how in OIC this would look for process instances:

Next to the processGroupId, you can also persist more metadata about the business process, ending up with a business object looking like the following:

The combination of processGroupType and businessId should be unique, at least for running instances. By storing this combination together with the processGroupId you have a mechanism to prevent duplicate instances of the same business process from being started.
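As a minimal sketch of this duplicate check, assume the custom database is a single table, here created in an in-memory SQLite database. The table name process_group, the status column and the function names are hypothetical and only serve the illustration; processGroupId, processGroupType and businessId are the elements described above.

```python
import sqlite3
import uuid


def create_schema(conn):
    """Create a hypothetical process_group table for the sketch."""
    conn.execute(
        """
        CREATE TABLE process_group (
            process_group_id   TEXT PRIMARY KEY,
            process_group_type TEXT NOT NULL,
            business_id        TEXT NOT NULL,
            status             TEXT NOT NULL DEFAULT 'RUNNING'
        )
        """
    )
    # Partial unique index: the combination only has to be unique
    # for Process Groups that are still running.
    conn.execute(
        """
        CREATE UNIQUE INDEX running_process_group
        ON process_group (process_group_type, business_id)
        WHERE status = 'RUNNING'
        """
    )


def start_process_group(conn, process_group_type, business_id):
    """Return a new processGroupId, or None when a running duplicate exists."""
    process_group_id = str(uuid.uuid4())  # a GUID, unique over engine instances
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO process_group "
                "(process_group_id, process_group_type, business_id) "
                "VALUES (?, ?, ?)",
                (process_group_id, process_group_type, business_id),
            )
    except sqlite3.IntegrityError:
        return None
    return process_group_id


def complete_process_group(conn, process_group_id):
    """Mark a Process Group as completed; the record itself is kept."""
    with conn:
        conn.execute(
            "UPDATE process_group SET status = 'COMPLETED' "
            "WHERE process_group_id = ?",
            (process_group_id,),
        )
```

In this sketch a second start for the same processGroupType and businessId returns None as long as the first Process Group is running, and is accepted again once the first one has been marked as completed.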

For an even more complex business process consisting of a main and a few functional subprocesses, you can introduce an extra layer by adding a Process Group Instance business object. This might come in handy for example when you have a stand-alone, reusable business process (like a Signing process) that is called by the main process.

More formally:

Context:

The implementation of a business process is made up of several components (process or integration applications) and there is no out-of-the-box way to relate the instances of these components to each other in a (custom) Workspace. One might also want to keep a persisted record of the metadata of the business process after its instance(s) have been purged from the engine's database. Or one might need a means to prevent duplicate instances of the same business process from being started.

Solution:

A Process Group is the collection of instances of components that make up the flow of one single business process. It includes a unique processGroupId that is generated at the very beginning of the business process, which is persisted in a custom database, together with a combination of a businessId and processGroupType. There can only be one combination of businessId and processGroupType for any running Process Group instance at any time. The processGroupId can be returned by the start operation to support cross-reference from the starting application.

For more complex process applications an extra Process Group Instance layer can be added as a child of the Process Group, to support business process applications made up of two or more functional process applications. A Process Group Instance is the collection of one or more instances of tightly coupled components that together make up one single process application, which in principle is reusable.

Implication:

A custom database is required for storing the Process Group and Process Group Instance. A function is required that returns a processGroupId that is guaranteed unique over process engine instances when (at some point in the future) components of the business process need to be deployed on two or more engines.

OIC: Making a REST Integration Returning a 404 instead of 500

In this article I describe how to return an HTTP 404 (resource) Not Found with a REST integration that in turn calls another REST service that returns a 404.

This article is superseded by my article Fault Handling in OIC, which gives you the proper way to do this.

When an integration invokes the GET action on a REST service that returns a 404, the integration will raise an APIInvocationError. As a result, the integration in turn will respond with an HTTP 500 error, which is typically not what you want.

Embedding the invoke in a Scope gives you the option to add a Fault Handler:

Choosing the APIInvocationError gives you the option to configure how any APIInvocationError should be handled. As you can see below, I have configured it to use a Switch, where the top flow will make it return a 404:

In all honesty this is not watertight, because I filter on all APIInvocationErrors where the type is empty (“”). The reason being that all the elements, type, title, detail and errorCode are empty, so I cannot filter on anything.

As I found out, you will also run into this situation when the URL of the Connection used for the invoke is wrong, and probably a few other situations as well. I rely on the assumption that my integration is properly configured, so that the most likely cause of an APIInvocationError indeed is that it concerns a 404.

To make my integration return a 404, I map this as a hard-coded value to the errorCode:

Except for the errorCode I have hard-coded the other elements as well. Probably not exactly according to the specifications, but good and especially clear enough for me:
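Outside of OIC, the decision made by the Switch can be sketched roughly as follows. This is only an illustration of the logic, not OIC configuration: the function name map_fault and the hard-coded values for type, title and detail are assumptions, as the values I actually used are only visible in the screenshots.

```python
from typing import Dict, Tuple

FAULT_FIELDS = ("type", "title", "detail", "errorCode")


def map_fault(fault: Dict[str, str]) -> Tuple[int, Dict[str, str]]:
    """Map a caught APIInvocationError to an HTTP status and error body."""
    # Top flow of the Switch: an empty type is assumed to mean that the
    # invoked service returned a 404 (not watertight, as explained above).
    if fault.get("type", "") == "":
        return 404, {
            "type": "about:blank",                       # hypothetical value
            "title": "Not Found",                        # hypothetical value
            "detail": "The resource could not be found",  # hypothetical value
            "errorCode": "404",
        }
    # Otherwise: pass on whatever fault details are available as a 500.
    return 500, {field: fault.get(field, "") for field in FAULT_FIELDS}
```

Calling map_fault with a fault in which all elements are empty gives the 404 response, while a fault with a non-empty type is passed on as a 500.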

In the meantime, in the background there is a SOAP fault with a reason containing the HTTP 404, so at some point I hope this will be exposed so that I can filter in a reliable way:

OIC: Handling Optional Elements in a REST Integration

This article is a follow-up to a previous article in which I discuss how to handle optional elements in case of XML in the Oracle Integration Cloud (OIC). In the following I discuss how to create an integration that invokes a VBCS REST service and works in (almost) the same way as the VBCS REST service itself.

A challenge with mapping is always how to handle optional elements. In the previous posting that I refer to above, I describe a way to deal with this in case of XML messages. As I found out (the hard way) this cannot be applied 100% to JSON.

I have made it work for an integration that invokes the REST service on a VBCS business object. As there are challenges especially with numeric fields and references (foreign keys), I have used a simple BO called Detail having a string field, a number field and a reference. With VBCS BO’s the latter implies a number field that references the (number) id field of another BO it refers to, which in this case is called Master.

The Master BO looks as follows (ignore the create/update fields, those are generated by default and for the example not relevant):

The Detail BO looks as follows:

As you can see, name (string), master (reference to Master.id, number) and age (number) are all optional.

I created a single REST integration that, using the OIC Pick Action feature, has a POST, GET and PATCH action to create, get and update a Detail:

Use if-function for Mapping Input

Except for the PATCH action, all mappings to the requests of the invoke use the if-function to check if the source has a value, and only if it has one, map it to the target:

Use string-length() for Mapping Output

The mappings from the invokes to the response of the integration all use the string-length() function to check if the response element of the invoke has a value, and if so, map it to the target.

I make use of the fact that internally JSON is transformed into XML and there is no payload validation, so numbers can also be checked using string-length(). By doing it like this the element will be left out completely, instead of being returned as an empty string “” or failing in case of a number. This is not 100% how the VBCS service works (which will return null instead of leaving the field out), but for me that is not an issue when using the integration.

Special Case: PATCH

In case of a PATCH I need my integration to work so that left-out elements are not updated (i.e. stay untouched) and so that I can nullify them by passing null as a value. For the invoke to the VBCS REST service this will fail for number elements (with JSON a number is a primitive type that cannot be null). I therefore apply a trick by using a JSON sample payload that treats all elements as string, including the master and age (both number):

{"name": "Huey", "master": "1", "age": "15"}

This, in combination with the if-function when mapping the request to the PATCH invoke, makes it work the same way as the VBCS REST service works. For the response I use the string-length() as described above.
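The intended behavior of both mappings can be sketched in a few lines of Python. This is not how the OIC mapper is implemented, only an illustration of the outcome I am after; the function names map_response and map_patch_request are hypothetical, and the field names are those of the Detail BO.

```python
import json

DETAIL_FIELDS = ("name", "master", "age")


def map_response(invoke_response):
    """string-length() style check: leave out elements that are missing or empty."""
    return {
        field: value
        for field, value in invoke_response.items()
        if value is not None and len(str(value)) > 0
    }


def map_patch_request(request):
    """Map only the elements present in the request.

    A left-out element is not mapped (so it stays untouched), null is
    passed on as null, and any other value is passed on as a string.
    """
    return {
        field: None if request[field] is None else str(request[field])
        for field in DETAIL_FIELDS
        if field in request
    }


# A request that only nullifies age, leaving name and master untouched.
print(json.dumps(map_patch_request({"age": None})))
```

The difference between a key that is absent and a key that has the value None is what stands in here for the difference between a left-out element and a nullified one.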

The picture on the left side shows an example of an invoke to the PATCH action with all elements present, and on the right side where all elements are nullified:

As you can see, nullifying the master results in a reference to a row with Master.id = 2, which happens to be the only Master row with no name. The VBCS REST service works the same way, so apparently some ‘intelligence’ is applied here. When adding an extra Master with no name so that now I have two of those, VBCS can no longer decide which one to take and nullifies the reference to the master altogether:

When I leave out any element in the request, the field in the BO is not touched. It’s a bit boring to see, so I will spare you the screenshots. You will have to trust me on that.

The Fault Encapsulation Pattern

This posting discusses an integration pattern where you return a fault as a message instead of as a fault, to prevent that the execution of the integration is indicated as having errored.

There are a couple of situations where you may not want a synchronous integration to return a fault to its consumer. Examples are:

- Some back-end system is raising a fault which is not really a fault but a way to give the consumer a particular outcome. Like a credit limit check that returns OK when the limit is not reached, but otherwise gives a CreditLimitReached fault.

- A call to the back-end system may time out, telling the integration that the system is not available, which may be a regular state. For example, the integration calls the system to check if it is still running, and if it is, tells it to shut down. When the system is already shut down the call will time out.

The reason you may not want to return a fault to the consumer of your integration, at least not as a fault, might be that this flags the execution of the integration as errored. For example, integrations in the Oracle Integration Cloud (OIC) will show up in the Dashboard as errored instances. That in turn should trigger Systems Administration to have a look at why it failed, only to find out it did not, as that is normal behavior. Before you know it, Systems Administration stops having a look, also when there is something seriously wrong with your integration.

To prevent this from happening you may want to handle the fault as an alternate flow instead of an exception flow. This is what the Fault Encapsulation pattern is about.

Fault Encapsulation Pattern

In simple terms, when applying the Fault Encapsulation pattern, you don't return an error for business faults, but instead encapsulate the error in a "message" element of the response which is an optional part of the normal response.

The following "BPEL-ish" diagram shows how this looks.

The invoke to the back-end system is a scope with a catch block that catches the error, wraps it in a normal message and then returns the response. In OIC this works in a similar way.

More formally:

Context:

A business fault in a synchronous service operation should not stop its processing, to allow returning other information than the fault alone.

A business fault caught by a synchronous service operation that otherwise executed properly, should not flag the operation as failed to prevent false positive error notifications. Instead handling of the fault should be part of normal process execution by the consumer.

Solution:

The fault in the synchronous service operation is caught using an exception handler that wraps the fault in a message element. The message element is an optional part of the regular response message of the synchronous service operation. System faults in the processing of the synchronous service operation itself are handled as regular faults, in case of SOAP by raising a SOAP Fault, or in case of REST by returning a 4xx or 5xx HTTP status code.

Implication:

The consumer cannot use any regular fault handling mechanisms to handle the business fault. Instead it will have to check for the message element being present in the response and act on that.
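A minimal sketch of the pattern in Python, using the credit limit check as example. All names (BusinessFault, check_credit_limit, credit_check_operation and the elements of the response) are hypothetical; the point is only that the business fault is caught and wrapped in an optional message element, while any other exception is left to propagate as a regular fault.

```python
class BusinessFault(Exception):
    """A fault raised by the back-end that is really a business outcome."""

    def __init__(self, code, text):
        super().__init__(text)
        self.code = code


def check_credit_limit(amount, limit):
    """Stand-in for the back-end system (hypothetical)."""
    if amount >= limit:
        raise BusinessFault("CreditLimitReached", "The credit limit has been reached")
    return "OK"


def credit_check_operation(amount, limit):
    """Synchronous operation that applies the Fault Encapsulation pattern."""
    response = {"requestedAmount": amount}
    try:
        response["result"] = check_credit_limit(amount, limit)
    except BusinessFault as fault:
        # Alternate flow: wrap the fault in the optional message element.
        response["message"] = {"code": fault.code, "text": str(fault)}
    # Any other exception is a system fault and is not caught here.
    return response


# The consumer checks for the message element instead of catching a fault.
response = credit_check_operation(150, 100)
if "message" in response:
    print("Business fault:", response["message"]["code"])
```

As the implication states, the consumer has to inspect the response for the message element, because from its point of view the call succeeded.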

Oracle Integration Cloud: How to Rename or Delete a Swimlane Role

In the category "it was right in front of me, but I was too blind to see", below is how you can "rename" and delete a swimlane (application) role. The documentation Work with Process Roles and Swimlanes for example does not discuss this, and Googling it did not help me either. So here you go...

Deleting a swimlane is easy: you select it and press Delete or the delete icon at the top.

However, this does not delete the role itself. The issue is that when you activate the application, the role will turn up in the Workspace (Administration -> Manage Roles). You can delete it there but with the next activation it is back again.

The way to do it is by going to the small icon in the top-right corner (just above the "hamburger menu") which reveals the "General Properties". Below that is the link to "Organization", which takes you to a pop-up where you can delete the role.

Make sure you don't use the role anywhere before deleting it. Otherwise the swimlane will change to "Unassigned role". This does not result in a validation error, so the application can be activated, resulting in an application role in Workspace with the name "Unassigned role". Then you have to delete that in two places (Composer and Workspace).

You cannot rename a role. For example, when I want to rename the role with name "Role With Tipo" into "Role Without Typo" I have to add the latter and then delete the first.

Oracle Dynamic Process Calling Structured Process Caveat

When implementing a Dynamic Process, there currently are three options to implement a case activity: Human Task, Service (or Integration), and Process. At least up to version 18.4.5.0.0 there is a limitation when defining the interface in case of a Process Activity: you cannot use a Business Type which is based on an XSD element, which in turn is based on a complexType. The below describes the problem you will run into, and a suggestion for how to work around it.

When developing XSDs for web services you may have developed the practice of defining a complexType with an element based on that complexType, for example as in the below XSD.

Reason could be that you developed with the (on-premise) SOA or BPM Suite and found this to give the best flexibility, especially when integrating with Oracle Business Rules.

However, when you base the input argument of a Structured Process on an element that in turn is based on a complex type, you will find this does not work when using it to implement the input argument of a Process Activity. You will run into an error similar to the following:

In the example below the "First" activity is implemented as a Process activity with name "SRElementStart".

The Dynamic Process has a start argument based on the Business Type "RequestElement" that is created using the "request" element from the XSD:

Also the Structured Process has a start argument based on "RequestElement":

For the Process activity with name "First" the process input argument is mapped to the input argument of the Structured Process:

When running this application it fails in the Start event of the Structured Process with the error mentioned at the beginning.

The problem is that when invoking the Structured Process, the Dynamic Process uses the name of the Business Type instead of the name of the argument to invoke it.

The solution is to define the input argument using a Business Type that is based on the complexType (instead of the element).

So far this is the only place where I ran into this issue. After fixing it I can map the Structured Process input argument backed by the complexType to a (local) Business Object backed by the element without an issue. I can also map the same Business Object back to the Dynamic Process Business Object (backed by the element) without an issue. A Business Object backed by an element can be mapped 1:1 on a Business Object backed by a complexType.

You can prevent the issue by defining all your Business Types on complexTypes, or only for the input argument of Structured Processes. So far I have not found any limitations to do the first, so that probably is the easiest to do.

Many thanks to Luc Gorissen who helped me to discover the solution, and let's hope that with some next version this restriction is gone.

Understanding Mapping Optional Elements in OIC Integration

There are some easy mistakes to make when mapping messages with optional elements in OIC Integrations. This article describes how optional elements are handled, and a way to make this work the way you want.

OIC Integration handles optional elements the same for both XML and JSON based elements, including mapping from XML to JSON and vice versa. The reason being that internally OIC will map JSON to XML. The examples hereafter therefore are based on XML.

I will discuss the examples on the following XSD that is used in an integration that maps all elements 1:1 and echoes the result back.

"Optional" in this context means that the element can be completely left out using 'minOccurs="0"'. Apart from that one can also specify if a null value can be assigned to the element using 'nillable="true"'. This means that an empty tag is allowed in the message (e.g. <optional/> or <optional></optional>).

When only the mandatory elements of the master are passed on you will find that all optional elements are echoed as empty, even those of the child:

The first mistake you could have made is to expect all elements that are not provided with the request not to be in the response either. Not a strange assumption considering that in XML Schema the default for the nillable attribute is "false", so strictly speaking, according to the XSD the response is not valid XML.

The reason OIC handles it like this is one of fault tolerance, as in case of a 1:1 mapping where the source is not present, the alternative would be giving a selectionFailure (the equivalent of a NullPointerException).

Although I appreciate this fault-tolerant way of mapping for its ease of use for more 'Citizen Developer' type of users, it might not be what you want. It will specifically result in challenges when you are dealing with external systems that rely on the conceptual difference between an element that is left out to mean: "we don't know the value", versus empty to mean: "we know there is no value" (e.g. it may not be applicable in the context of usage). Another reason for leaving empty elements out of the message may be to keep the size of the message as small as possible.

There is a first step to work around this, which is making use of the if-function (coming from XSLT / XPath which is the technology used under the hood) for all optional elements:

With the echo service this results in the following:

You now may have run into the second mistake (I did) as although none of the optional root elements are present, the root element <details/> still is. This can be resolved by also using the if-function for that element:

There still may be a challenge to overcome. You may have a similar issue in OIC Processes which, unlike OIC Integrations, currently do not have the possibility to conditionally map elements and leave empty elements out. So when you call an Integration from an OIC Process you may have to deal with empty elements as well. For that you can use a trick where the if-function is used in combination with the string-length() function. Using this function on empty elements will result in "false", which also works for number elements (as these will automatically be converted to string). In the following both have been applied on the master.optional element:

You can read this as: if the element is present, then if it has a string length (meaning it is or could be converted to a string, so it is not empty), then map its value.
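The same reading can be sketched in Python with the standard xml.etree.ElementTree module. This is not the XSLT that OIC generates, only an illustration of the outcome; the function names and the element name "mandatory" are assumptions, whereas master and optional come from the example.

```python
import xml.etree.ElementTree as ET


def map_optional(source_parent, target_parent, name):
    """Map the element only if it is present and has a string length."""
    source = source_parent.find(name)
    if source is not None and len(source.text or "") > 0:
        ET.SubElement(target_parent, name).text = source.text


def echo(request_xml):
    """Echo the master, leaving out an optional element that is absent or empty."""
    source = ET.fromstring(request_xml)
    target = ET.Element(source.tag)
    # The mandatory element is always mapped 1:1.
    ET.SubElement(target, "mandatory").text = source.findtext("mandatory")
    map_optional(source, target, "optional")
    return ET.tostring(target, encoding="unicode")


# The empty <optional/> is left out of the response instead of being echoed.
print(echo("<master><mandatory>a</mandatory><optional/></master>"))
```

With a value in the optional element, for example a number, the element is mapped as usual because its text has a string length greater than zero.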

Next time I will blog about a new feature to come in OIC Process to handle conditional mappings.

OIC Integration handles optional elements the same for both XML as well as JSON based elements, including mapping from XML to JSON and vise verse. The reason being that internally OIC will map JSON to XML. The examples hereafter therefore are based on XML.

I will discuss the examples on the following XSD that is used in an integration that maps all elements 1:1 and echoes the result back.

"Optional" in this context means that the element can be completely left out using 'minOccurs="0"'. Apart from that one can also specify if a null value can be assigned to the element using 'nillable="true"'. This means that an empty tag is allowed in the message (e.g. <optional/> or <optional></optional>).

When only the mandatory elements of the master are passed on you will find that all optional elements are echoed as empty, even those of the child:

The first mistake you could have made is to expect all elements that are not provided with the request not to be in the response either. Not a strange assumption considering that in XML Schema the default for the nillable attribute is "false", so strictly speaking, according to the XSD the response is not valid XML.

The reason OIC handles it like this is fault tolerance: in case of a 1:1 mapping where the source is not present, the alternative would be a selectionFailure (the equivalent of a NullPointerException).

Although I appreciate this fault-tolerant way of mapping, as it makes things easier for more 'Citizen Developer' type of users, it might not be what you want. It will specifically result in challenges when you are dealing with external systems that rely on the conceptual difference between an element that is left out, meaning "we don't know the value", and one that is empty, meaning "we know there is no value" (e.g. it may not be applicable in the context of usage). Another reason for leaving empty elements out of the message may be to keep the message as small as possible.
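To illustrate the difference a receiving system may rely on, here is a minimal Python sketch that tells the three situations apart. It is an illustration only: the function name element_state is hypothetical, and the element names in the sample message are assumptions based on the text.

```python
import xml.etree.ElementTree as ET


def element_state(parent, name):
    """Return 'absent', 'empty' or 'value' for the child element `name`."""
    child = parent.find(name)
    if child is None:
        return "absent"  # left out: "we don't know the value"
    if len(child) == 0 and not (child.text or "").strip():
        return "empty"   # empty tag: "we know there is no value"
    return "value"


message = ET.fromstring("<master><mandatory>a</mandatory><optional/></master>")
states = {name: element_state(message, name)
          for name in ("mandatory", "optional", "details")}
print(states)
```

A system that only checks whether the element is there would treat the second and third case the same, which is exactly the distinction that gets lost when empty elements are echoed.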

Dynamic Process, Conditions and Scope

In Oracle Integration Cloud's Dynamic Processes activation/termination conditions can be based on case events. These events are related to the scope of the components they relate to, which implies some restrictions. The below explains how this works, and how to work around these restrictions.

A Dynamic Process or Case (as I will call it in this article) in the Oracle Integration Cloud consists of four component types: the Case itself, Stages (phases), Activities, and Milestones. An Activity or Milestone is either in a particular Stage (in the picture below Activities A to H are), or global (Activities X and Y). Cases, Processes, Stages, Activities and Milestones cannot be nested (but a Case can initiate a sub-Case via an Activity, which I will discuss another time).

Except for the case itself, all other components can explicitly be activated/enabled or terminated/completed based on conditions. For example in the dynamic process above Milestone 1 is activated once Activity A is completed, and Stage 2 is to be activated once Stage 1 is completed.

A Stage implicitly completes when all work in that stage is done (i.e. all Activities), and a Case implicitly completes when all work in the case is done. Currently the status of a Case cannot be explicitly set using conditions, but I would expect this to become possible in some next version. In the meantime there is a REST API that can be used to close or complete a case.

There are two types of conditions for explicit activation/termination:

- (case) Events, for example completion of an activity

- (case) Data Driven, for example "status" field gets value "started"

The scope of an Event is its container, meaning:

- A Stage can only be activated or terminated by a condition based on an Event concerning another Stage or a Global Activity.

- An Activity can only be activated or terminated by a condition based on an Event concerning another Activity or Milestone in the same Stage.

- A Milestone can only be completed by a condition based on an Event concerning an Activity or another Milestone in the same Stage.

- A Global Activity can only be enabled or terminated by a condition based on an Event concerning a Stage, a Global Milestone, or another Global Activity.

- A Global Milestone can only be enabled or terminated by a condition based on an Event concerning a Stage, a Global Activity, or another Global Milestone.
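The scope rules above can be sketched as a small check. This is a Python illustration of the rules as listed, not an OIC API: the Component class and the event_in_scope function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Component:
    name: str
    kind: str                    # "stage", "activity" or "milestone"
    stage: Optional[str] = None  # owning Stage; None for Stages and global components


def event_in_scope(target: Component, source: Component) -> bool:
    """Can a condition on `target` use an Event concerning `source`?"""
    if target == source:
        return False
    source_is_global = source.kind != "stage" and source.stage is None
    if target.kind == "stage":
        # Another Stage or a Global Activity.
        return source.kind == "stage" or (source.kind == "activity" and source_is_global)
    if target.stage is not None:
        # Activity or Milestone in a Stage: only components in the same Stage.
        return source.kind in ("activity", "milestone") and source.stage == target.stage
    # Global Activity or Global Milestone: a Stage or another global component.
    return source.kind == "stage" or source_is_global


stage_1 = Component("Stage 1", "stage")
stage_2 = Component("Stage 2", "stage")
activity_a = Component("Activity A", "activity", stage="Stage 1")
milestone_1 = Component("Milestone 1", "milestone", stage="Stage 1")
activity_x = Component("Activity X", "activity")

print(event_in_scope(milestone_1, activity_a))  # same Stage
print(event_in_scope(stage_2, milestone_1))     # Milestone 1 is inside Stage 1
```

The first call returns True and the second False, because Milestone 1 is not visible outside Stage 1.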

Let's assume that in the example Stage 2 is only to be activated when Milestone 1 is completed and otherwise Stage 2 is to be skipped and the case should directly go to Stage 3. Because of the way events are limited by their scope, you cannot create a condition for Stage 2 to be skipped based on the completion of Milestone 1 (which is in Stage 1 and therefore not visible outside).

The work-around is to use a Data Driven condition instead. You can for example have a "metaData.status" field that you can set to something like "skip phase 2" and use that instead.

In general, it is probably always a good idea to give your case a complex data element, for example called "metaData", consisting of fields like "dateStarted" and "status" that you fill out via the activities and that, if needed, can be used in conditions everywhere.
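As an illustration of the data driven work-around, the following Python sketch models the "metaData" element and the two conditions for the Stage 2 example. It is a sketch under assumptions: the function names are hypothetical, the fields are written in Python style (date_started for "dateStarted"), and in OIC you would configure these conditions in the dynamic process rather than code them.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

SKIP_PHASE_2 = "skip phase 2"


@dataclass
class MetaData:
    date_started: Optional[date] = None
    status: str = "started"


def stage_2_activates(stage_1_completed: bool, meta: MetaData) -> bool:
    """Stage 2 starts after Stage 1, unless an activity flagged it to be skipped."""
    return stage_1_completed and meta.status != SKIP_PHASE_2


def stage_3_activates(stage_1_completed: bool, stage_2_completed: bool,
                      meta: MetaData) -> bool:
    """Stage 3 starts after Stage 2, or directly after Stage 1 when Stage 2 is skipped."""
    skipped = stage_1_completed and meta.status == SKIP_PHASE_2
    return stage_2_completed or skipped


meta = MetaData(date_started=date(2020, 1, 1))
meta.status = SKIP_PHASE_2  # set by an activity in Stage 1
print(stage_2_activates(True, meta), stage_3_activates(True, False, meta))
```

Because the status field is case data rather than an Event, it is visible to the conditions of every component regardless of Stage.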

Oracle Integration Cloud: New! The Data Mapper Activity

In a previous blog I discussed a work-around for not having a Script activity in Oracle Integration Cloud's Process Builder. In this blog I will discuss another work-around which is actually not a work-around, but the real thing: the Data Mapper!

As you can read in a previous blog about the matter, not having the equivalent of the Script activity of the on-premise BPM Suite, was an omission that we often had to find a work-around for. The one I used was the Business Rule activity. However, some weeks ago the Business Rule activity got deprecated (you could clearly see that).

With the latest release of OIC (which may not yet be publicly available when you read this) the Business Rule activity has vanished. At the same time the Data Mapper activity has been added.

The Data Mapper activity has no properties other than that you can put it in draft mode.

The implementation is as simple as you might expect: there is only an Output tab on which you can map data from Data Objects, Predefined Variables and Business Parameters on one hand, to Data Objects and Predefined Variables on the other.

Next to simple mappings, you can also create and use (reusable) transformations to map Data Objects (or attributes) of which the types don't match.

I hope I don't have to write this any time in the future again, but if you used my work-around I got you into trouble if you want to export and import an application, because import with a Business Rule activity in the application is not supported! Sorry :-D

Oracle Integration Cloud: Customer Managed & Patching

Currently the Oracle Integration Cloud (OIC) only comes as "customer managed". Among others this means that you as a customer have access to management consoles. It also means that you determine when to apply patches, as Oracle does not do that for you. The following describes how easy that is.

Oracle Cloud solutions can come in two flavors: Oracle Managed and Customer Managed. The first means that maintenance, including patching, is done by Oracle. You don't have to ask for it or initiate it, as it all happens "automatically", typically during non-business hours (like Friday evening). It also means that you don't have any control over it. Now that probably is exactly what you want. However, OIC currently only comes as Customer Managed. This means that you have access to the WebLogic Service Console and the Fusion Middleware Console (although not with all the features that you would have, for example, with the on-premise version of the BPM Suite). I expect these consoles not to be available in the Oracle Managed flavor that is to come soon.

Another difference will be the way it is provisioned. With the Customer Managed flavor you have to provision a Storage Cloud yourself, and - depending on the type of template you use - also the Database Cloud.

With Oracle Managed I expect this to happen in one go, but that is yet to be seen. With Customer Managed you also have to think about how to configure the Stack that you want to use. A Stack is based on a Stack Template, which specifies the number of nodes, OCPUs, memory, database version and edition of a node (and a few other things). A Stack is a provisioned instance of a template. After provisioning you cannot change the instance or use another template. However, you can provision more instances based on the same stack. Another thing to point out is that with the Customer Managed flavor you need to indicate if and how you want it to be backed up.

Apart from some complexity but also flexibility that comes with determining your Stack Template, after provisioning there is little difference with the Oracle managed flavor. You can use it the same way, and if you have it configured to automatically do backups you don't have to think about that either. You do have to keep a keen eye on patches that may have become available, though.

If a patch is available, that will be shown on the Service Console:

You can start patching by clicking the link, which brings you to the Patch tab. In my case this gives a warning that I have no backup configured. It is a trial-only instance so I did not bother to do so. For a Production instance you should have done that (obviously). I don't know if I can still change that for my instance, but I don't think so. On the right-hand side there is a menu with two options: Precheck and Patch.

With the Precheck option you can let OIC verify if your instance is ready to apply the patch to. In my case it is.

With the Patch option from the menu you initiate the actual patching. In my case the patch can be applied in a rolling fashion, which means with the instance up and running. As a matter of fact, the patch cannot be applied with one or more instances being shut down.

There also was a patch for the DB instance available, which required a restart. I could only apply that after shutting down the OIC instance, but that is indicated clearly.

Just for the fun of it I did the precheck of the patch after applying it. It failed, which I expected because it was already applied. The results were not very clear though.
