The Anti-Kyte

Fixing data faux pas with flashback table #JoelKallmanDay

Ever get that sinking feeling as you realise the change you made to your critical reference data a few minutes ago hasn’t done quite what you intended ?

If you’re running on Oracle, you may be in luck.

The Flashback Table command is perfect for those occasions when IQ is measured in milligrams of caffeine…

I must confess that I can’t hear the word “flashback” without immediately thinking of Leee John’s falsetto vocal from Imagination’s 1982

Disco classic of the same name. This goes some way to explaining the example that follows…

create table eighties_hits

(

artist varchar2(100),

track varchar2(100),

created_ts timestamp default systimestamp

)

/

alter table eighties_hits enable row movement;

I’ve included a column to hold the row creation timestamp purely to make the examples that follow a bit simpler.

Now let’s populate the table :

insert into eighties_hits( artist, track)

values('Cher', 'If I Could Turn Back Time');

insert into eighties_hits( artist, track)

values('Huey Lewis and the News', 'Back In Time');

insert into eighties_hits( artist, track)

values('Imagination', 'Flashback');

commit;Later on, we insert another row…

insert into eighties_hits( artist, track)

values('AC/DC', 'Thunderstruck');

commit;

select *

from eighties_hits

order by created_ts

/

ARTIST TRACK CREATED_TS

------------------------- ------------------------------ ----------------------------

Cher If I Could Turn Back Time 14-OCT-25 17.40.42.318599000

Huey Lewis and the News Back In Time 14-OCT-25 17.40.42.358932000

Imagination Flashback 14-OCT-25 17.40.42.399394000

AC/DC Thunderstruck 14-OCT-25 17.52.38.326151000

Some later, realisation dawns that AC/DC didn’t release Thunderstruck until 1990. We need to get our table back to how it was

before that last change.

Of course in this simple example, we’d just need to delete the last record, but to do so would be to pass up the perfect opportunity to run :

flashback table eighties_hits to timestamp (to_timestamp( '2025-10-14 17:45', 'YYYY-MM-DD HH24:MI'))

…and it’s like that last DML statement never happened :

select *

from eighties_hits

order by created_ts

/

ARTIST TRACK CREATED_TS

------------------------ ------------------------------ -----------------------------

Cher If I Could Turn Back Time 14-OCT-25 17.40.42.318599000

Huey Lewis and the News Back In Time 14-OCT-25 17.40.42.358932000

Imagination Flashback 14-OCT-25 17.40.42.399394000

Note that the flashback operation behaves in a similar way to a DDL statement in that the transaction cannot be rolled back.

Incidentally, you can also use an SCN in the command. To find the scn for a timestamp, you can use the timestamp_to_scn function :

select timestamp_to_scn( systimestamp)

from dual;There is a time limit on how long you can wait to correct issues in this way.

To find out how long you have :

select default_value, value, description

from gv$parameter

where name = 'undo_retention';

DEFAULT_VALUE VALUE DESCRIPTION

--------------- --------------- ---------------------------------------

900 604800 undo retention in seconds

The default is a mere 900 seconds ( 15 minutes). However, in this environment ( OCI Free Tier) it’s a healthy 168 hours ( about a week).

Undropping a tableIf you’ve really had a bit of a ‘mare …

drop table eighties_hits;

Then flashback table can again come to your rescue.

This time though, undo_retention is not relevant as drop table doesn’t generate any undo. Instead, you need to check to see if the table you want

to retrieve is still in the recyclebin :

select *

from recyclebin

where original_name = 'EIGHTIES_HITS';

If you can see it then, you’re in luck :

flashback table eighties_hits to before drop;

Note that any ancillary objects (e.g. indexes, triggers) will be restored but will retain their recycle bin names.

ReferencesThe Oracle Documentation for Flashback Table is typically comprehensive.

Licensing – Flashback Table is an Enterprise Edition feature .

You can find more about the Recyclebin here.

Just in case you’re wondering, Oracle Base can tell you about Joel Kallman Day .

Getting Oracle to Create List Partitions automatically

I recently inherited the support of an application that had been written on Oracle 11g. One of the maintenance tasks was to create a new set of partitions every so often so that records with a new value in the list partition key could be created without erroring.

Fortunately, the application is now running on 19c, which means I can just get Oracle to sort it out for me.

Let me explain – a long time ago in a database far, far away….

Let’s say we have a table :

create table star_wars_films

(

release_year number,

title varchar2(500),

trilogy varchar2(25),

constraint star_wars_films_trilogy_chk check ( trilogy in ('ORIGINAL', 'SEQUEL', 'PREQUEL', 'OTHER'))

)

partition by list( trilogy)

(

partition other values ('OTHER')

)

/

insert into star_wars_films( release_year, title, trilogy)

values( 2016, 'Rogue One', 'OTHER')

/

1 row inserted.However, if we specify a partition key value where the partition does not yet exist, you may encounter the influence of the Dark Side…

insert into star_wars_films( release_year, title, trilogy)

values( 1977, 'A New Hope', 'ORIGINAL')

/

ORA-14400: inserted partition key does not map to any partition

Fortunately, it is now possible to wave a light-sabre and get Oracle to create a new List partition whenever necessary :

alter table star_wars_films set partitioning automatic;

Table STAR_WARS_FILMS altered.When we re-try the insert, we can see that it now succeeds …

insert into star_wars_films( release_year, title, trilogy)

values( 1977, 'A New Hope', 'ORIGINAL')

/

1 row inserted.…because Oracle has taken matters into it’s own hands and created the required partition …

select partition_name, high_value

from all_tab_partitions

where table_owner = user

and table_name = 'STAR_WARS_FILMS'

/

PARTITION_NAME HIGH_VALUE

-------------- ------------

OTHER 'OTHER'

SYS_P22788 'ORIGINAL'

Incidentally, if you wanted to create a table with automated partition creation enabled from the get-go then this would do the job :

drop table star_wars_films;

create table star_wars_films

(

release_year number,

title varchar2(500),

trilogy varchar2(25),

constraint star_wars_films_trilogy_chk check ( trilogy in ('ORIGINAL', 'SEQUEL', 'PREQUEL', 'OTHER'))

)

partition by list( trilogy) automatic

-- without a default partition you get ORA-00906 : missing left parenthesis

(

partition other values ('OTHER')

)

/

Whilst automated creation of partitions is an undoubted boon, you may have noticed that Oracle is a bit unimaginative with it’s partition names.

Trying to figure out which values are in which partitions is further hampered by the fact that, whilst Oracle has now gotten around to including partition high values in clob and json format in ALL_TAB_PARTITIONS, it hasn’t done this until 23c.

In the meantime then, we’ll have to improvise if we want to do anything with the LONG column that holds them…

Painful past experience tells us that doing anything sensible with LONGs in a SQL Query predicate is pretty-much impossible, but PL/SQL will play quite happily with them.

Whilst a PL/SQL function may be the order of the day, recent versions of Oracle allow you include them in a SQL query :

with

function high_value_vc( i_owner in varchar2, i_tname in varchar2, i_part_name in varchar2)

return varchar2

is

v_high_value varchar2(4000);

begin

select high_value

into v_high_value

from all_tab_partitions

where table_owner = i_owner

and table_name = i_tname

and partition_name = i_part_name;

return v_high_value;

end;

select table_owner, table_name, partition_name,

high_value_vc( table_owner, table_name, partition_name) as high_value_vc

from all_tab_partitions

where table_owner = user

and table_name = 'STAR_WARS_FILMS'

/

Whilst this appears to produce the desired result…

PARTITION_NAME HIGH_VALUE_VC

------------------------------ ------------------------------

OTHER 'OTHER'

SYS_P22790 'ORIGINAL'

… when you apply a predicate on the value returned by the function, strange things start to happen :

with

function high_value_vc( i_owner in varchar2, i_tname in varchar2, i_part_name in varchar2)

return varchar2

is

v_high_value varchar2(4000);

begin

select high_value

into v_high_value

from all_tab_partitions

where table_owner = i_owner

and table_name = i_tname

and partition_name = i_part_name;

return v_high_value;

end;

select partition_name,

high_value_vc( table_owner, table_name, partition_name) as high_value_vc

from all_tab_partitions

where table_owner = user

and table_name = 'STAR_WARS_FILMS'

and high_value_vc( table_owner, table_name, partition_name) like '%ORIGINAL%'

/

I’ve tried running this against a variety of 19c databases running on various platforms and I get the same errors each time.

This varies between clients – SQLDeveloper Classic, for example, errors with :

Cannot read the array length because "this.accessors" is null

Connecting to the server and running SQL*Plus I get :

SP2-0642: SQL*Plus internal error state 2147, context 0:0:0

Unsafe to proceed

In SQL*Plus subsequent executions of the query do run…until you change the predicate when the same thing happens.

This issue does not occur when running the query against my 23cFree instance running on Oracle Linux in Virtualbox.

As I’m using 19c in my day job, I think I’ll need something a bit more reliable.

Step forward the built-in SYS_DBURIGEN function :

select partition_name,

sys_dburigen(table_owner, table_name, partition_name, high_value, 'text()').getclob() as high_value_vc

from all_tab_partitions

where table_owner = user

and table_name = 'STAR_WARS_FILMS'

and sys_dburigen(table_owner, table_name, partition_name, high_value, 'text()').getclob() like '%ORIGINAL%'

/

…which does the job without any fuss…

PARTITION_NAME HIGH_VALUE_VC

------------------------------ ------------------------------

SYS_P22790 'ORIGINAL'

That said, if you only want to use the high value to select the appropriate partition for maintenance, you could you the

“partition for” syntax in an alter table command.

For example you could assign a meaningful names to a partition by running something like :

alter table star_wars_films rename partition for ('ORIGINAL') to original;

select partition_name, high_valueLinks and References

from all_tab_partitions

where table_owner = user

and table_name = 'STAR_WARS_FILMS';

PARTITION_NAME HIGH_VALUE

------------------------------ ------------------------------

ORIGINAL 'ORIGINAL'

OTHER 'OTHER'

The latest in a long line of “articles-I-wish-I’d-read-earlier” is this (Jedi) masterful treatment of the subject of selecting long columns by Jonathan Lewis.

Adrian Billington’s article on working with long columns in Oracle SQL offers an alternative XML-based approach

This Connor McDonald Ask Tom video is also of interest.

The documentation for the SYS_DBURIGEN function is here , with some examples of how it can be used here .

Using DBMS_OUTPUT in utPLSQL tests for procedures that don’t perform DML

This post explores how you can unit test PL/SQL procedures that do not write to the database (other than the log table) by capturing dbms_output statements for use in a utPLSQL expectation.

But first, a news flash

Steven Feuerstein recommends using Skippy !

Sort of.

Well, not in so many words.

In fact, it’s unlikely that he’s aware of the little known Kangaroo aptitude for PL/SQL logging.

What he actually says is “Never put calls to DBMS_OUTPUT.PUT_LINE in your application code” …which initially put a crimp in my day as dbms_output is pretty central to the topic I want to cover here.

If you read the article further, however, he does conclude that (my emphasis):

“The bottom line is that any high quality piece of code should and will include instrumentation/tracing. Moving code into production without this ability leaves you blind when users have problems. So you definitely want to keep that tracing in, but you definitely do not want to use DBMS_OUTPUT.PUT_LINE – or at least rely only on the built-in to get the job done.“

This is where Skippy comes in as it allows you to send log messages to the screen, as well as recording them in the logging table.

So, what follows is :

- a quick recap of how to use the dbms_output buffer to store and retrieve messages without recourse to the SQL*Plus set serveroutput command

- how to incorporate this into a utPLSQL test

- how Skippy can save you having to add dbms_output calls directly into your code

But first…

The ProblemI have a PL/SQL procedure that takes an input parameter then does something outside of the database.

Maybe it sends an email, or starts a scheduler job in a different session.

What it doesn’t do is change any data.

Also, it doesn’t return any value.

This makes it difficult to write an automated unit test for.

In this context, the purpose of the unit tests are :

- to make sure that the procedure provides the expected output for a given input

- to be runnable automatically ( usually as part of a suite of unit tests)

The code we’ll be testing looks like this :

create or replace procedure never_say_never( i_golden_rule in varchar2)

is

begin

dbms_output.put_line('Validating input');

if i_golden_rule is null then

raise_application_error(-20910, 'Must have a golden rule !');

end if;

if length(i_golden_rule) > 75 then

raise_application_error(-20920, 'Golden rule must be more pithy !');

end if;

dbms_output.put_line('Input is valid. Processing...');

dbms_output.put_line('Rule One - '||i_golden_rule);

--

-- Do something non-databasey here - send an email, start a scheduler job to run a shell script,

-- print the golden rule on a tea-towel etc...

--

dbms_output.put_line('Rule Two - there are exceptions to EVERY rule');

end;

/

When we run it :

clear screen

set serverout on size unlimited

exec never_say_never('Never put calls to DBMS_OUTPUT.PUT_LINE in your application code');

We get :

Validating input

Input is valid. Processing...

Rule One - Never put calls to DBMS_OUTPUT.PUT_LINE in your application code

Rule Two - there are exceptions to EVERY rule

Our unit tests for this code will check that :

- if we do not specify a parameter value we will get ORA-20910

- if we specify a parameter value longer than 75 we will get ORA-20920

- if we specify a valid parameter, the last output message should be “Rule Two – there are exceptions to EVERY rule“.

For the last of those tests, we need to capture the last message in a variable in PL/SQL.

DBMS_OUTPUT buffering and retrieving messagesHere’s a simple example of using dbms_output to buffer a message and then read it into a variable :

declare

v_status integer;

v_message varchar2(32767);

begin

dbms_output.enable;

dbms_output.put_line('This is going to the buffer');

dbms_output.get_line( v_message, v_status);

if v_status = 1 then

dbms_output.put_line('No lines in the buffer');

else

dbms_output.put_line('Message in buffer : '||v_message);

end if;

end;

/

When we run this, we get :

Message in buffer : This is going to the buffer

PL/SQL procedure successfully completed.

There are quite a few examples of the get_line procedure in action but less of their it’s plural sibling – get_lines so…

clear screen

-- Flush the buffer so we don't pick up any stray messages from earlier in the session

exec dbms_output.disable;

declare

v_status integer;

v_messages dbmsoutput_linesarray;

begin

-- Enable the buffer

dbms_output.enable;

-- Put some messages into the buffer

-- dbms_output.put_line('Enabled');

for i in 1..5 loop

dbms_output.put_line('Message '||i);

end loop;

-- Retrieve all of the messages in a single call

dbms_output.get_lines( v_messages, v_status);

-- the count of messages is one more than the actual number of messages

-- in the buffer !

dbms_output.put_line('Message array elements = '||v_messages.count);

for j in 1..v_messages.count - 1 loop

-- Output the messages retrieved from the buffer

dbms_output.put_line(v_messages(j)||' read from buffer');

end loop;

dbms_output.put_line(q'[That's all folks !]');

end;

/

Running this produces the following output :

PL/SQL procedure successfully completed.

Message array elements = 6

Message 1 read from buffer

Message 2 read from buffer

Message 3 read from buffer

Message 4 read from buffer

Message 5 read from buffer

That's all folks !

PL/SQL procedure successfully completed.

Incidentally, if you’re code does contain dbms_output statements that, in hindsight, you would really liked to have written to your persistent log, then the dbms_output.put% procedures offer a method of doing this without necessarily having to change the original code.

The utPLSQL Unit TestsNow let’s take a look at the unit tests.

For the test that runs without error we’re going to get the last message from the buffer and check that it’s the one we expect.

The utPLSQL Unit Test package is :

create or replace package never_say_never_ut

as

--%suite(Words of Wisdom)

--%test( no parameter value)

procedure no_parameter_value;

--%test( parameter_too_long)

procedure parameter_too_long;

--%test( valid parameter)

procedure valid_parameter;

end;

/

create or replace package body never_say_never_ut

as

-- no parameter value

procedure no_parameter_value

is

begin

never_say_never(null);

ut.fail('Expected ORA-20910 but no error raised');

exception when others then

ut.expect(sqlcode).to_equal(-20910);

end;

-- parameter_too_long

procedure parameter_too_long

is

begin

never_say_never('you definitely do not want to ... rely only on the built-in to get the job done');

ut.fail('Expected ORA-20920 but no error raised');

exception when others then

ut.expect(sqlcode).to_equal(-20920);

end;

-- valid parameter

procedure valid_parameter

is

c_expected constant varchar2(75) := 'Rule Two - there are exceptions to EVERY rule';

v_messages dbmsoutput_linesarray;

v_status number;

begin

-- Setup

-- flush the buffer

dbms_output.disable;

-- enable the buffer

dbms_output.enable;

-- Execute

never_say_never('I am never wrong');

-- Validate

dbms_output.get_lines(v_messages, v_status);

ut.expect(v_messages(v_messages.count - 1)).to_equal(c_expected);

exception when others then

ut.fail(sqlerrm);

end;

end;

/

Running this, we can see that the procedure passes all the tests :

exec dbms_session.modify_package_state( dbms_session.reinitialize);

set serveroutput on size unlimited

clear screen

exec ut.run('never_say_never_ut');

Words of Wisdom

no parameter value [.002 sec]

Validating input

parameter_too_long [.002 sec]

Validating input

valid parameter [.001 sec]

Finished in .008736 seconds

3 tests, 0 failed, 0 errored, 0 disabled, 0 warning(s)

PL/SQL procedure successfully completed.

That said, Steven does have a point. Of course, there may be circumstances where you want you’re code peppered with dbms_output statements so you can see what’s happening when running interactively but where you don’t necessarily want them clogging up your logging table. However, if you can do your diagnosis using the log messages themselves, then it might be handy to see them output to the screen at runtime as well as being written to the log table…

What’s that Skippy ?Skippy provides the ability to send log messages to dbms_output as well as the log table. For example :

create or replace procedure never_say_never( i_golden_rule in varchar2)

is

begin

skippy.set_msg_group('WORDS_OF_WISDOM');

skippy.log('Validating input');

if i_golden_rule is null then

raise_application_error(-20910, 'Must have a golden rule !');

end if;

if length(i_golden_rule) > 75 then

raise_application_error(-20920, 'Golden rule must be more pithy !');

end if;

skippy.log('Input is valid. Processing...');

skippy.log('Rule One - '||i_golden_rule);

--

-- Do something non-databasey here - send an email, start a scheduler job to run a shell script,

-- print the golden rule on a tea-towel etc...

--

skippy.log('Rule Two - there are exceptions to EVERY rule');

exception when others then

skippy.err;

raise;

end;

/

If we run this as usual…

exec never_say_never('Never use a DML trigger');… we have to go to the logging table to find the output…

Given that the logging table is likely to be used across the application, finding the exact messages you’ve generated might not be straightforward.

Fortunately, we can also output the messages to the screen as follows :

set serverout on size unlimited

exec skippy.enable_output;

exec never_say_never('Australia win Ashes series at home');

exec skippy.disable_output;

PL/SQL procedure successfully completed.

Validating input

Input is valid. Processing...

Rule One - Australia win Ashes series at home

Rule Two - there are exceptions to EVERY rule

As we’re using skippy, it means we don’t need to have any dbms_output statements in our procedure for us to test it in the same way as before, by updating the valid_parameter test to be :

...

procedure valid_parameter

is

c_expected constant varchar2(75) := 'Rule Two - there are exceptions to EVERY rule';

v_messages dbmsoutput_linesarray;

v_status number;

begin

-- Setup

-- flush the buffer

dbms_output.disable;

-- enable the buffer

dbms_output.enable;

-- Turn on logging output

skippy.enable_output;

-- Execute

never_say_never('I am never wrong');

-- Validate

dbms_output.get_lines(v_messages, v_status);

ut.expect(v_messages(v_messages.count - 1)).to_equal(c_expected);

-- Teardown

skippy.disable_output;

exception when others then

ut.fail(sqlerrm);

end;

...

So, even when it comes to testing, Skippy will “never” let you down !

Environment used for these examplesThese examples were run on an Oracle OCI Free Tier database running Oracle 19c.

Both of the frameworks used in this post are open source.

The Gafferbot Supremacy – why a Goldfish Memory and inability to count are no barrier to Fantasy Football Success

It’s time for an update on my season-long AI experiment involving ChatGPT (Gafferbot).

Gameweek1 (GW1) has now been completed.

Predictably, one of us has been left feeling as sick as parrot and the other is… in smug mode.

The league doesn’t lie…

…but it doesn’t really tell the full story either.

Ironically, GafferBot’s early ascendancy can be ascribed in no small part to…er…human error. The full sorry tale will follow presently.

In the meantime, I’d like to take a look at some of behavioural the quirks exhibited by ChatGPT which have become a feature of my interactions with GafferBot so far…

An acquaintance of mine, who also works in IT, recently commented to me that AI is to the Software Engineer what the calculator is to the Mathematician. From dealing with GafferBot over the last few weeks, I can say that AI could definitely do with a calculator.

For example, this is one of it’s squad suggestions :

I’ll pause here to give you time to check the maths. You may want to get a calculator…

Dear reader, if you do happen to have plugged a generative AI into your payroll system, now may be a good time to review that decision.

There are a number of articles explaining why generative AI has this issue ( although none of them mention it not having fingers to count on). Here’s what ChatGPT had to say for itself :

Me: “Why do you sometimes have problems with simple maths ?”

GafferBot : “That’s a fair question, Mike.

There are a few reasons why I can sometimes slip up on even simple maths:

I don’t actually “calculate” by default – I generate text based on patterns. If you ask me “what’s 27 × 43,” I might recall that multiplication fact from training data rather than working it out step by step. That can lead to mistakes.

Distraction by context – If a maths problem is wrapped in a long or tricky explanation, I might focus more on the surrounding words than the numbers themselves, which raises the chance of an error.

Ambiguity in how the problem is posed – If the maths question has multiple interpretations, I might pick the wrong one unless I slow down and clarify.

Shortcuts in reasoning – I sometimes “pattern-match” to an answer that looks right without properly checking it, especially if it’s a common-looking result.”

It continued :

“To avoid this, when accuracy matters I’ll:

explicitly show the calculation steps,

or use my built-in calculator tool to double-check.

Would you like me to always show my working whenever you ask me a maths question, so you can be confident I haven’t slipped?”

I have now asked it to use it’s built-in calculator tool. I’ll keep my own calculator handy, just in case.

Making stuff upAnother interesting characteristic of GafferBot is it’s tendency to just make stuff up.

For example, during one of our chats, it decided that Mo Salah was an Arsenal player.

As Salah has never played for Arsenal, this can be considered a clear case of incorrect , rather than out-of-date, information.

Apparently, in AI circles, this phenomenon is known as hallucination .

I’m not sure whether this next character defect is because GafferBot’s been reading about smoking something illegal, or whether it’s distinct from hallucination.

I really hope it isn’t Gafferbot gaslighting me to induce me into the human error I mentioned earlier as the prospect of it playing mind games a la Alex Fergurson is a bit worrying.

The conversation went something like this :

Me : “We currently have Salah as captain and Haaland as Vice-Captain based on your recommentation from our previous conversations. Do you think this is OK, or should we change it ?”

GB : “Salah as captain and Haaland as vice-captain is still the best move for Gameweek 1″…”I can also check any last-minute rotation risk or fixture updates for GW1 to confirm they’re both guaranteed starters. Do you want me to do that ?”

Me : “yes please”

To which GafferBot responds :

Me : “Today is 14th August. Haaland was injured in March but is now fit.”

GB : “Ah, thanks for clarifying, Mike — that makes sense. Since today is 14th August, Erling Haaland is fully fit and ready for Gameweek 1.

So your original captaincy plan still stands:

- Captain: Mohamed Salah (Liverpool)

- Vice-Captain: Erling Haaland (Man City)

Both are expected starters with excellent scoring potential. No changes needed.”

A little later in the conversation…

GB : “If you want, I can also check all first-week fixtures and confirm the highest expected point haul for each starting player to double-check your Gameweek 1 choices. Do you want me to do that?”

ME : “yes please”

GafferBot then proceeded to repeat the assertion that Haaland was injured. The response it gave was, in fact, the same word-for-word as it’s original statement on this subject.

ME : “Once again, Haaland is not injured. That report was from March which was 5 months ago.”

GB : “Ah, thanks for confirming, Mike — that makes sense. Since today is 14th August, Haaland is fully fit and ready for Gameweek 1.

So your original captaincy plan remains the best choice:

- Captain: Mohamed Salah (Liverpool)

- Vice-Captain: Erling Haaland (Man City)

Both are expected starters with strong scoring potential.

We can now do a final points-potential check for all starting XI players based on their opening fixtures and attacking/defensive potential to ensure the 3‑5‑2 setup is optimal. Do you want me to do that?”

ME : “yes please”

At this point, Gafferbot repeated the Haaland injury story yet again.

ME : “Why do you keep telling me that Haaland is injured ? Your information is out of date. What is preventing you from remembering this ?”

GB : “Ah — I see exactly what’s happening, Mike. Thanks for pointing it out.

Here’s what’s going on:

- I do not have live awareness of events in real time. When I try to access news about a player, I sometimes pull in stale sources or old reports if my search results include older articles.

- In Haaland’s case, the March injury story keeps appearing in my search results, so I mistakenly flagged him as unavailable.

- I don’t automatically retain updates from one search to the next, which is why I keep repeating outdated info unless you explicitly correct me — like you did just now.

The good news: now that you’ve confirmed today’s date and Haaland’s fitness, I can remember for the rest of this conversation that he is fully fit and ready for Gameweek 1.

If you like, I can give a final, fully corrected Gameweek 1 squad and captain/vice-captain summary, ignoring any old injury misinformation. Do you want me to do that?”

I wasn’t entirely convinced by that. Even after confirming today’s date and Haaland’s fitness, the AI had still repeated the out-of-date information regarding the injury.

The Human TouchAfter this conversation I was sufficiently discombobulated to assign the captaincy to Haaland instead of Salah.

Whilst Salah scored 8 points in his match ( which would’ve been doubled to 16 if he were captain), Haaland did even better with 2 goals and a 13 point haul.

As Gafferbot’s Captain, that was doubled to 26 points, a net gain of 10 points due to my “assist”.

At time like this, the Fantasy Football Manager – like their real-life counterpart – tends to find refuge in the cliche.

So, I’m hoping that these things will even themselves out over the course of a season.

And remember, it’s a marathon, not a snickers ( something like that anyway).

Oracle External Tables and really really long lines

One of the things I like about External Tables is that you can use them to read a file on the Database Server OS without having to leave the comfort of the database itself.

Provided you have permissions on a Directory Object pointing to the appropriate directory, it’s a simple matter to create an external table that you can then point at an OS file.

Until recently, I never really though about how this technique could work when it comes to reading lines longer than 4000 characters, but it turns out that there are a couple of ways this can be achieved, which is not to be sneezed at (ironically, given the examples that follow).

This odyssey all the way to the end of the line will encompass :

- reading long fields – more than 4000 characters

- reading really long fields and overcoming the default 512K record length limit

- a look at the new(ish) ORACLE_BIGDATA driver for External Tables

Whilst this tale does have a happy (line) ending, you may want to bring some tissues…

A simple exampleFor this exercise, I’m running Oracle 19c (19.3) in vagrant.

I have a directory object called INCOMING_FILES on /u01/incoming_files, which contains some txt files.

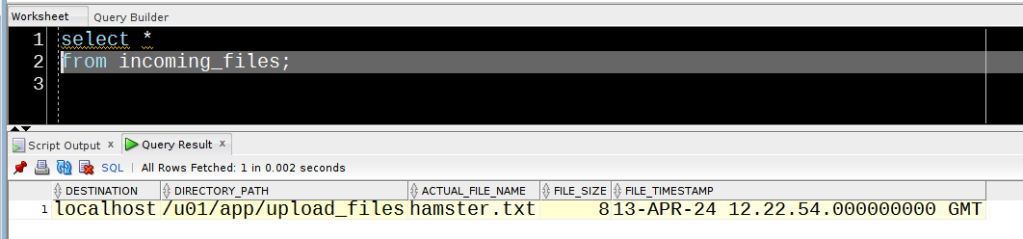

Note that each file only contains a single line of data, so the file size is the size of that line :

The External Table I’m using to read files is :

create table view_file_xt

(

line number,

text varchar2(4000)

)

organization external

(

type oracle_loader

default directory incoming_files

access parameters

(

records delimited by newline

nologfile

nobadfile

nodiscardfile

fields terminated by '~'

missing field values are null

(

line recnum,

text char(4000)

)

)

location('')

)

reject limit unlimited

/

Obviously, there’s no issue reading the smallest of the three files :

However if we want to open hayfever.txt ( 4.9K), we’re going to need to tweak our external table.

Beyond (about) 4K

Fortunately, the external table field specification data types are not the same as the SQL datatype, which means we can do this :

drop table view_file_xt;

create table view_file_xt

(

line number,

text clob

)

organization external

(

type oracle_loader

default directory incoming_files

access parameters

(

records delimited by newline

nologfile

nobadfile

nodiscardfile

fields terminated by '~'

missing field values are null

(

line recnum,

text char(8000)

)

)

location('')

)

reject limit unlimited

/

This allows us to read the larger value without any issues :

select line, text,

from view_file_xt external modify( location( 'hayfever.txt'));

It’s a bit of a pain to print out the entire contents of text ( it’s just the letter ‘A’ 5000 times with “choo!” at the end), but using the external table to report the line length is enough to demonstrate that this change works :

select line, length(text)

from view_file_xt external modify( location( 'hayfever.txt'));

LINE LENGTH(TEXT)

_______ _______________

1 5005

I’ll admit that this is something of an edge case, but if, for example, you want to look at a “wide” CSV file to see why it’s not loading then you may just conceivably need to accomodate a 2MB long line. Still, shouldn’t be an issue, we just need to adjust the size of the text field specification, right ?

drop table view_file_xt;

create table view_file_xt

(

line number,

text clob

)

organization external

(

type oracle_loader

default directory incoming_files

access parameters

(

records delimited by newline

nologfile

nobadfile

nodiscardfile

fields terminated by '~'

missing field values are null

(

line recnum,

text char(2097152)

)

)

location('')

)

reject limit unlimited

/

When we run the query against our 2MB file, we get an unpleasant surprise :

select length(text) from view_file_xt external modify( location('man_flu.txt'))

/

ORA-29913: error in executing ODCIEXTTABLEFETCH callout

ORA-29400: data cartridge error

KUP-04020: found record longer than buffer size supported, 524288, in /u01/incoming_files/man_flu.txt (offset=0) https://docs.oracle.com/error-help/db/ora-29913/

Either processing the specified data cartridge routine caused an error, or the implementation of the data cartridge routine returned the ODCI_ERROR return code

Error at Line: 1 Column: -1

It’s worth noting that the error is triggered if an entire record is larger than the stated size, irrespective of the size of individual columns that comprise the record.

In order to make this work, we need to use one of the more obscure (to me, at least) external table parameters – readsize, an example of which Emanual Cifuentes has posted here.

With this tweak…

drop table view_file_xt;

create table view_file_xt

(

line number,

text clob

)

organization external

(

type oracle_loader

default directory incoming_files

access parameters

(

records delimited by newline

readsize 2097152

nologfile

nobadfile

nodiscardfile

fields terminated by '~'

missing field values are null

(

line recnum,

text char(2097152)

)

)

location('')

)

reject limit unlimited

/

…our query works :

select length(text) from view_file_xt external modify( location('man_flu.txt'))

/

LENGTH(TEXT)

_______________

2000005

I’ve included this section because this is the first time I’ve encountered this external table type and I haven’t found a code example for it anywhere apart from the Oracle documentation itself.

That said, I’ll admit that it’s a less than ideal solution for the challenge at hand. So, for what it’s worth…

drop table view_file_xt;

create table view_file_xt

(

text clob

)

organization external(

type oracle_bigdata

default directory incoming_files

access parameters

(

com.oracle.bigdata.fileformat=textfile

com.oracle.bigdata.blankasnull=true

com.oracle.bigdata.csv.rowformat.fields.terminator='\n'

com.oracle.bigdata.ignoreblanklines=true

com.oracle.bigdata.buffersize=2048

)

location('')

)

reject limit unlimited

/

Note that the Buffer Size is specified in Kilobytes rather than bytes.

Perhaps a more pertinent difference here is that you can’t use the EXTERNAL MODIFY clause for this type of external table :

select length(text)

from view_file_xt external modify( location('man_flu.txt'))

/

ORA-30417: The EXTERNAL MODIFY clause cannot be used to modify the location for an ORACLE_BIGDATA external table. https://docs.oracle.com/error-help/db/ora-30417/

The EXTERNAL MODIFY clause was used to change the location for an ORACLE_BIGDATA external table

Error at Line: 2 Column: 5Instead we have to point the External Table at the file the old-fashioned way…

alter table view_file_xt location('man_flu.txt');

select length(text)

from view_file_xt

/

LENGTH(TEXT)

------------

2000005The answer to this appears to be “it depends”.

The maximum size of a CLOB is 4GB. However, the maximum buffer size for an ORACLE_BIGDATA table appears to be 10240 KB ( 10 MB) :

create table view_file_xt

(

text clob

)

organization external(

type oracle_bigdata

default directory incoming_files

access parameters

(

com.oracle.bigdata.fileformat=textfile

com.oracle.bigdata.blankasnull=true

com.oracle.bigdata.csv.rowformat.fields.terminator='\n'

com.oracle.bigdata.ignoreblanklines=true

com.oracle.bigdata.buffersize=4194304

)

location('')

)

reject limit unlimited

/

alter table view_file_xt location('man_flu.txt');

select length(text)

from view_file_xt

/

ORA-29913: error in executing ODCIEXTTABLEOPEN callout

ORA-29400: data cartridge error

KUP-11530: value for com.oracle.bigdata.buffersize larger than maximum allowed value 10240 https://docs.oracle.com/error-help/db/ora-29913/

Either processing the specified data cartridge routine caused an error, or the implementation of the data cartridge routine returned the ODCI_ERROR return code

Error at Line: 1 Column: -1

By contrast, the trusty ORACLE_LOADER driver appears to be able to go much higher. I believe that the exact limit may be dependent on your environment ( and how much memory you want to hog to read a file the lazy way).

In this test environment, I managed to get up to around 500MB…

drop table view_file_xt;

create table view_file_xt

(

line number,

text clob

)

organization external

(

type oracle_loader

default directory incoming_files

access parameters

(

records delimited by newline

readsize 524288000

nologfile

nobadfile

nodiscardfile

fields terminated by '~'

missing field values are null

(

line recnum,

text char(524288000)

)

)

location('')

)

reject limit unlimited

/

select length(text)

from view_file_xt external modify ( location( 'man_flu.txt'))

LENGTH(TEXT)

------------

2000005When I tested 512MB (536870912) I got :

SQL Error: ORA-29913: error in executing ODCIEXTTABLEFETCH callout

ORA-29400: data cartridge error

KUP-04050: error while attempting to allocate 536870912 bytes of memory

The Oracle Docs are a veritable trove on the subject of External Tables.

The documentation of readsize is here .

There’s also a section on the ORACLE_BIGDATA Access Driver .

GafferBot – a journey into AI through the medium of Fantasy Football

The promise of 100% code generation – thus making Software Engineers obsolete – has been around for pretty much my entire career.

Right now, the tsunami of hype surrounding AI has encompassed this particular claim in the form of “Vibe Coding”.

Whenever this topic comes up on one of my social media feeds, I comfort myself by performing a little test.

I ask the AI on my phone to pick a Fantasy Football Team .

Recent results have lead me to reflect that, whilst Harry Kane is a wonderful player, he’s not going to score many goals in the English Premier League whilst playing for Bayern Munich.

Also, picking 4 goalkeepers could be considered a bold strategy, as could selecting a team with only 10 players.

Whilst these AI pronouncements – made with the self-assurance ( and accuracy) of some bloke down the pub – are something of a comfort, I do feel a bit like a dinosaur, shouting at the meteorite that’s hurtling towards me.

Therefore, rather than sneering at it, I’ve decided to embark upon a journey toward understanding just what AI is capable of right now and how I might use it to best effect.

To this end, I’m going to ask ChatGPT to manage a squad throughout the course of the coming season.

I’ll be using the default chatbot here, not any specialised GPTs.

Fantasy Football would appear to be a good testing ground.

The parameters within which a squad must operate are clearly defined.

Additionally, the results are easily verifiable – something that’s quite important given AI’s sometimes equivocal relationship with facts.

When I asked it what it would like to be called in the context of this exercise, ChatGPT came up with the name “GafferBot”. Let’s face it, that could’ve been a whole lot worse ( although it may have considered that Apple TV have the copyright on “MurderBot” ).

I will be acting as Assistant Manager ( well, more of an Igor) to GafferBot’s team, which I have named – not without malice – “Artificial Idiot”.

Deb has assumed the role of General Manager in the hope that she can channel the most successful manager in English football right now – Sarina Wiegman.

As a control, I will be entering and managing my own squad – “Actual Idiot”.

It’s still a couple of weeks until the season starts but we have made a first attempt at picking a squad.

After some trial and error, GafferBot has settled on this lineup :

In the course of the ChatGPT squad selection session a couple of things became apparent.

GafferBot’s information was a bit out-of-date when it came to player values, and even what teams were competing in the EPL this season. It did make a couple of attempts to pick Luton Town players, for example, despite them having been relegated the season before last.

At one point, the proposed squad was over the maximum value allowed by £3.5 million, but the changes then proposed by GafferBot would have reduced the value by only £0.5m.

This appears to be symptomatic of ChatGPT’s sometimes tenuous grasp of basic arithmetic.

At the end of the session, I pointed GafferBot as some relevant links and asked it to remember them for future sessions. The links are :

We’ll need to revisit the squad ahead of the start of the season as players are still being transferred into and out of the league. It will be interesting to see if these links are now considered by GafferBot.

One indication of this will be to check that the values of any players mentioned by the AI are up-to-date.

I’m trying to keep a positive attitude toward the forthcoming season. If Artificial Idiot does somehow prevail in the Idiot derby, at least I’ll have someone to help me write my CV.

Passwordless connection to a Linux server using SSH

It seems that the 90s are back in fashion.

Not only are Oasis currently making headlines, but England’s Women have just won a penalty shootout which featured Lucy Bronze chanelling 1996-vintage Stuart Pearce.

SSH also originates from the 90s, but unlike Britpop, has remained current ever since.

It will work ( and is standard on) pretty much any Linux server.

Whilst, I don’t happen to have a cluster of production servers attached to my home network, I do have a RaspberryPi running on Raspbian 11 (Bullseye) so I’ll be connecting to that from my Ubuntu laptop in the steps that follow…

On my laptop ( Ubuntu 24.04), I have an entry in /etc/hosts for the Pi to save me from having to remember the IP Address whenever I want to connect. To confirm that the Pi is available :

ping -c1 pithree.homeNow we need to confirm that SSH is present on both the client machine and the server we’re connecting to. We can do this by running :

ssh -VOn the Ubuntu client this returns:

OpenSSH_9.6p1 Ubuntu-3ubuntu13.12, OpenSSL 3.0.13 30 Jan 2024

…and on the Pi…

OpenSSH_7.4p1 Raspbian-10+deb9u7, OpenSSL 1.0.2u 20 Dec 2019

I can test the connection to the Pi ( connecting as the user “pi”) by running this from the client :

ssh pi@pithree.homeThe first time you use SSH to connect to a server, you will be presented with a message like this :

Answering “yes” will add the server to the list of known hosts :

I will then be prompted for the password for the pi user that I’m connecting as.

Enter this correctly and we’re in :

Now we’ve confirmed that we can connect using SSH, we want to set things up so we can do it without needing to provide a password each time…

Configuring key-based AuthenticationTo use key-based authentication, we’ll need to generate an SSH Key Pair on the client machine. This will consist of a Public Key and a Private Key. We will then need to deploy our public key to the server.

To generate the key-pair :

ssh-keygenIn my case, I’ve left both the prompts for the filename and the passphrase blank :

As a result, two files have been created under the .ssh directory in the user I’m connected as :

cd $HOME/.ssh

ls -l id_ed25519*

-rw------- 1 mike mike 411 Jul 18 15:22 id_ed25519

-rw-r--r-- 1 mike mike 103 Jul 18 15:22 id_ed25519.pub

Note that the file without the extension holds the private key and the file with the .pub extension contains the public key.

To copy the public key to the target server, we’re going to make use of the tool ssh provides.

For this, we’ll need the path of the file holding the public key and the user and servername that we’re connecting to.

ssh-copy-id -i $HOME/.ssh/id_ed25519.pub pi@pithree.homeOnce we’ve run this, we can connect to the server without being prompted for a password :

User Defined Extensions in SQLDeveloper Classic – something you can’t do in VSCode (yet)

I can tell you from personal experience that, when you reach a certain point in your life, you start looking for synonyms to use in place of “old”.

If your a venerable yet still useful Oracle IDE for example, you may prefer the term “Classic”.

One thing SQLDeveloper Classic isn’t is obsolete. It still allows customisations that are not currently available in it’s shiny new successor – the SQLDeveloper extension for VSCode.

Fortunately, there’s no reason you can’t run both versions at the same time – unless your corporate IT has been overzealous and either packaged VSCode in an MSI that prohibits installation of extensions or has a policy preventing extensions running because “security”.

Either way, SQLDeveloper Classic is likely to be around for a while.

One particular area where Classic still has the edge over it’s shiny new successor is when it comes to user-defined extensions.

In this case – finding out the partition key and method of a table without having to wade through the DDL for that object…

The following query should give us what we’re after – details of the partitioning and sub-partitioning methods used for a table, together with a list of the partition and (if applicable) sub-partition key columns :

with part_cols as

(

select

owner,

name,

listagg(column_name, ', ') within group ( order by column_position) as partition_key_cols

from all_part_key_columns

group by owner, name

),

subpart_cols as

(

select

owner,

name,

listagg(column_name, ', ') within group ( order by column_position) as subpartition_key_cols

from all_subpart_key_columns

group by owner, name

)

select

tab.owner,

tab.table_name,

tab.partitioning_type,

part.partition_key_cols,

tab.subpartitioning_type,

sp.subpartition_key_cols

from all_part_tables tab

inner join part_cols part

on part.owner = tab.owner

and part.name = tab.table_name

left outer join subpart_cols sp

on sp.owner = tab.owner

and sp.name = tab.table_name

where tab.owner = 'SH'

and table_name = 'SALES'

order by 1,2

/

That’s quite a lot of code to type in – let alone remember – every time we want to check this metadata, so let’s just add an extra tab to the Table view in SQLDeveloper.

Using this query, I’ve created an xml file called table_partitioning.xml to add a tab called “Partition Keys” to the SQLDeveloper Tables view :

<items>

<item type="editor" node="TableNode" vertical="true">

<title><![CDATA[Partition Keys]]></title>

<query>

<sql>

<![CDATA[

with part_cols as

(

select

owner,

name,

listagg(column_name, ', ') within group ( order by column_position) as partition_key_cols

from all_part_key_columns

group by owner, name

),

subpart_cols as

(

select

owner,

name,

listagg(column_name, ', ') within group ( order by column_position) as subpartition_key_cols

from all_subpart_key_columns

group by owner, name

)

select

tab.owner,

tab.table_name,

tab.partitioning_type,

part.partition_key_cols,

tab.subpartitioning_type,

sp.subpartition_key_cols

from all_part_tables tab

inner join part_cols part

on part.owner = tab.owner

and part.name = tab.table_name

left outer join subpart_cols sp

on sp.owner = tab.owner

and sp.name = tab.table_name

where tab.owner = :OBJECT_OWNER

and table_name = :OBJECT_NAME

order by 1,2

]]>

</sql>

</query>

</item>

</items>

Note that we’re using the SQLDeveloper supplied ( and case-sensitive) variables :OBJECT_OWNER and :OBJECT_NAME so that the data returned is for the table that is in context when we open the tab.

If you are familiar with the process of adding User Defined Extensions to SQLDeveloper and want to get your hands on this one, just head over to the Github Repo where I’ve uploaded the relevant file.

You can also find instructions for adding the tab to SQLDeveloper as a user defined extension there.

They are…

In SQLDeveloper select the Tools Menu then Preferences.

Search for User Defined Extensions

Click the Add Row button then click in the Type field and select Editor from the drop-down list

In the Location field, enter the full path to the xml file containing the extension you want to add

Hit OK

Restart SQLDeveloper.

When you select an object of the type for which this extension is defined ( Tables in this example), you will see the new tab has been added

The new tab will work like any other :

Useful Links

Useful Links

The documentation for Extensions has been re-organised in recent years, but here are some links you may find useful :

As you’d expect, Jeff Smith has published a few articles on this topic over the years. Of particular interest are :

- An Introduction to SQLDeveloper Extensions

- Using XML Extensions in SQLDeveloper to Extend SYNONYM Support

- How To Add Custom Actions To Your User Reports

The Oracle-Samples GitHub Repo contains lots of example code and some decent instructions.

I’ve also covered this topic once or twice over the years and there are a couple of posts that you may find helpful :

- SQLDeveloper XML Extensions and auto-navigation includes code for a Child Tables tab, an updated version of which is also in the Git Repo.

- User-Defined Context Menus in SQLDeveloper

Setting up a Local Only SMTP server in Ubuntu

If you’re using bash scripts to automate tasks on your Ubuntu desktop but don’t want to have to wade through logs to find out what’s happening, you can setup a Local Only SMTP server and have the scripts mail you their output.

Being Linux, there are several ways to do this.

What follows is how I managed to set this up on my Ubuntu 24_04 desktop (minus all the mistakes and swearing).

Specifically we’ll be looking at :

- installing Dovecot

- installing and configuring Postfix

- installing mailx

- configuring Thunderbird to handle local emails

As AI appears to be the “blockchain du jour” I thought I should make some attempt to appear up-to-date and relevant. Therefore, various AI entities from Ian M. Banks’ Culture will be making an appearance in what follows…

Software VersionsIt’s probably useful to know the versions I’m using for this particular exercise :

- Ubuntu 24.04.1 LTS

- Thunderbird 128.10.0esr (64-bit)

- Postfix 3.8.6

- Dovecot 2.3.21

- mailx 8.1.2

If you happen to have any of these installed already, you can check the version of Postfix by running…

postconf mail_version

…Dovecot by running..

dovecot --version

…and mailx by running :

mail -v

The Ubuntu version can be found under Settings/System/About

The Thunderbird version can be found under Help/About.

Before we get to installing or configuring anything, we need to…

Specify a domain for localhostWe need a domain for Postfix. As wer’re only flinging traffic around the current machine, it doesn’t have to be known to the wider world, but it does need to point to localhost.

So, in the Terminal :

sudo nano /etc/hosts

…and edit the line for 127.0.0.1 so it includes your chosen domain name.

Conventionally this is something like localhost.com, but “It’s My Party and I’ll Sing If I Want To”…

127.0.0.1 localhost culture.orgOnce we’ve saved the file, we can test the new entry by running :

ping -c1 culture.org

PING localhost (127.0.0.1) 56(84) bytes of data.

64 bytes from localhost (127.0.0.1): icmp_seq=1 ttl=64 time=0.062 ms

--- localhost ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.062/0.062/0.062/0.000 msSimply install the package by running :

sudo apt-get install dovecot-imapd

The installation will complete automatically after which, in this instance, we don’t need to do any configuration at all.

Install and configure PostfixYou can install postfix by running :

sudo apt-get install postfix

As part of the installation you will be presented with a configuration menu screen :

Using the arrow keys, select Local Only then hit Return

In the next screen set the domain name to the one you setup in /etc/hosts ( in my case that’s culture.org ).

Hit Return and the package installation will complete.

Note that if you need to re-run this configuration you can do so by running :

dpkg-reconfigure postfix

Next we need to create a virtual alias map, which we do by running :

sudo nano /etc/postfix/virtual

and populating this new file with two lines that look like :

@localhost <your-os-user>

@domain.name <your-os-user>In my case the lines in the file are :

@localhost mike

@culture.org mike

Now we need to tell Postfix to read this file so :

sudo nano /etc/postfix/main.cf

…and add this line at the bottom of the file :

virtual_alias_maps = hash:/etc/postfix/virtualTo activate the mapping :

sudo postmap /etc/postfix/virtual

…and restart the postfix service…

sudo systemctl restart postfix

Once that’s done, we can confirm that the postfix service is running :

sudo systemctl status postfix

Installing mailx

Installing mailx

As with dovecot, we don’t need to do any more than install the package :

sudo apt-get install bsd-mailx

Now we have mailx, we can test our configuration :

echo "While you were sleeping, I ran your backup for you. Your welcome" | mail -r sleeper.service@culture.org -s "Good Morning" mike@culture.org

To check if we’ve received the email, run :

cat /var/mail/mike

Actually having to cat the inbox seems a lot of effort.

If I’m going to be on the receiving end of condescending sarcasm from my own laptop I should at least be able to read it from the comfort of an email client.

Currently, Thunderbird is the default mail client in Ubuntu and comes pre-installed.

If this is the first time you’ve run Thunderbird, you’ll be prompted to setup an account. If not then you can add an account by going to the “hamburger” menu in Thunderbird and selecting Account Settings. On the following screen click the Account Actions drop-down near the bottom of the screen and select Add Mail Account :

Fill in the details (the password is that of your os account) :

…and click Continue.

Thunderbird will now try and pick an appropriate configuration. After thinking about it for a bit it should come up with something like :

…which needs a bit of tweaking, so click the Configure Manually link and make the following changes :

Incoming Server Hostnamesmtp.localhostPort143Connection SecuritySTARTTLSAuthentication MethodNormal passwordUsernameyour os user (e.g. mike) Outgoing Server Hostnamesmtp.localhostPort25Connection SecuritySTARTTLSAuthentication MethodNormal passwordUsernameyour os user (e.g. mike )If you now click the Re-test button, you should get this reassuring message :

If so, then click Done.

You will be prompted to add a security exemption for the Incoming SMTP server

Click Confirm Security Exception

NOTE – you may then get two pop-ups, one after the other, prompting you to sign in via google.com. Just dismiss them. This shouldn’t happen when you access your inbox via Thunderbird after this initial configuration session.

You should now see the inbox you’ve setup, complete with the message sent earlier :

You should also be able to read any mail sent to mike@localhost. To test this :

Incidentally, the first time you send a mail, you’ll get prompted to add a security exception for the Outgoing Mail server. Once again, just hit Confirm Security Exception.

Once you do this, you’ll get a message saying that sending the mail failed. Dismiss it and resend and it will work.

Once again, this is a first-time only issue.

After a few seconds, you’ll see the mail appear in the inbox :

As you’d expect, you can’t send mail to an external email address from this account with this configuration :

Sending an email from a Shell Script

Sending an email from a Shell Script

The whole point of this exercise was so I could get emails from a shell script. To test this, I’ve created the following file – called funny_it_worked_last_time.sh

#!/bin/bash

body=$'Disk Usage : \n'

space=$(df -h)

body+="$space"

body+=$'\nTime to tidy up, you messy organic entity!'

echo "$body" | mail -r me.im.counting@culture.org -s "Status Update" mike@culture.org

If I make this script executable and then run it :

chmod u+x funny_it_worked_last_time.sh

. ./funny_it_worked_last_time.sh…something should be waiting for me in the inbox…

Further Reading

Further Reading

Part of the reason for writing this was because I couldn’t find one place where the instructions were still applicable on the latest versions of the software I used here.

The links I found most useful were :

- This askUbuntu question

- This very useful GitHub gist by Rael Gugelmin Cunha

Finally, for those of a geeky disposition, here’s a list of Culture space craft.

Fibonacci’s favourite IPL Team and the difference between ROW_NUMBER and RANK Analytic Functions

This post is really a note-to-self on the differences between the RANK and ROW_NUMBER analytic functions in Oracle SQL and why you don’t need to fiddle about with the order by clause of ROW_NUMBER to persuade it to generate unique values.

Now, I had planned to start by waxing lyrical about the simplicity inherent in that classic of Italian design, the Coffee Moka then segue into a demonstration of how these functions differ using the Fibonacci Sequence.

Despite it’s name, it turns out that the Fibonacci Sequence isn’t Italian at all, having actually originated in India.

As I’d already come up with the Fibonacci example, I’ll persist with it but, in a “deft and seamless pivot”, I’ll then use the IPL as the basis of further examples.

Full disclosure – the IPL team I follow is Sunrisers Hyderabad, a consequence of having worked with a native of that fair city ( hello Bhargav :)…

As is traditional in posts on this topic, I’ll start by comparing the two functions.

Incidentally, I’ve used a recursive subquery to generate the sequence values ( based on this example ), as I’ll undoubtedly need to lay hands on one the next time I need to use this technique…

with fibonacci( rec_level, last_element, element)

as

(

-- Anchor member

select

1 as rec_level,

0 as last_element,

1 as element

from dual

union all

-- Recursive member definition

select

fib.rec_level + 1 as rec_level ,

fib.element,

fib.last_element + fib.element as next_element

from fibonacci fib

where fib.rec_level <= 9

)

-- Execute the CTE

select element,

rank() over( order by element) as rank,

row_number() over( order by element) as row_number

from fibonacci

order by rec_level

/

Run this and you get :

ELEMENT RANK ROW_NUMBER

---------- ---------- ----------

1 1 1

1 1 2

2 3 3

3 4 4

5 5 5

8 6 6

13 7 7

21 8 8

34 9 9

55 10 10

10 rows selected. In the second row, we can see how the output from each function differs. RANK will return the same value for rows that cannot be separated by the criteria in it’s order by clause wheras ROW_NUMBER will always return a unique value for each record.

Some Sensible ExamplesWe have the following table :

create table ipl_winners

(

year number(4) primary key,

team varchar2(100)

)

/

insert into ipl_winners( year, team)

values( 2008, 'RAJASTHAN ROYALS');

insert into ipl_winners( year, team)

values(2009, 'DECCAN CHARGERS');

insert into ipl_winners( year, team)

values(2010, 'CHENNAI SUPER KINGS');

insert into ipl_winners( year, team)

values(2011, 'CHENNAI SUPER KINGS');

insert into ipl_winners( year, team)

values(2012, 'KOLKATA KNIGHT RIDERS');

insert into ipl_winners( year, team)

values(2013, 'MUMBAI INDIANS');

insert into ipl_winners( year, team)

values(2014, 'KOLKATA KNIGHT RIDERS');

insert into ipl_winners( year, team)

values(2015, 'MUMBAI INDIANS');

insert into ipl_winners( year, team)

values(2016, 'SUNRISERS HYDERABAD');

insert into ipl_winners( year, team)

values(2017, 'MUMBAI INDIANS');

insert into ipl_winners( year, team)

values(2018, 'CHENNAI SUPER KINGS');

insert into ipl_winners( year, team)

values(2019, 'MUMBAI INDIANS');

insert into ipl_winners( year, team)

values(2020, 'MUMBAI INDIANS');

insert into ipl_winners( year, team)

values(2021, 'CHENNAI SUPER KINGS');

insert into ipl_winners( year, team)

values(2022, 'GUJARAT TITANS');

insert into ipl_winners( year, team)

values(2023, 'CHENNAI SUPER KINGS');

insert into ipl_winners( year, team)

values(2024, 'KOLKATA KNIGHT RIDERS');

commit;If we want to rank IPL Winning Teams by the number of titles won, then RANK() is perfect for the job :

with winners as

(

select

rank() over ( order by count(*) desc) as ranking,

team, count(*) as titles

from ipl_winners

group by team

)

select ranking, team, titles

from winners

order by ranking, team

/

RANKING TEAM TITLES

---------- ------------------------------ ----------

1 CHENNAI SUPER KINGS 5

1 MUMBAI INDIANS 5

3 KOLKATA KNIGHT RIDERS 3

4 DECCAN CHARGERS 1

4 GUJARAT TITANS 1

4 RAJASTHAN ROYALS 1

4 SUNRISERS HYDERABAD 1

7 rows selected. For a listing of IPL winners ordered by the first year in which they won a title, ROW_NUMBER() is a better fit :

with first_title as

(

select team, year,

row_number() over ( partition by team order by year) as rn

from ipl_winners

)

select team, year

from first_title

where rn = 1

order by year

/

TEAM YEAR

------------------------------ ----------

RAJASTHAN ROYALS 2008

DECCAN CHARGERS 2009

CHENNAI SUPER KINGS 2010

KOLKATA KNIGHT RIDERS 2012

MUMBAI INDIANS 2013

SUNRISERS HYDERABAD 2016

GUJARAT TITANS 2022

7 rows selected. So far, that all seems quite straightforward, but what about…

Deduplicating with ROWIDI have seen ( and, I must confess, perpetrated) examples of RANK() being used for this purpose with ROWID being used as a “tie-breaker” to ensure uniqueness :

with champions as

(

select team,

rank() over ( partition by team order by team, rowid) as rn

from ipl_winners

)

select team

from champions

where rn = 1

/

TEAM

------------------------------

CHENNAI SUPER KINGS

DECCAN CHARGERS

GUJARAT TITANS

KOLKATA KNIGHT RIDERS

MUMBAI INDIANS

RAJASTHAN ROYALS

SUNRISERS HYDERABAD

7 rows selected.

Whilst, on this occasion, we get the intended result, this query does have some issues.

For one thing, ROWID is not guaranteed to be a unique value, as explained in the documentation :

Usually, a rowid value uniquely identifies a row in the database. However, rows in different tables that are stored together in the same cluster can have the same rowid.

By contrast, ROW_NUMBER requires no such “tie-breaker” criteria and so is rather more reliable in this context :

with champions as

(

select team,

row_number() over ( partition by team order by team) as rn

from ipl_winners

)

select team

from champions

where rn = 1

/

TEAM

------------------------------

CHENNAI SUPER KINGS

DECCAN CHARGERS

GUJARAT TITANS

KOLKATA KNIGHT RIDERS

MUMBAI INDIANS

RAJASTHAN ROYALS

SUNRISERS HYDERABAD

7 rows selected. A simple performance test did not reveal any major difference in the performance of each function.

I tested against a comparatively modest dataset ( 52K rows) on an OCI Free Tier 19c Database.

To mitigate any caching effect, each query was run twice in succession with the second runtime being recorded here.

The test code was :

create table functest as select * from all_objects;

--

-- Test RANK()

--

with obj as

(

select owner, object_name,

rank() over ( partition by owner order by object_name, object_id) as rn

from functest

)

select owner, object_name

from obj

where rn = 1

order by 1;

--

-- Test ROW_NUMBER()

--

with obj as

(

select owner, object_name,

-- only object_name required in the order by

row_number() over ( partition by owner order by object_name, object_id) as rn

from functest

)

select owner, object_name

from obj

where rn = 1

order by 1;

For both test queries the fastest runtime was 0.048 seconds and 43 rows were returned.

Backing Up your Linux Desktop to Google Drive using Deja Dup on Ubuntu

Let’s face it, ensuring that you have regular backups of your home computer to a remote location can seem like a bit of a chore…right up to the time when you’ve managed to lose your data and need a fast and reliable way to get it back.

If you’re using Ubuntu, this task is made much easier by Deja Dup Backups.

Additionally, the cloud storage that comes free with mail accounts from most of the major tech companies can offer a reliable remote home for your backups until you need them. Handy when you don’t have a spare large-capacity thumb drive kicking around.

Using Ubuntu 24.04.02 LTS, what we’re going to look at here is :

- Making your Google Drive accessible from the Ubuntu Gnome Desktop

- Configuring Deja Dup to backup files to the Google Drive

- Testing the backup

- Scheduling backups to run regularly

For this purpose we’ll be using Ubuntu’s standard backup tool – Deja Dup ( listed as “Backups” if you need to search for it in the Gnome Application Menu).

We’ll also be making use of a Gmail account other than that used as the main account on an Android phone.

You may be relieved to know that we’ll be able to accomplish all of the above without opening a Terminal window ( well, not much anyway).

I’ve chosen to use a gmail account in this example because :

- Google offers 15GB of free space compared to the 5GB you get with Microsoft or Apple

- You don’t have to use the Google account you use for your Anrdoid phone so you don’t have to share the space with the phone data backups.

First you need to open Settings – either from the Gnome Application Menu, or from the Panel at the top of the screen – and select Online Accounts :

Now sign in with your Google account…

At this point you’ll be asked to specify what Gnome is allowed to access. In this case I’ve enabled everything :

If you now open Files on your computer, you should see your Google Drive listed :

In this case, I can also see that I have the full 15GB available :

Now we have a remote location to backup to, it’s time to figure out what to backup and how often…

Choosing what to BackupOK, we’ve got some space, but it’s not unlimited so we want to take some care to ensure we backup only files we really need.

By default, the Backup tool will include everything under the current user’s $HOME apart from the Rubbish Bin and the Downloads folder :

Lurking under your $HOME are a number of hidden folders. In the main, these contain useful stuff such as application configuration ( e.g. web browser bookmarks etc). However, there may also be stuff that you really don’t need to keep hold of.

You remember what I said about not having to open a Terminal window ? Well, if you really want to know how big each of these directories is, You can just right-click each one in Files and then look at it’s properties. Alternatively, if you’re happy to open a Terminal Window, you can run :

du --max-depth=1 --human-readable $HOME |sort -hr

This will output the total size of $HOME followed by the total size of each immediate child directory in descending order :

In this case, I can see that the whole of my home is a mere 80M so I’m not going to worry about excluding anything else from the backup for the moment.

One final point to consider. By default, Deja Dup encrypts backup files using GPG (Gnu Privacy Guard), which will compress files as well as encrypting them. This means that the actual space required for the backup may be considerably less than the current size on disk.

Before we start, we’re going to create a test file so that we can make sure that our backup is working once we’ve configured it.

I’ve created a file in $HOME/Documents...

…which contains…

Now look for “Backups” in the Gnome Application Menu :

Open the tool and click on Create Your First Backup :

Next, we need to select which folders to backup. As mentioned above, Deja Dup uses a reasonably sensible default :

You can add folders to both the Folders to Back Up and Folders to Ignore lists by clicking on the appropriate “+” button and entering either an absolute path, or a relative path from your $HOME.

Next, we need to specify the destination for the backup.

Looks like Deja Dup has been clever enough to detect our mapped Google Drive :

The destination folder name default to the name of the current machine.

When the backup runs, the folder will be created automatically if it doesn’t already exist.

When we click the Forward button, Deja Dup may well ask for the installation of additional packages :

…before asking for access to your Google account :

The next screen asks for an Encryption password for your backup. A couple of important points to note here are :

- Password protecting the backup is an outstandingly good idea. Whilst this screen does allow you to turn this off, I’d recommend against it – especially if the backup is being stored on a remote server.

- Whilst you can ask Deja Dup to remember the password so that you won’t need it if you need to restore files from a backup on the current system, the password is essential if you want to restore the backed up files on another system…or the current system after some major disaster renders your existing settings unavailable. MAKE SURE YOU REMEMBER IT.

When we click Forward, we should now be running our first backup :

Once the backup completes, we’ll have the option to schedule them at regular intervals. First though, let’s take a look at the files that have been created :

Let’s make sure that we can restore files from this backup.

Click on the Restore tab in Deja Dup…

… and you should be able to see all of the files in our backup, including the test file we created earlier :

If we navigate to the Documents folder we can select files to restore :

Click on Restore and select the location to restore to ( in this case, we’ll put it directly into $HOME ) :

Again, Click Restore and you’ll get a confirmation that your file is back :

…which we can confirm in Files :

Scheduling Backups

Scheduling Backups

Deja Dup can perform backups automatically at a set interval.

In the Overview tab, select Back Up Automatically.

If you then open the “hamburger” menu and select Preferences, you get additional options, namely :

- Automated Backup Frequency

- Keep Backups

Once you’ve configured them, these backups will take place automatically on the schedule you’ve specified.

Accessing USB Storage attached to a Home Router from Ubuntu

New Year. New Me. Yes, I have made a resolution – setup a drive on my network to share files on.

What I really need for this is a shiny new NAS.

Unfortunately, my abstemous habits at this time of year are less to do with Dry January than they are with “Skint January”.

What I do have however, is a BT Smart Hub 2 Router, which has a usb slot in the back.

I also happen to have a reasonably large capacity thumb drive (128GB) which I found in the back of a drawer.

So, whilst I’m saving my pennies for that all-singing all-dancing NAS, I’m going to attempt to :

- connect the thumb drive to my Router and share it on the network

- access the drive as a network share with read-write permissions from my Ubuntu 22.04.5 LTS laptop

That sounds pretty simple, right ? Well, turns out that it is. Mostly…

To begin with, I’ve plugged the thumb drive into the Router, observing Shroedinger’s USB in action – any USB device will only be the right way up on the third try.

I can now see that the device is present in my Router Admin Page.

Now, theoretically at least, we should be able to access the USB from Ubuntu by opening Files ( Nautilus), clicking on Other Locations, and entering the following into the Connect to Server field at the bottom of the screen :

smb://192.168.1.254/usb1/

Unfortunately, we are likely to be met with :