Fuad Arshad

ZDLRA System Activity Report

The ZDLRA development team just released a very nifty little script, available via:

Zero Data Loss Recovery Appliance System Activity Script (Doc ID 2275176.1)

This system is supposed to be used in conjunction with Enterprise Manager and a different way of l

The script is broken down into multiple sections, and the header is very important to read and understand.

--------

Setting up Redo Transport With Standbys for ZDLRA with EM 13.2

Links for 2017-02-22 [del.icio.us]

Happy New Year

It's the start of a new year, and as with every new year it's time to prepare and start learning afresh. Oracle as a database, as a technology, and as a field is constantly evolving, from a database to a platform to a cloud provider.

Learning is at the core of what we strive to do. As technology evolves, the opportunities to learn increase.

This year I had the pleasure of immersing myself in technologies that spanned on-premises and the cloud, and I hope to continue my learning curve.

Benjamin Stotter / 500px

#ThanksOTN OTN Appreciation Day: Recovery Appliance - Database Recovery on Steroids

Recovery Appliance (RA, or ZDLRA) is something I've been very passionate about since its release, hence this very biased post on RA. Recovery Appliance is database backup and recovery on steroids. The ability to do full and incremental backups is something every product boasts, so what's special about ZDLRA? It's the ability to sleep in peace; it's the ability to know my backups are good.

To quote this 2009 article from DBTA, which was written about SQL Server:

"To summarize, data deduplication is a great feature for backing up desktops, collaboration systems, web applications, and email systems. If I were a DBA or storage administrator, however, I'd skip deduplicating databases files and backups and devote that expensive technology to the areas of my infrastructure where it can offer a strong ROI"

This notion really hasn't changed much, though de-duplication software has come a long way.

Why de-dup when you don't even send what you don't need? That's what the Recovery Appliance brings to the table: send less data and recover as a whole. No more restoring L0s and then applying L1s and redo. Just ask to recover from a virtual full, and it will be sent along with the redo needed to get to that point. This makes the automated restore and recovery process much faster than with traditional backups.
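To make the virtual full idea concrete, here is a toy model in Python. It assumes a backup is just a mapping of block number to block image and that each L1 carries only the blocks that changed; the helper `synthesize_virtual_full` and the sample blocks are hypothetical. This is not how the Recovery Appliance is implemented internally, only an illustration of why a restore can start from one point-in-time image instead of an L0 plus every L1.

```python
from typing import Dict, List

# Toy model: a backup is a mapping of block number -> block image.
Backup = Dict[int, bytes]


def synthesize_virtual_full(level0: Backup, incrementals: List[Backup]) -> Backup:
    """Overlay each incremental's changed blocks onto the level 0, oldest first.

    Hypothetical helper for illustration; not the Recovery Appliance's code.
    """
    virtual_full = dict(level0)           # copy, so the stored level 0 is untouched
    for incremental in incrementals:      # apply level 1 backups in time order
        virtual_full.update(incremental)  # newer block images win
    return virtual_full


if __name__ == "__main__":
    l0 = {1: b"A0", 2: b"B0", 3: b"C0"}
    l1_day1 = {2: b"B1"}
    l1_day2 = {2: b"B2", 3: b"C2"}
    print(synthesize_virtual_full(l0, [l1_day1, l1_day2]))
    # {1: b'A0', 2: b'B2', 3: b'C2'}
```

In this model the overlay happens ahead of time, so a restore reads a single image rather than replaying the L0 and each L1 on the database host at restore time.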

This, coupled with automatic block checking and built-in validation, makes the RA a product I'm personally proud to work with, and it truly puts my database recovery on steroids.

REDO_TRANSPORT_USER and Recovery Appliance (ZDLRA)

“REDO_TRANSPORT_USER” is an Oracle Database parameter, introduced in release 11.1, that allows redo to be transported from a primary to a standby by a user designated for log transport. The default configuration assumes the user “SYS” is performing the transport.

This distinction is very important because the user “SYS” exists in every Oracle database, and as such most Data Guard environments created with default settings use “SYS” for log transport services.

The Zero Data Loss Recovery Appliance (ZDLRA) adds an interesting twist to this configuration. In order for Real-Time Redo to work on a ZDLRA, “REDO_TRANSPORT_USER” needs to be set to the Virtual Private Catalog (VPC) user of the ZDLRA. For databases that are not participating in a Data Guard configuration, this is not an issue, and the user does not need to be created on the protected database (i.e. the database being backed up to the ZDLRA). The important distinction comes into play if you already have a standby configured to receive redo: that process will break, since we have switched “REDO_TRANSPORT_USER” to a user that doesn't exist on the protected database. To avoid this issue when you already have Data Guard, you will need to create the VPC user in the primary database and grant it “CREATE SESSION” and “SYSOPER”, with an optional “SYSDG” (12c).

An example configuration is detailed below.

SQL> select * from v$pwfile_users;

USERNAME   SYSDB SYSOP SYSAS SYSBA SYSDG SYSKM CON_ID
SYS        TRUE  TRUE  FALSE FALSE FALSE FALSE      0
SYSDG      FALSE FALSE FALSE FALSE TRUE  FALSE      0
SYSBACKUP  FALSE FALSE FALSE TRUE  FALSE FALSE      0
SYSKM      FALSE FALSE FALSE FALSE FALSE TRUE       0

SQL> create user ravpc1 identified by ratest;

User created.

SQL> grant sysoper,create session to ravpc1;

Grant succeeded.

SQL> select * from v$pwfile_users;

SQL> spool off

Once you have ensured that the password file has the entries, copy the password file to the standby node(s), and then reset the destination on the primary that points to the standby by deferring and then re-enabling its destination state:

SQL> alter system set log_archive_dest_state_X=defer scope=both sid='*';

SQL> alter system set log_archive_dest_state_X=enable scope=both sid='*';

This will ensure that you have redo transport working to both the Data Guard standby and the ZDLRA.
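The failure mode described above can be summarized with a small Python model. It assumes each destination accepts redo only if it can authenticate the configured transport user, with the standby relying on its copy of the password file; the `Destination` class and `shipping_status` function are hypothetical, and nothing here talks to a real database.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Destination:
    """A redo destination and the users it can authenticate (toy model)."""
    name: str
    known_users: Set[str] = field(default_factory=set)


def shipping_status(redo_transport_user: str,
                    destinations: Dict[str, Destination]) -> Dict[str, bool]:
    """Map each destination name to whether the transport user authenticates."""
    return {name: redo_transport_user in dest.known_users
            for name, dest in destinations.items()}


if __name__ == "__main__":
    primary_pwfile = {"SYS", "SYSDG", "SYSBACKUP", "SYSKM"}
    dests = {
        # The standby authenticates against its copy of the password file.
        "standby": Destination("standby", set(primary_pwfile)),
        # The VPC user is defined on the Recovery Appliance itself.
        "zdlra": Destination("zdlra", {"RAVPC1"}),
    }

    # REDO_TRANSPORT_USER switched to the VPC user: the standby breaks.
    print(shipping_status("RAVPC1", dests))  # {'standby': False, 'zdlra': True}

    # CREATE USER + GRANT SYSOPER put RAVPC1 in the primary's password file,
    # which is then copied to the standby.
    primary_pwfile.add("RAVPC1")
    dests["standby"].known_users = set(primary_pwfile)
    print(shipping_status("RAVPC1", dests))  # {'standby': True, 'zdlra': True}
```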

Data Guard Standby Database log shipping failing reporting ORA-01031 and Error 1017 when using Redo Transport User (Doc ID 1542132.1)

MAA White Paper - Deploying a Recovery Appliance in a Data Guard environment

REDO_TRANSPORT_USER Reference

Redo Transport Services

Real-Time Redo for Recovery Appliance

Links for 2016-04-29 [del.icio.us]

Enterprise Manager 13c And Database Backup Cloud Service

The Oracle Database Backup Cloud Service allows for backup of an Oracle Database to the Oracle Cloud using RMAN. Enterprise Manager 13c provides a very easy way to configure the Oracle Database Backup Cloud Service. This post will walk you through setting up the Oracle Database Backup Cloud Service as well as running backups from EM.

There is a new menu item in the Backup & Recovery drop-down to configure the Database Backup Cloud Service (DBCS).

This page lets you set up the Database Backup Cloud Service. If nothing has been configured before, you will see the screen shown in the screenshot.

Once you click Configure Database Backup Cloud Service, you will be asked for the service (storage) and the identity domain that you want the backups to go to. This identity domain comes as part of DBCS, or as part of DBaaS, which can be purchased from Oracle Cloud.

Once the settings are saved, a popup will confirm that they have been saved.

After saving the settings, submit the configuration job. This will download the Oracle Backup Module to the hosts and configure the media management settings. The job will provide details and confirm when all configuration is complete, and it will configure all nodes of a RAC, which can save a lot of time.

We have now completed the setup and can validate it by looking at the Configure Cloud Backup Setup page, which also has an option to test a cloud backup.

Let's also check Backup Settings to make sure the settings are there. The Media Management settings show the location of the library, environment and wallet. The Database Backup Cloud Service requires that all backups sent to it are encrypted.

You can also validate this by connecting to RMAN on the command line and running "SHOW ALL".

As you can see, we have confirmed that the media management setup is complete, and we have run a job to download the Cloud Backup Module and configure it.

Now, as a final step, we will configure a backup and run an RMAN backup to the Cloud. From the Backup & Recovery menu, schedule a backup. Fill out the pertinent settings and make sure you encrypt via a password, a wallet, or both. The backup that I scheduled was encrypted using a password.

On the second page, select the destination (the Cloud, in our case) and schedule it.

Validate that the settings are right and execute the job. You can monitor the job by clicking View Job. The new job interface in EM 13c is really nice: it shows a graphical representation of execution time and a log of what is happening, side by side.

Once the backup has completed, you can see it not only through EM but also using RMAN on the command line.

There are a couple of things that I didn't show during the process: parallelism during backups is important, as is compression.

Enterprise Manager 13c makes the already simple process of setting up backups to the Database Backup Cloud Service even easier.

Zero Data Loss Recovery Appliance - Basics

Oracle released the Zero Data Loss Recovery Appliance in 2014. The Recovery Appliance was designed to ensure efficient and consistent Oracle Database backups with a key focus on recovery.

I am going to write a series of blog posts, starting with this one, to discuss the fundamental architecture of the Recovery Appliance as well as the business case and the deployment and operational strategies around it.

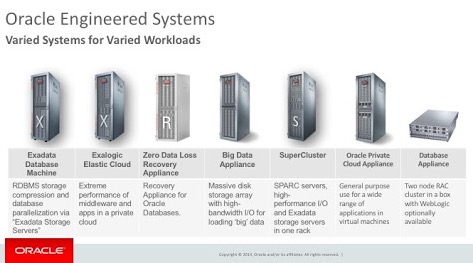

So let's start with why an appliance. Oracle has had a very interesting strategy, starting from well before the Sun acquisition. Exadata was a prime example of a Database Machine optimized for database workloads. The Engineered Systems family has since grown to include everything from the smaller Oracle Database Appliance to the newest member of the family, the Zero Data Loss Recovery Appliance.

Now let's start with the basics. The Recovery Appliance, as the name suggests, is an appliance built to solve the data protection gaps that most customers face when trying to protect their critical data, which most often resides in the Oracle Database. So why a Recovery Appliance, and why now? Over the years data storage has continued to grow, and so has the amount of data stored in databases: where once a couple of GBs of data was a big deal, today organizations are dealing with petabytes of database storage. Database backups are getting harder and harder to manage, and modern backup appliances focus on getting more out of the storage rather than on ensuring recoverability, and they lack a good enough method to ensure that backups are valid. The Recovery Appliance is designed to solve these challenges and give customers an autopilot for their backups.

The name Recovery Appliance suggests how much emphasis was put on ensuring recoverability of the database, and hence controls were put in place to ensure everything is validated, not just once but on a regular basis, with extensive reporting made available. Backups are a very important part of every enterprise, and the Recovery Appliance brings the ability to perform an incremental forever backup strategy. The incremental forever strategy, as the name suggests, consists of one full (Level 0) backup followed by subsequent incremental (Level 1) backups. This, in conjunction with Protection Policies that ensure a recovery window is maintained, provides the autopilot that ensures backups are successful with very little overhead on the machine taking the backup. This is done by offloading the de-duplication and compression activities to the Recovery Appliance.
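The recovery window side of a protection policy can be illustrated with a short Python function. This is a sketch of the generic recovery-window rule (keep the newest restorable point at or before the start of the window, plus everything after it), not the Recovery Appliance's actual retention logic; `backups_to_keep` and the daily dates are hypothetical, and redo/archived logs needed for point-in-time recovery are left out.

```python
from datetime import datetime, timedelta
from typing import List


def backups_to_keep(backup_times: List[datetime], now: datetime,
                    recovery_window: timedelta) -> List[datetime]:
    """Return the backups needed to recover to any point inside the window.

    Assumes every entry is a restorable point (for example a virtual full).
    The oldest one kept is the newest backup at or before the window start;
    if none is that old, everything is kept.
    """
    window_start = now - recovery_window
    ordered = sorted(backup_times)
    anchor = 0  # index of the newest backup taken at or before window_start
    for i, taken in enumerate(ordered):
        if taken <= window_start:
            anchor = i
    return ordered[anchor:]


if __name__ == "__main__":
    daily = [datetime(2017, 1, day) for day in range(1, 11)]
    keep = backups_to_keep(daily, datetime(2017, 1, 10, 12), timedelta(days=3))
    print([t.day for t in keep])  # [7, 8, 9, 10]
```

Everything older than the anchor can be purged without shrinking the window, which is the kind of decision the post describes the appliance making on autopilot.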

So far I've used terms like protection policies, de-duplication, compression, etc. While these terms are common in the backup space, too often people have a hard time making the connection. So let's start with a brief definition of each term.

Full Backups

When a complete backup of the database is taken, this is called a full backup. In a traditional environment, this can be done daily or weekly, depending on the backup strategy. Traditional backup appliances rely on these fulls to provide de-duplication capabilities. Full backups require a lot of overhead, since all blocks have to be read from the I/O subsystem and processed by the database host.

Incremental Backups

Incremental backups, as the name suggests, back up only the data blocks that have changed since a previous backup. The Oracle Database Backup and Recovery User's Guide is the best place to understand incremental backups and how they can be employed as part of a backup strategy.
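The difference between a full and an incremental backup comes down to which blocks get copied. An RMAN incremental backup copies the blocks whose SCN is newer than the checkpoint SCN of its parent backup. The Python sketch below models only that selection rule, with a datafile represented as a list of (block number, SCN) pairs; `full_backup`, `incremental_backup` and the SCN values are hypothetical.

```python
from typing import List, Tuple

# Toy datafile: (block number, SCN of the last change to that block).
Block = Tuple[int, int]


def full_backup(datafile: List[Block]) -> List[int]:
    """A full (or level 0) backup copies every block in this model."""
    return [block_no for block_no, _ in datafile]


def incremental_backup(datafile: List[Block], parent_checkpoint_scn: int) -> List[int]:
    """A level 1 copies only blocks changed after the parent's checkpoint SCN.

    Without block change tracking, RMAN still has to read every block to make
    this check; the model only shows which blocks end up in the backup.
    """
    return [block_no for block_no, scn in datafile if scn > parent_checkpoint_scn]


if __name__ == "__main__":
    datafile = [(1, 100), (2, 250), (3, 120), (4, 300)]
    print(full_backup(datafile))              # [1, 2, 3, 4]
    print(incremental_backup(datafile, 200))  # [2, 4]
```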

De-duplication

De-duplication is a technique to eliminate duplicate copies of repeating data. This technique is typically employed with flat files or text-based data, since repeating patterns are far more likely to be found there. Incremental backups are a poor source for de-dup, since not much of their data repeats, and the unique structure of the Oracle block makes it hard to get a lot of de-duplication.
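A minimal Python sketch of hash-based de-duplication shows the point: identical chunks are stored once, so repeated full backups of the same data de-dup well, while a series of incrementals, each holding different changed blocks, does not. Fixed 8-byte chunks and SHA-256 fingerprints are assumptions chosen for brevity; real de-duplication products use their own chunking schemes.

```python
import hashlib
from typing import List, Set


def dedup_store(streams: List[bytes], chunk_size: int = 8) -> Set[str]:
    """Return the unique chunk fingerprints needed to store all streams."""
    store: Set[str] = set()
    for stream in streams:
        for i in range(0, len(stream), chunk_size):
            store.add(hashlib.sha256(stream[i:i + chunk_size]).hexdigest())
    return store


def dedup_ratio(streams: List[bytes], chunk_size: int = 8) -> float:
    """Logical chunks written divided by unique chunks actually stored."""
    logical = sum((len(s) + chunk_size - 1) // chunk_size for s in streams)
    return logical / len(dedup_store(streams, chunk_size))


if __name__ == "__main__":
    full = bytes(range(64))  # a 64-byte "database": 8 distinct chunks
    fulls = [full] * 7       # seven nightly fulls of unchanged data
    # Seven incrementals, each holding a different changed 8-byte block.
    incrementals = [bytes([day]) * 8 for day in range(7)]
    print(dedup_ratio(fulls))         # 7.0
    print(dedup_ratio(incrementals))  # 1.0
```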

Compression

Compression is the act of shrinking data, and Oracle provides various methods of compressing data, both within the database and within the RMAN backup process itself.
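To show what compression buys, here is a Python sketch using the standard library's `zlib`. It only illustrates the general idea; RMAN's own compression algorithms are configured in RMAN, not through code like this, and the sample payloads are hypothetical. Repetitive data shrinks dramatically, while data that is already dense (random or encrypted bytes) does not compress at all.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Original size divided by compressed size (higher means better)."""
    return len(data) / len(zlib.compress(data, level))


if __name__ == "__main__":
    repetitive = b"ORDER_STATUS=SHIPPED;" * 1000  # highly repetitive row data
    random_like = os.urandom(21000)               # dense data, e.g. encrypted
    print(f"repetitive: {compression_ratio(repetitive):.1f}x")
    print(f"random:     {compression_ratio(random_like):.2f}x")
```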

In Part 2 of this blog post I will talk about terms like protection policies and the incremental forever strategy, as well as discuss the architecture of the Recovery Appliance.