Scott Spendolini

This is the end...

...of my blog at this URL.

Please follow me over on spendolini.blog for a fresh, updated continuation of this blog.

Engage!

Don't Call it a Comeback

Logging APEX Report Downloads

I knew that any Javascript-based solution would fall short of their security requirements, since it is trivial to reconstruct the URL pattern required to initiate a download, even if the Javascript had removed the option from the menu. Thus, I had to consider a PL/SQL-based approach - one that could not be bypassed by a malicious end user.

To solve this problem, I turned to APEX’s Initialization PL/SQL Code parameter. Any PL/SQL code entered in this region will be executed before any other APEX-related process. Thus, it is literally the first place that a developer can interact with an APEX page - be it a full page view or Ajax-based process.

Both IRs and Classic Reports leave enough data in the REQUEST parameter of the URL in the logs to decode which report was downloaded and what format was selected. However, if you don’t know what the specific URL patterns look like, or don’t have the Request column selected, you’d never know it. For my solution, I chose to incorporate all three types of reports - Classic, IG and IR - which also centralized it into a single place.

The solution is relatively simple, and requires two components: a table to track the downloads and a procedure to populate the table. It should also work with both Oracle 11g & 12c. I have tested it on APEX 5.1.4. The IG portion will not work on APEX 5.0, since there is no IG component in that release.

First, create the table to store the logs:

CREATE TABLE dl_audit_log

(

app_user VARCHAR2(255),

app_id NUMBER,

app_page_id NUMBER,

request VARCHAR2(255),

downloaded_on DATE,

report_id NUMBER,

report_name VARCHAR2(255),

report_type VARCHAR2(255),

report_format VARCHAR2(255)

)

/ Next, create the procedure that will capture any download.

CREATE OR REPLACE PROCEDURE dl_audit

(

p_request IN VARCHAR2 DEFAULT v('REQUEST'),

p_app_user IN VARCHAR2 DEFAULT v('APP_USER'),

p_app_page_id IN NUMBER DEFAULT v('APP_PAGE_ID'),

p_app_id IN NUMBER DEFAULT v('APP_ID'),

p_app_session IN NUMBER DEFAULT v('APP_SESSION')

)

AS

l_count NUMBER;

l_id NUMBER;

l_report_name VARCHAR2(255);

l_report_format VARCHAR2(255);

l_json VARCHAR2(10000);

BEGIN

-------------------------------------------------------------------------------------------------------------------------------

-- Capture Classic Report

-- Region ID will be embedded in the request

--------------------------------------------------------------------------------------------------------------------------------

CASE

WHEN p_request LIKE 'FLOW_EXCEL_OUTPUT%' THEN

-- Uncomment the two lines below to prevent the downloaded

--htp.prn('Download Prohibited');

--apex_application.g_unrecoverable_error := TRUE;

-- Get the ID

SELECT SUBSTR(p_request, 20, INSTR(p_request,'_',20)-20) INTO l_id FROM dual;

SELECT region_name INTO l_report_name FROM apex_application_page_regions WHERE region_id = l_id;

-- Log the download

INSERT INTO dl_audit_log (app_user, app_id, app_page_id, request, downloaded_on, report_id, report_name, report_type, report_format

VALUES (p_app_user, p_app_id, p_app_page_id, p_request, SYSDATE, l_id, l_report_name, 'CLASSIC', 'CSV');

-------------------------------------------------------------------------------------------------------------------------------

-- Capture IR download

-- Region ID embedded in request only when there is more than 1 IR on the page

-------------------------------------------------------------------------------------------------------------------------------

WHEN p_request LIKE '%CSV' OR p_request LIKE '%HTMLD' OR p_request LIKE '%PDF' THEN

-- Uncomment the two lines below to prevent the downloaded

--htp.prn('Download Prohibited');

--apex_application.g_unrecoverable_error := TRUE;

-- Determine how many IRs are on the page

SELECT COUNT(*) INTO l_count FROM apex_application_page_ir where page_id = p_app_page_id AND application_id = p_app_id;

-- If there is 1, then get the ID from the view

IF l_count = 1 THEN

SELECT interactive_report_id, region_name INTO l_id, l_report_name

FROM apex_application_page_ir where page_id = p_app_page_id AND application_id = p_app_id; ELSE

-- Otherwise, get the ID from the REQUEST

SELECT SUBSTR(p_request,5, INSTR(p_request,']')-5) INTO l_id FROM dual;

SELECT region_name INTO l_report_name FROM apex_application_page_ir where region_id = TRIM(l_id);

END IF;

-- Log the download

INSERT INTO dl_audit_log (app_user, app_id, app_page_id, request, downloaded_on, report_id, report_name, report_type, report_format

VALUES (p_app_user, p_app_id, p_app_page_id, p_request, SYSDATE, l_id, l_report_name, 'IR'

CASE WHEN p_request LIKE '%CSV' THEN 'CSV' WHEN p_request LIKE '%HTMLD' THEN 'HTML' WHEN p_request LIKE '%PDF' THEN 'PDF' ELSE 'OTHER' END);

-------------------------------------------------------------------------------------------------------------------------------

-- Capture IG download

--------------------------------------------------------------------------------------------------------------------------------

WHEN LOWER(owa_util.get_cgi_env('QUERY_STRING')) LIKE 'p_flow_id=' || p_app_id || '&p_flow_step_id=

|| p_app_page_id || '&p_instance=' || p_app_session || '%&p_json%download%' THEN

-- Uncomment the two lines below to prevent the downloaded

--htp.prn('Download Prohibited');

--apex_application.g_unrecoverable_error := TRUE;

-- Extract the JSON

SELECT utl_url.unescape(substr(owa_util.get_cgi_env('QUERY_STRING'),

INSTR(owa_util.get_cgi_env('QUERY_STRING'), 'p_json=') + 7)) INTO l_json FROM dual;

apex_json.parse(l_json);

-- Get the report ID

l_id := apex_json.get_varchar2(p_path => 'regions[%d].id', p0 => 1);

l_report_format := apex_json.get_varchar2(p_path => 'regions[%d].download.format', p0 => 1);

-- Lookup the name

SELECT region_name INTO l_report_name FROM apex_application_page_regions where region_id = l_id;

-- Log the download

INSERT INTO dl_audit_log (app_user, app_id, app_page_id, request, downloaded_on, report_id, report_name, report_type, report_format

VALUES (p_app_user, p_app_id, p_app_page_id, p_request, SYSDATE, l_id, l_report_name, 'GRID', l_report_format);

-- No auditing needed, as the user did not download a report

ELSE NULL;

END CASE;

END;

/ Lastly, add a reference to the procedure to the Initialization PL/SQL Code. This can be found under your Shared Components > Security.

Once these three steps are completed, then any download of any report will be logged automatically. There’s no need to adjust any specific report or add any parameters - it will just work as reports are downloaded.

Also, uncommenting the referenced lines in each section will also prevent that kind of report from being downloaded entirely. The message could be changed as needed or even re-directed to an error page instead.

Thanks, ODC (Oracle Developer Community)!

In any case, what am I thankful for? A lot. To start, the tools that I use day in and day out: SQL Developer, ORDS, Oracle Data Modeler, SQLcl and - of course - APEX. Without these tools, I'm likely on a completely different career path, perhaps even one that aligns more closely with my degree in television management.

While the tools are great, it's really the people that make up the community that make ODC stand out. From the folks who run ODC and the Oracle ACE program to the developers and product managers who are behind the awesome tools, the ODC community is one of, if not the greatest asset of being involved with Oracle's products.

If you have yet to get more involved with this community, and are wondering how you can, well, there's no better time that on ODC appreciation day! Here's some basic and simple things that you can do to become more involved:

- Read and reply to posts on the ODC forums. You'd be surprised how far a simple reply can go to help others.

- Attend local user group conferences. Consider not only presenting at them as well, but volunteering your time to help with the organization.

- Attend and/or create a local MeetUp that focuses on the tools that you use. It can be as general or as specific as you'd like it to be.

- Get a Twitter account and follow the ODC community members. Not sure where to start? Try this list of "Oracle Peeps" from Jeff Smith: https://twitter.com/thatjeffsmith/lists/oraclepeeps

- Encourage your co-workers to do the same!

Spaced Out

While that's all well and good, sometimes you only want to change the font for a report, not the entire page. One of the applications that I'm building contains a number of IRs based mostly on log data. Thus, having that data in a monospaced font would make it a whole lot easier to read.

You can search Google Fonts for monospaced fonts by selecting only that option on the right-side menubar. You can also opt for the standard yet kinda boring Courier and achieve the same thing.

To implement this in your application, follow the steps in my other post, but stop shy of the final step. Instead of pasting in the text that I specify, paste in the following to the Custom CSS field in Theme Roller, using the name of the font you selected for the font-family:

.a-IRR-table tr td { font-family: Ubuntu Mono; font-size: 14px; }This will only apply the monospaced font to IR data. Save your Theme Roller changes, and now, all of your IR data should be in a monospaced font. If you only need this on a specific page, then instead of pasting the code to Theme Roller, simply paste it into the specific page or pages Custom CSS region.

Whose Deck is it Anyways?

Here's what we're going to do: the will be four 5-minute presentations - one on each of the following: BI, EPM, Database & APEX.

Sound interesting? Probably not. We get that, too. So here's what we did.

Each 5-minute session will be presented by a non-expert. For example, it's highly likely that I'll be presenting on BI or EPM.

To make it even better, each slide deck will be prepared by the corresponding expert. So again, it's highly likely that my slide deck's creator will be either Stewart Bryson or Edward Roske. If nothing else, this session will be a crash course in how not to make cohesive, easy to read slides.

Interested now? Ya, I thought so. Here's some more details on the KScope site.

#fakecode

So what is fake code? That, I can at least try to explain in a bit more detail.

The other day, I saw this image posted on Kris Rice's twitter feed:

I thought it was a joke, but it's actually a real book. That made me laugh. Then cry. Then I read the book, mainly since it's only 4 pages. Believe it or not, there's actually some really good content packed in there. Let me summarize:

If you choose to copy code from Stack Overflow, the OTN forum, or anywhere, really, there's a few things to keep in mind:

- Who owns the code. It's more than likely perfectly legal to copy and use the code you find on these sites "as is", but keep in mind there may be exceptions to this rule. The first page in the booklet offers some insight as to what they are.

- Who gets credit for the code. While not required, it's a good idea to credit the author of the snippet that you used. This is not just courteous, but also provides a reference back to the source of the code, so when it breaks, you know where to start looking. Which brings me to the third and most important thing to consider:

- How good is the code. Unfortunately, there is no easy way to verify this. You can look at the number of up votes for a specific response, but even that can be easily faked - or more likely, just be wrong because it is based on an older version.

The first two issues don't concern me all that much, as the first is rarely an issue and the second is simple to solve. The third however, does concern me a lot. FAKE code - where FAKE stands for Found Another Killer Example (yes, I made that up) - is fast becoming the way that we develop solutions. Simply enter in some string of what you're trying to do into Google, and a set of possible solutions will magically appear on the page.

With very little effort, you can copy & paste that snippet into your application, run it, and if it works, then you're job is done and you're a hero. If it doesn't, some more searching and some more tinkering is in order. Maybe it's a 15 minute task instead of a 5 minute one. But that doesn't matter, as what you were asked to do is done, and you can move on to the next problem.

There's definitely some problems with this approach. If you don't understand what the code is doing, who is going to fix it when it breaks? And it will break at some point. As other libraries or components get upgraded and/or browser versions change, code will eventually break - usually at the worst possible time.

If you get lucky, and it doesn't break, then who is going to change how it works when the users come up with new requirements? If you don't have a full grasp as to what it does or how it does it, then you'll have little success in changing how it works.

And just because there's a solution, does it mean that is the best, most secure, most performant solution? It may work fine for a single user on a development environment, but what happens when its implemented in the real world? This is especially a concern when you're implementing something in a language that you're not as proficient in, as you won't be able to readily spot bad practices.

In no way am I saying that any of these sites are bad, nor saying don't use them. I use them all of the time to find solutions to problems and contribute solutions, as do many others. They are all a valuable resource that makes our jobs a lot easier to do.

What I do want to emphasize is that when you do use any site that presents a solution or code snippet, be sure to not only vet the solution, but also ensure that you completely understand how it works, and be ready to fix it if it breaks. If you can't do either of these things easily, then perhaps its best to find an alternate solution to your problem - one that you can understand and maintain

FAKE code is a real danger to any development project. Sprinkle enough of it around, and you're essentially creating a ticking time bomb, that's just waiting to explode. But FAKE code can be spotted and stopped fairly easily: Document the source of any snippet or blog you use. Take the time to learn what it does line-by-line, and document that, too. Be ready to support it if in the case it breaks. And if you're not comfortable doing so, have alternative resources lined up or at least identified.

Together, with a little bit of work, we can stop the FAKEcode epidemic.

Iceland, Iceland, Baby

Alright, stop! Collaborate and listen! Ok, I’ll be the one to take my own advice here and stop...

Later this month, I’ll be heading to Reykjavík, Iceland to deliver our 3-day training class “Developing Desktop APEX Applications”. This class will be open to the public and costs about $2500 per student, so anyone is welcome to sign up. You’ll have to make your way to Iceland, of course.

![]()

Here’s a brief overview of what we’re going to cover:

This 3-day course is an introduction to developing web applications using Oracle Application Express, or simply APEX. The course starts out with an overview of data model of the application that student will build. It then transitions to the SQL Workshop portion of APEX, where basic database object management concepts are addressed.

The bulk of the remainder of the class focuses on building an APEX application, starting with the core components that make up the foundation of the application. Students will then build several forms and reports, which allow user interaction with the data. Next, additional types of forms and reports will be introduced, as well as more advanced techniques used when managing them. The course will conclude with a review of the basic security attributes of an application as well as how to prepare and deploy it to a production environment.

The course will run from March 28th through March 30th, and will be held in a location TBD in Reykjavík. More details, as well as the course outline and a link to register can be found on Miracle’s site here: http://miracle.is/en/building-apex-applications/

Taste of KScope 2017 Webinars

This Thursday, I’ll be participating in the Taste of KScope 2017 webinar series by presenting GET POST ORDS JSON: Web Services for APEX Decoded. The webinar will begin at noon EDT on Thursday, March 16th. The webinar is completely free, and you don’t need to be an ODTUG member to attend.

Here’s a summary of the abstract:

Web Services in the APEX world are becoming more and more popular. However, there is still a lot of confusion as to what they are and how they could benefit the APEX developer. After a review of the syntax and jargon associated with web services, this session will review and boil down web services to their basic components. It will then demonstrate how APEX developers can start to use these powerful components - both to send and receive data from other sites.

Not only will I be presenting this session at KScope later this year, but I’ve also done it a few times already, so most of the kinks are (hopefully) worked out.

You can register for the webinar here: https://attendee.gotowebinar.com/register/2300788935263147265

Low

Recently, there has been a lot of buzz about "low code" development platforms. Even the Oracle APEX team has embraced this term (see https://apex.oracle.com/lowcode/ for details). This approach allows the "citizen developer" - someone without a traditional IT background - to build basic applications with little to no code. Platforms such as QuickBase, Appian, Mendix and even SalesForce.com have popup up, offering the promise to quickly build applications with little to no code. Users from all walks of life can now build and deploy applications to some sort of cloud in just minutes!

But is it possible to build a truly useful application with little to no code? Perhaps. Perhaps not. I suppose that all depends on what you want the application to do and what data it will use. It probably also depends on the security of the application, and how easy it will be to integrate into a corporate identity management system behind a firewall. It also probably depends on what type of availability and reliability you need. And it will definitely depend on how much any of these solutions cost, especially if your application gets popular and more users need to use it. While some of these companies are solid and not going anywhere soon, a few of these names are new to me, and if they were to fold, it would not be the first time a startup failed.

While I have not tried any of the products that I mentioned, I do have a bit of experience with Oracle APEX, so I'll speak from that angle. APEX does fit into the "low code" profile, as you can easily build an application that manages data, provides visualizations, and even facilitates some basic workflows. You can do all of this without writing any code, save for maybe a query or two. There’s even an “App Store” of sorts - called Packaged Applications - that can get you create a fully functional point-solution application with a single click. I’ve seen people from skilled developers with multiple IT-related degrees to vice presidents with limited IT experience build and deploy APEX applications. The "citizen developer" term truly fits in here.

However, there is a limit as to what you can make any application do without writing code. Over the years, APEX has done a great job of pushing this limit out further and further. You can create a much better looking, more secure, more functional APEX application with APEX 5.1 in much less time than you could with versions as recent as APEX 4.0. But even with the latest and greatest release, if you want to add some business rules or put basic conditions on a region, you’re likely going to have to use a little bit of code.

Think about this: if you had a couple of citizen developers build a basic project management tool in APEX, and then as it becomes more popular, more people in the organization start to use it. Thus, the citizen developers enhance and modify it to support multiple teams, multiple users and roles, etc. All along, there is no oversight from IT about how the application is enhanced and managed. No standards are followed, as it’s totally up to the citizen developers to choose how it looks and works. It’s fairly likely that at some point, bad decisions were made. Perhaps a few security vulnerabilities were introduced, or a couple of poorly-written SQL statements were created. It’s not all that different from the MS Access mess, but at least this one is backed up...

Low code is fine when it’s just that: low code. As soon as applications cross some threshold and become a critical component of an enterprise, it’s time to stop calling them low code and transition their ownership to the professionals. With most of the online tools, I don’t believe that this is an option, or at least not a simple one. If you exceed the capabilities of the platform which you’re building on, it’s going to be a good amount of work to migrate to another one.

This is where APEX differs. While APEX does seem to fit the low code moniker, it’s so much more capable than that. Calling APEX low code makes me think of other “low” branded things: low fat, low carb, low T, etc. It’s perfectly possible to start citizen developers in APEX, and show them how to build basic applications that meet some of their less critical business needs. Some applications will remain somewhat simple, and that’s just fine. But for the ones that don’t - it doesn’t take much at all to transition ownership of that application to IT, or perhaps just monitor and manage it a bit while still allowing the citizen developer to own it.

Those who have used APEX for years know this: it’s one of the few development platforms that you can become productive with in just a few days, but it will take your entire career to master all it can do. It’s an ideal platform that meets the criteria of low code. But it’s so much more - offering one of the most seamless transition paths from low code to enterprise applications.

jQuery, Security and Web Services - Oh My!

It's going to be a hectic couple of weeks for me, as I get ready to head to Utah this weekend for the annual UTOUG Training Days conference next week. I love Salt Lake City, and the UTOUG conference is just the right size - not too large, but large enough that most of the rooms are full of attendees.

NATCAP OUG Reboot

My "Must See" ADF/MAF Sessions at KScope 16

In any case, the following sessions in the ADF/MAF track are worth checking out at Kscope 16 this year:

How to Use Oracle ALTA UI to Create a Smashing UI for Web and Mobile

Luc Bors, eProseed NL

When: Jun 28, 2016, Session 12, 4:45 pm - 5:45 pm

I've always liked UI, and Oracle ALTA is a new set of templates that we'll be seeing quite a bit of across a number of new technologies.

Three's Company: Going Mobile with Oracle APEX, Oracle MAF, and Oracle MCS

Frederic Desbiens , Oracle Corporation

When: Jun 27, 2016, Session 6, 4:30 pm - 5:30 pm

I'll admit - anytime there's a comparison of APEX and other similar technologies, it's always interesting to witness the discussion. If nothing else, there will be a good healthy debate as a result of this session!

Introduction to Oracle JET: JavaScript Extension Toolkit

Shay Shmeltzer, Oracle Corporation

When: Jun 28, 2016, Session 7, 8:30 am - 9:30 am

Oracle JET is a lot more than just charts, and there's a lot of momentum behind this technology. I'm very interested to learn more and perhaps even see a thing or two that you can do with it, as well as the various integration points that are possible with other technologies.

Build a Mobile App in 60 Minutes with MAF

John King , King Training Resources

When: Jun 27, 2016, Session 5, 3:15 pm - 4:15 pm

Native mobile applications are something that APEX doesn't do, so it would be nice to see how this would be possible, should the need ever arise.

- APEX sessions not to be missed, as recommended by EPM expert Cameron Lackpour: http://camerons-blog-for-essbase-hackers.blogspot.com/2016/05/kscope16-apex-sessions-im-interested-in.html

- BI and Data Warehousing sessions not to be missed, as recommended by EPM expert Dayalan Punniyamoorthy: http://onlyhyperion.blogspot.com/2016/05/Kscope-6-blog-hop-BI-Data-Warehousing-sessions-not-to-miss.html

- Big Data and Advanced Analytics sessions not to be missed, as recommended by Data Warehouse expert Kent Graziano: http://wp.me/p25i8c-Rz

- Database sessions not be missed, as recommended by BI expert Stewart Bryson: https://medium.com/@stewartbryson/what-you-positively-have-to-see-in-the-database-track-at-kscope16-e98615851202#.g74pqn1xp

- EPM sessions not to be missed, as recommended by Database expert Danny Bryant: http://dbaontap.com/2016/05/26/kscope16-blog-hop-epm-sessions-not-miss

Stinkin' Badges

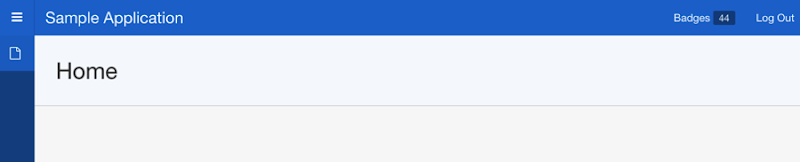

Another use for the Navigation Bar is to present simple metrics via badges. You've seen the before: the little red numbered icons that hover in the upper-right corner of an iPhone or Mac application, indicating that there's something that needs attention. Whether you consider them annoying or helpful, truth be told, they are a simple, minimalistic way to convey that something needs attention.

Fortunately, adding a badge to a Navigation Bar entry in the Universal Theme in APEX 5 is tremendously simple. In fact, it's almost too simple! Here's what you need to do:

First, navigate to the Shared Components of your application and select Navigation Bar List. From there, click Desktop Navigation Bar. There will likely only be one entry there: Log Out.

Click Create List Entry to get started. Give the new entry a List Entry Label and make sure that the sequence number is lower than the Log Out link. This will ensure that your badged item displays to the left of the Log Out link. Optionally add a Target page. Ideally, this will be a modal page that will pop open from any page. This page can show the summary of whatever the badge is conveying. Next, scroll down to the User Defined Attributes section. Enter the value that you want the badge to display in the first (1.) field. Ideally, you should use an Application or Page Item here with this notation: &ITEM_NAME. But for simplicity's sake, it's OK to enter a value outright.

Run your application, and have a look:

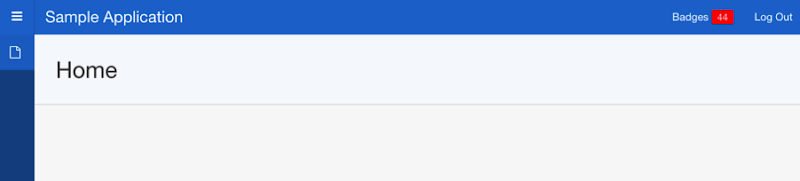

Not bad for almost no work. But we can make it a little better. You can control the color of the badge with a single line of CSS, which can easily be dropped in the CSS section of Theme Roller. Since most badges are red, let's make ours red as well. Run your application and Open Theme Roller and scroll to the bottom of the options. Expand the Custom CSS region and enter the following text:

.t-Button--navBar .t-Button-badge { background-color: red;}Save your customizations, and note that the badge should now be red:

Repeat for each metric that you want to display in your Navigation Bar.

Formatting a Download Link

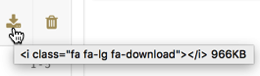

In the interest of saving screen real estate, I wanted to represent the download link in an IR with an icon - specifically fa-download. This is a simple task to achieve - edit the column and set the Download Text to this:

<i class="fa fa-lg fa-download"></i>The fa-lg will make the icon a bit larger, and is not required. Now, instead of a "download" link, you'll see the icon rendered in each row. Clicking on the icon will download the corresponding file. However, when you hover over the icon, instead of getting the standard text, it displays this:

Clearly not optimal, and very uninformative. Let's fix this with a quick Dynamic Action. I placed mine on the global page, as this application has several places where it can download files. You can do the same or simply put on the page that needs it.

The Dynamic Action will fire on Page Load, and has one true action - a small JavaScript snippet:

$(".fa-download").prop('title','Download File');This will find any instance of fa-download and replace the title with the text "Download File":

If you're using a different icon for your download, or want it to say something different, then be sure to alter the code accordingly.

Conference Season

Here it is:

RMOUG - Denver, CO

One of the larger conferences, the year usually starts out in Denver for me, where crowds are always large and appreciative. RMOUG has some of the most dedicated volunteers and puts on a great conference year after year.

GAOUG - Atlanta, GA

This will be my first time at GAOUG, and I'm excited to help them get their annual conference started. Lots of familiar faces will be in attendance. At only $150, if you near the Atlanta drive, it's worth checking out.

OCOJ - Williamsburg, VA (submitted)

This will (hopefully) also be my first Oracle Conference on the James. Held in historic Williamsburg, OCOJ is also a steal at just $99.

UTOUG - Salt Lake City, UT

I'll head back out west to Utah for UTOUG. Always good to catch up with the local Oracle community in Utah each year. Plus, I make my annual SLC brewery tour while there.

GLOC - Cleveland, OH (submitted)

Steadily growing in popularity, the folks at GLOC put on an excellent conference. Waiting to hear back on whether my sessions got accepted.

KSCOPE - Chicago, IL

Like everyone, I'm looking forward to one of the best annual technical conferences that I've regularly attended. In addition to the traditional APEX content, there's few surprises planned this year!

ECO - Raleigh/Durham, NC (planning on submitting)

ECO - formerly VOUG - is also growing in numbers each year. There's a lot of tech in the RDU area, and many of the talented locals present here. Bonus: Jeff Smith curated brewery/bar tour the night before.

OOW - San Francisco, CA (planning on submitting)

As always, the conference year typically ends with the biggest one - Oracle Open World. While there's not as much APEX content as there once way, it's always been more focused on the marketing side of technology, which is good to hear every now and then.

User group conferences are one of the best types of training available, especially since they typically cost just a couple hundred dollars. I encourage you to try to check out one near you. Smaller groups are also great places to get an opportunity to present. In addition to annual conferences, many smaller groups meet monthly or quarterly and are always on the look out for new content.

Refreshing PL/SQL Regions in APEX

If you've been using APEX long enough, you've probably used a PL/SQL Region to render some sort of HTML that the APEX built-in components simply can't handle. Perhaps a complex chart or region that has a lot of custom content and/or layout. While best practices may be to use an APEX component, or if not, build a plugin, we all know that sometimes reality doesn't give us that kind of time or flexibility.

While the PL/SQL Region is quite powerful, it still lacks a key feature: the ability to be refreshed by a Dynamic Action. This is true even in APEX 5. Fortunately, there's a simple workaround that only requires a small change to your code: change your procedure to a function and call it from a Classic Report region.

In changing your procedure to a function, you'll likely only need to make one type of change: converting and htp.prn calls to instead populate and return a variable at the end of the function. Most, if not all of the rest of the code can remain untouched.

Here's a very simple example:

Before:

PROCEDURE print_region

(p_item IN VARCHAR2)

IS

BEGIN

htp.prn('This is the value: ' || p_item);

END;

After:

FUNCTION print_region

(p_item IN VARCHAR2)

RETURN VARCHAR2

IS

l_html VARCHAR2(100);

BEGIN

l_html := 'This is the value: ' || p_item;

RETURN l_html;

END;

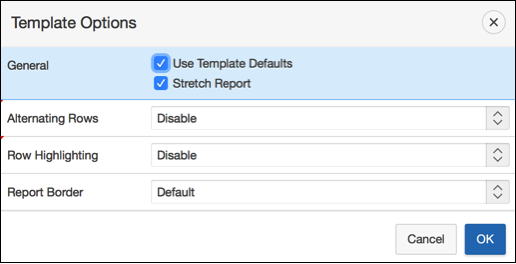

On the APEX side, simply create a Classic Report and set the query to something like this that refers to your function:

SELECT package_name.function_name(p_item => :P1_ITEM) result FROM dualYou'll then want to edit the Attributes of the Classic Report and turn off Pagination, set the Headings type to None and ensure Partial Page Refresh is enabled. Next, click on the Template Options and Disable Alternating Rows and Row Highlighting and then check Stretch Report.

Make any other UI tweaks that you need, and you should now have a Dynamic PL/SQL Region that can be refreshed in a Dynamic Action.

APEX 5 Cheat Sheet

Not to be outdone, I created a cheat sheet for the APEX 5 Keyboard Shortcuts. Not only can you view it online, but you can also download a PDF version of it. Check it out and let me know if there's anything that you'd like to see added.

Hide and Seek

In migrating SERT from 4.2 to 5.0, there's a number of challenges that I'm facing. This has to do with the fact that I am also migrating a custom theme to the Universal Theme, as almost 100% of the application just worked if I chose to leave it alone. I didn't. More on that journey in a longer post later.

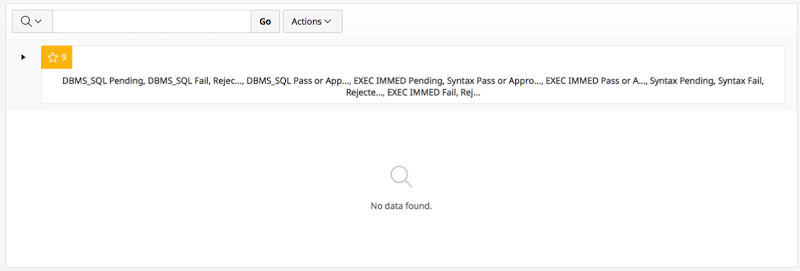

In any case, some of the IR filters that I have on by default can get a bit... ugly. Even in the Universal Theme:

In APEX 4.2, you could click on the little arrow, and it would collapse the region entirely, leaving only a small trace that there's a filter. That's no longer the case:

So what to do... Enter CSS & the Universal Theme.

Simply edit the page and add the following to the Inline CSS region (or add the CSS to the Theme Roller if you want this change to impact all IRs):

.a-IRR-reportSummary-item { display: none; }

This will cause most of the region to simply not display at all - until you click on the small triangle icon, which will expand the entire set of filters for the IR. Clicking it again makes it go away. Problem solved with literally three words (and some punctuation).